After floods and scheduling conflicts prevented a trip to Siding Spring last year, I’ve been lucky enough to sit in on some remote observing work as a last ‘official’ part of my Synapse residency project.

I joined Instrumentation Scientists Dr. Doris Grosse and Dr. Michael Copeland for three nights, controlling the 2.3 metre telescope at Siding Spring Observatory from here in Canberra. While observing, Doris and Michael work in two shifts from about 7pm until 6am. I was relieved not to have to become nocturnal too, and was able to grasp the scope of what they were doing between 7.30 and 9pm each night.

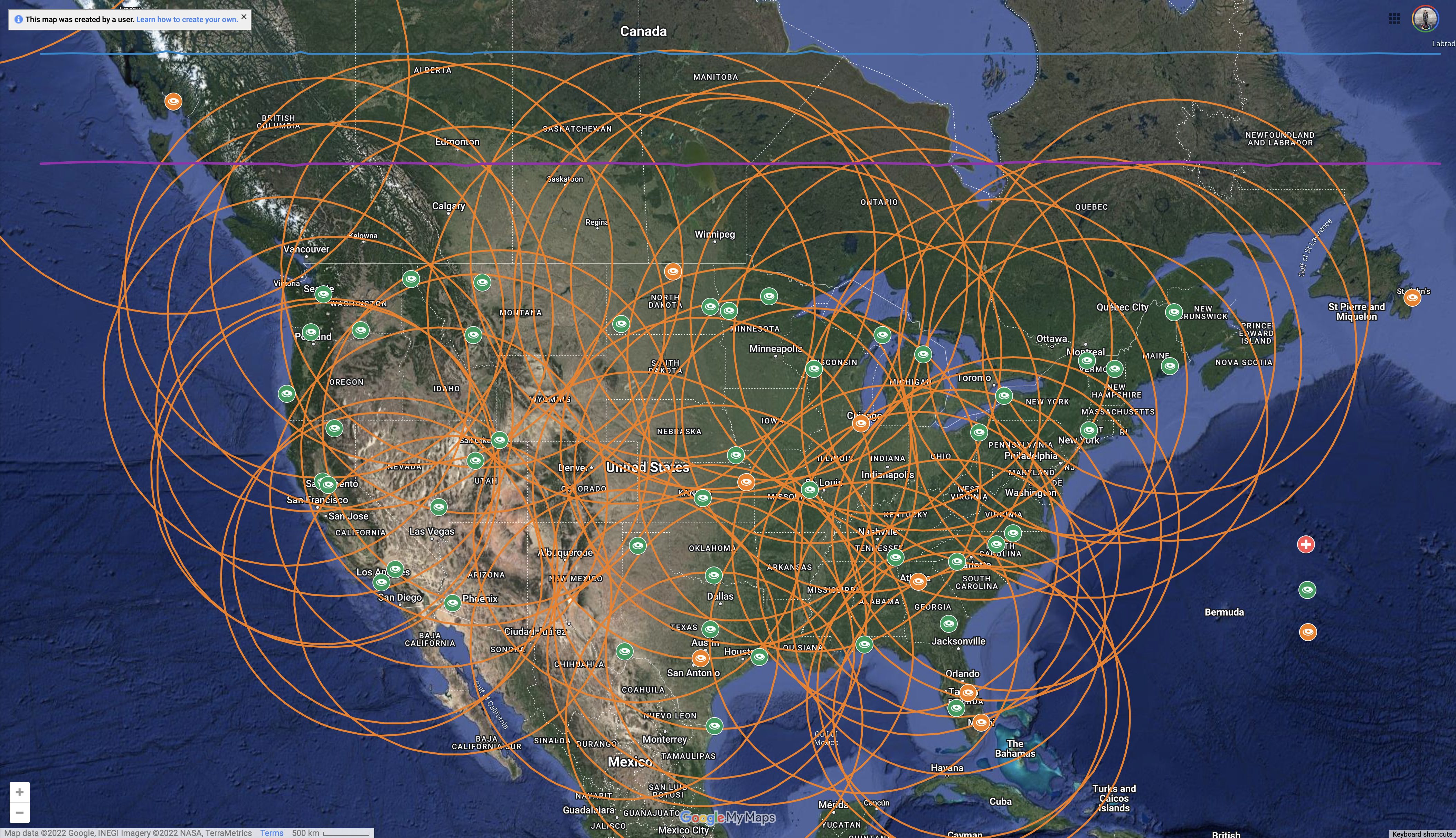

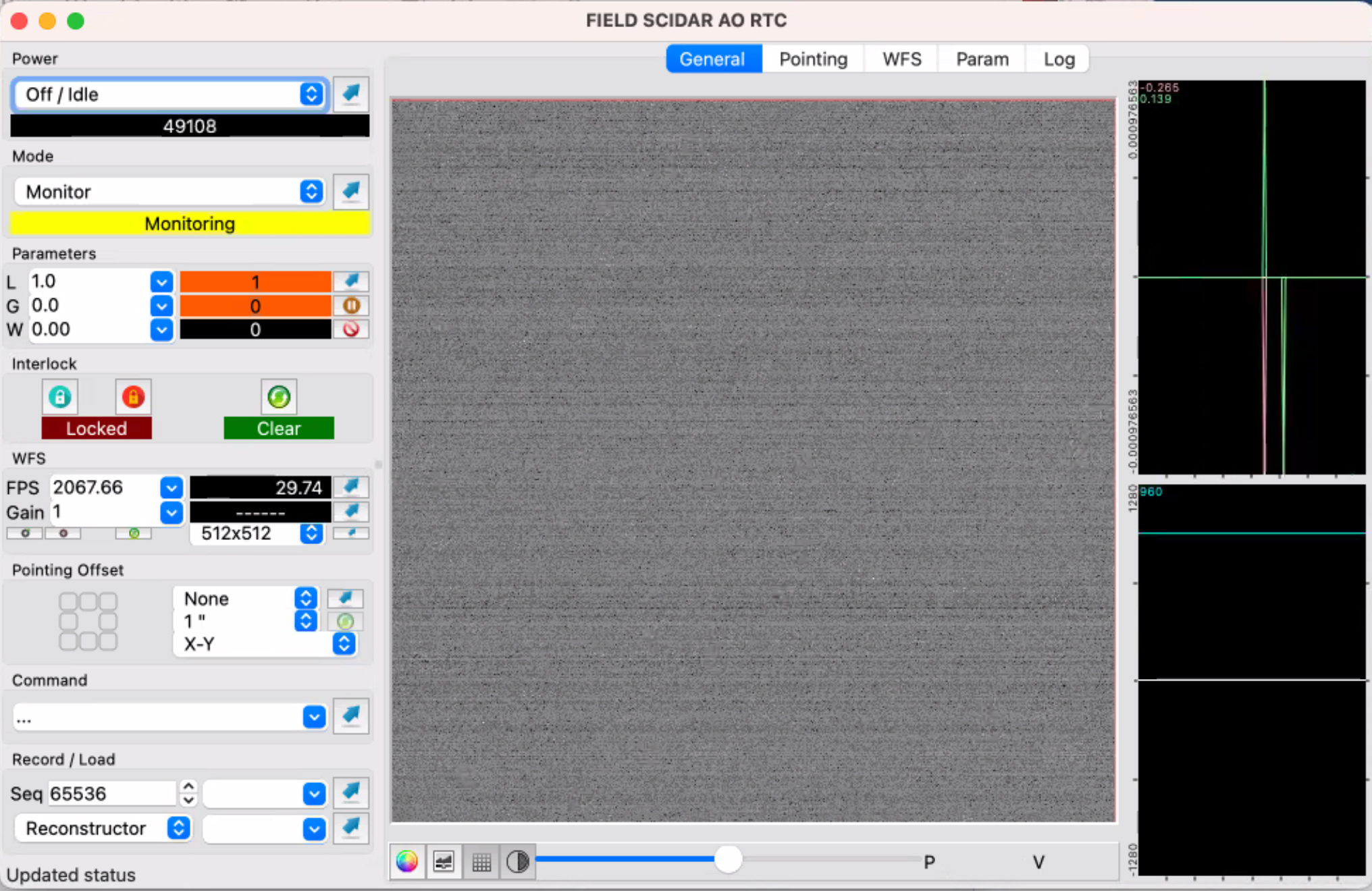

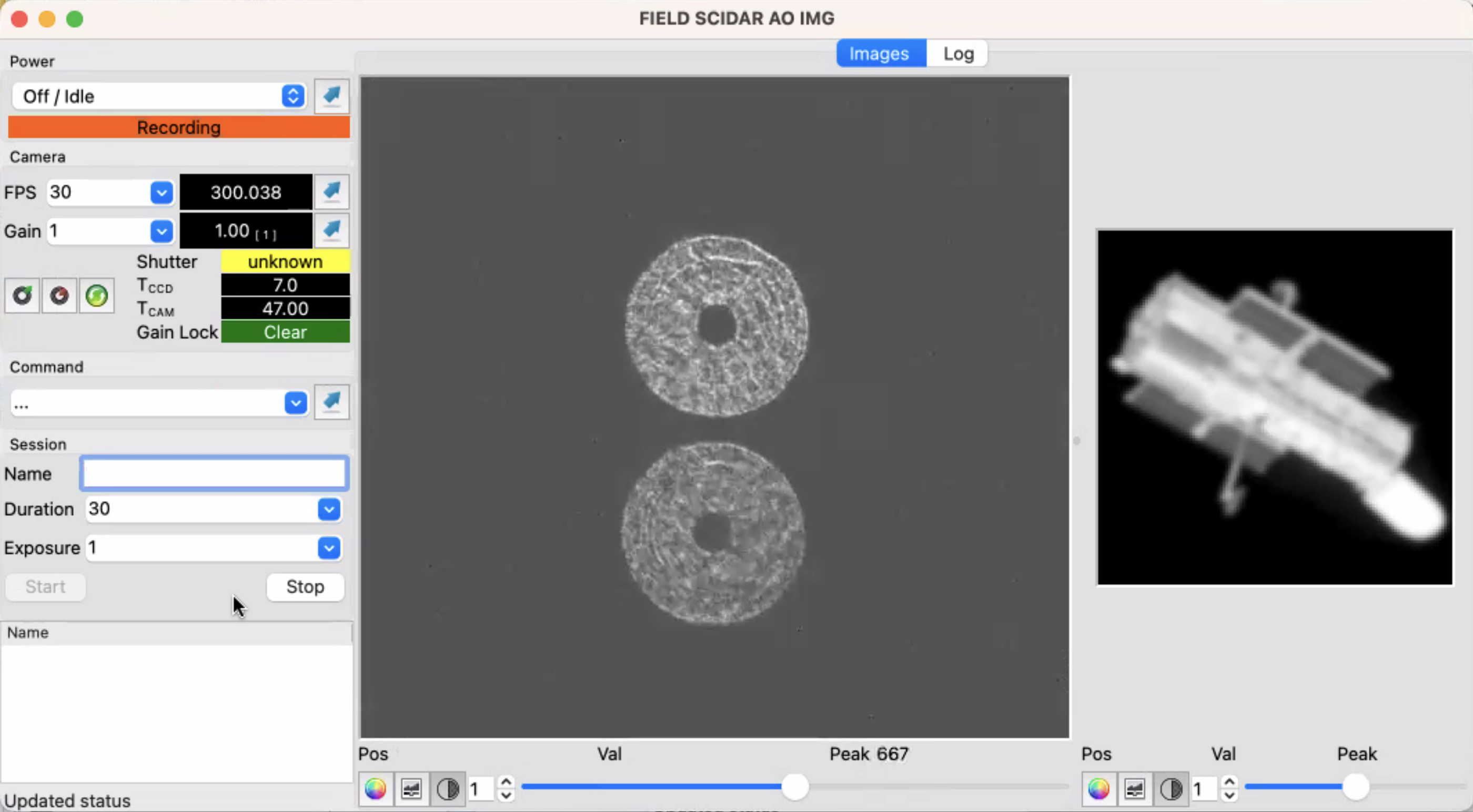

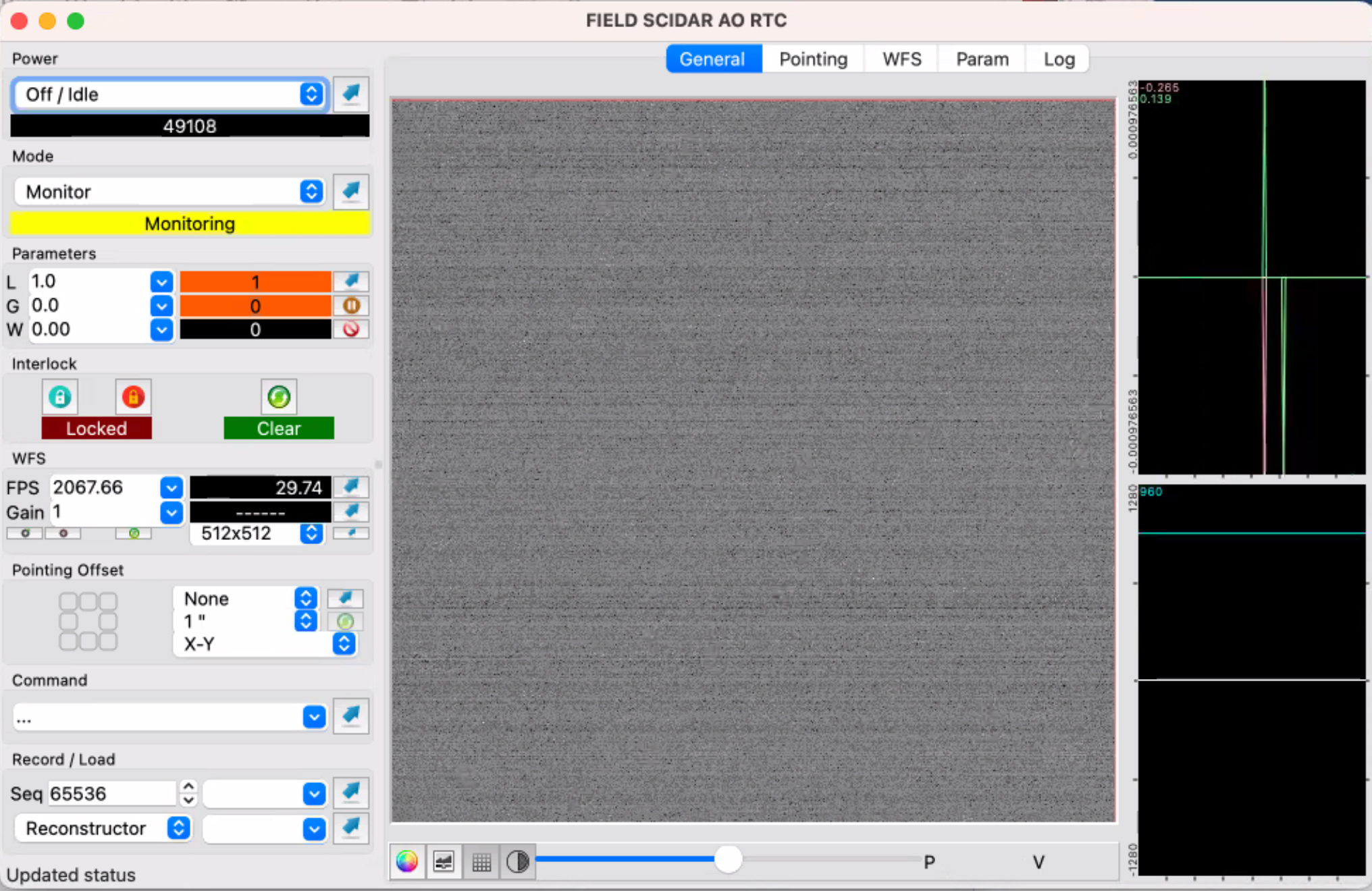

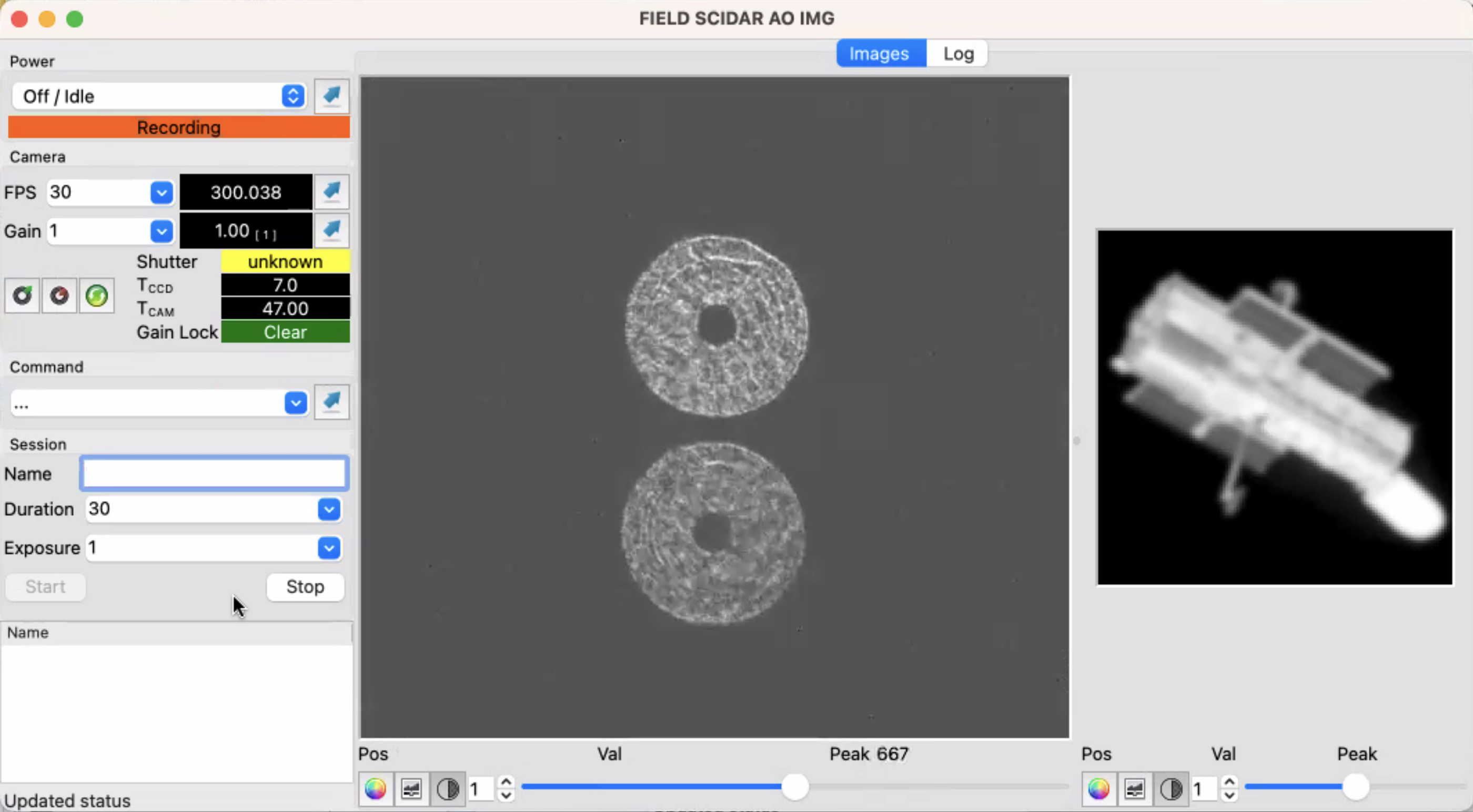

These observations were part of an ongoing project to research atmospheric turbulence and build an atmospheric turbulence profiler. To do this, double stars are observed using a stereo-SCIDAR (Scintillation Detection and Ranging) technique, which records the scintillation of the two stars and correlates them to measure the turbulence profile of the atmosphere along the line of sight.
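The triangulation idea behind stereo-SCIDAR can be sketched in a few lines of Python. This is a toy one-dimensional model, not the project’s pipeline: a single turbulent layer at altitude h is seen by both stars, so the pattern from one star is a copy of the other’s shifted by θ·h, where θ is the angular separation of the double star. Cross-correlating the two patterns recovers that shift, and dividing by θ gives the altitude. The separation (10″), pupil sampling (2 cm per pixel) and layer altitude are hypothetical values chosen for illustration. A real instrument works with 2-D images averaged over many frames, and the strength of each correlation peak also indicates how turbulent that layer is.

```python
import numpy as np

ARCSEC_TO_RAD = np.pi / (180 * 3600)

def simulate_stereo_patterns(layer_alt_m, sep_arcsec, pixel_m, n=256, seed=0):
    """Toy model: one turbulent layer seen by both stars of a double.

    Star B's pattern is star A's pattern shifted by theta * h metres,
    rounded to whole pixels. All parameter values are hypothetical.
    """
    rng = np.random.default_rng(seed)
    pattern = rng.standard_normal(n)  # 1-D stand-in for a scintillation pattern
    shift_px = int(round(sep_arcsec * ARCSEC_TO_RAD * layer_alt_m / pixel_m))
    return pattern, np.roll(pattern, shift_px)

def correlation_peak_lag(a, b):
    """Lag (pixels) that maximises the circular cross-correlation of a and b."""
    a = a - a.mean()
    b = b - b.mean()
    # ifft(conj(A) * B)[k] = sum_m a[m] * b[m + k]  (circular)
    corr = np.fft.ifft(np.conj(np.fft.fft(a)) * np.fft.fft(b)).real
    lag = int(np.argmax(corr))
    return lag if lag <= len(a) // 2 else lag - len(a)

def lag_to_altitude(lag_px, sep_arcsec, pixel_m):
    """Convert a pattern shift back to a layer altitude: h = d / theta."""
    return lag_px * pixel_m / (sep_arcsec * ARCSEC_TO_RAD)

a, b = simulate_stereo_patterns(layer_alt_m=10_000, sep_arcsec=10, pixel_m=0.02)
lag = correlation_peak_lag(a, b)
altitude = lag_to_altitude(lag, sep_arcsec=10, pixel_m=0.02)
print(lag, round(altitude))
```

Because the shift is rounded to whole pixels, the recovered altitude is quantised in steps of the pixel size divided by θ, about 410 m with these numbers, so the 10 km layer comes back as roughly 9.9 km.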

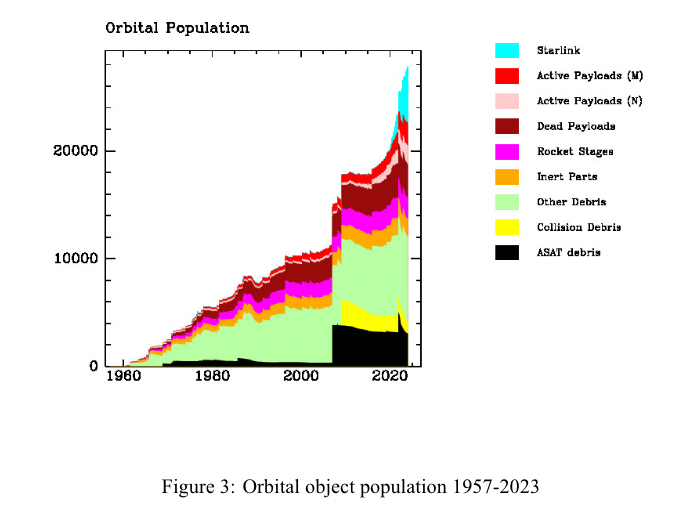

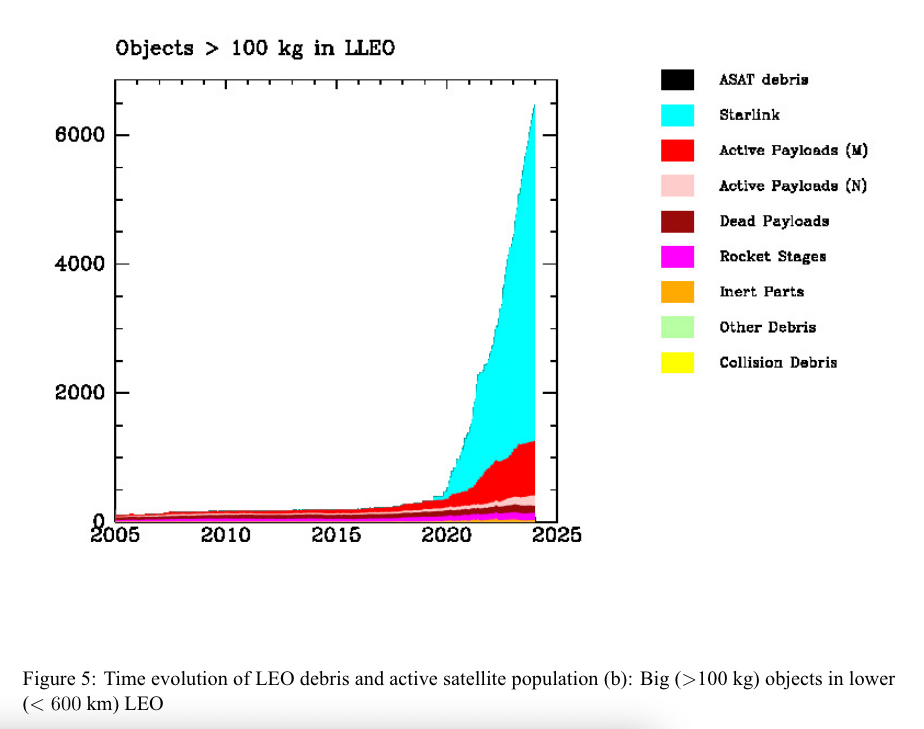

Atmospheric turbulence is caused by factors such as wind and weather systems that make air currents of different temperatures mix and collide. These temperature differences distort starlight as it passes through the atmosphere, which is what makes stars ‘twinkle’. Understanding atmospheric turbulence allows scientists to build adaptive optics (AO) systems for telescopes that correct for these distortions. AO systems are crucial for obtaining clear, high-resolution data, and are a major capability of systems that track and visualise satellites and space debris. This matters for scientific research, but also for the other stakeholders in orbital space that I have previously been looking at, such as space situational awareness, commercial satellite operators and military agencies. These few nights were therefore an incredibly valuable opportunity to bring together divergent aspects of my Synapse project.

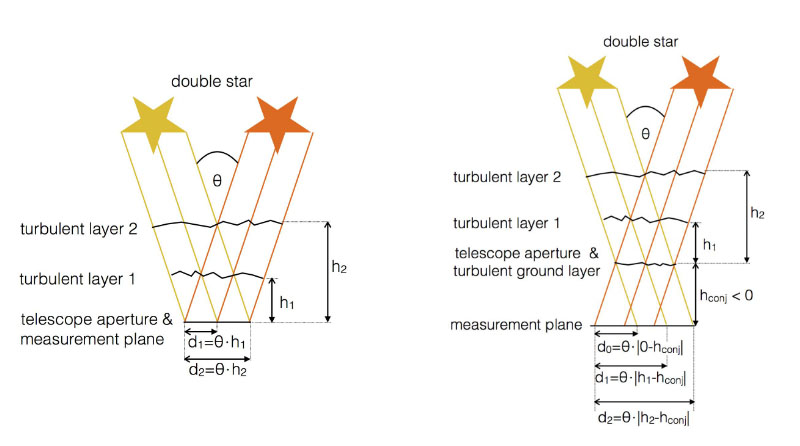

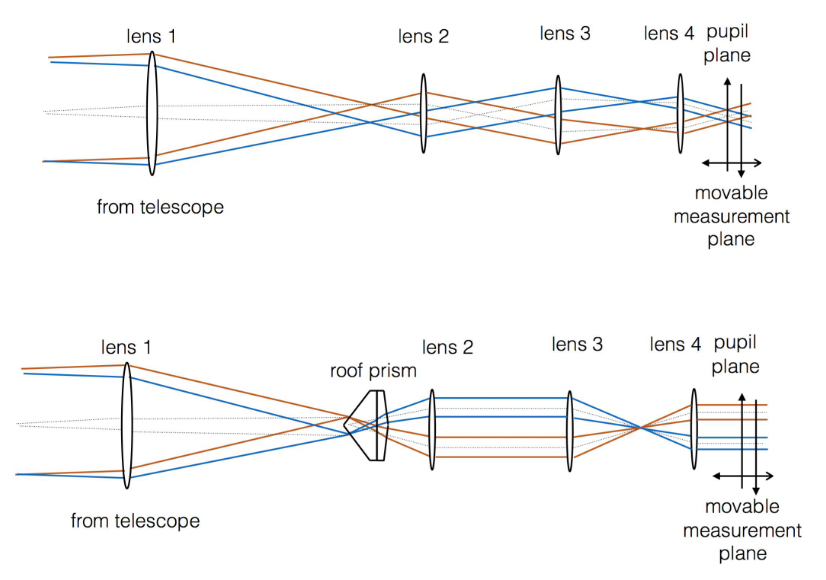

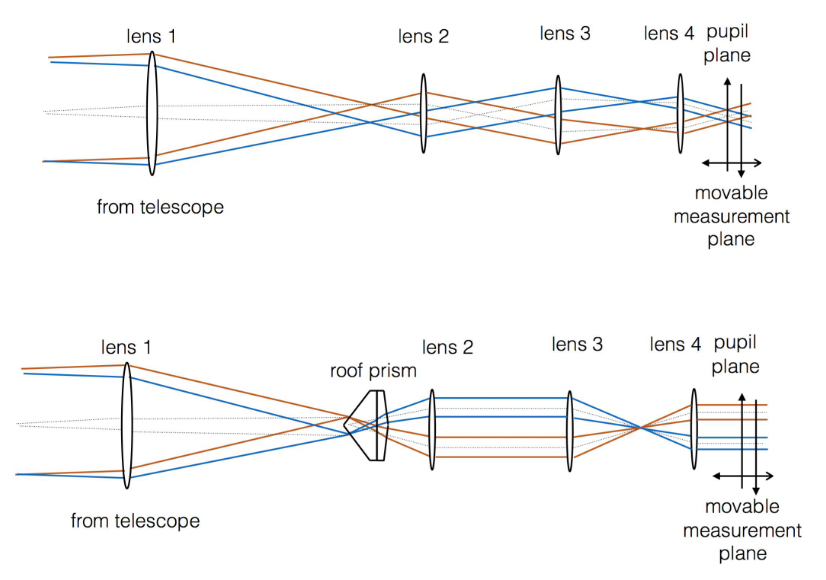

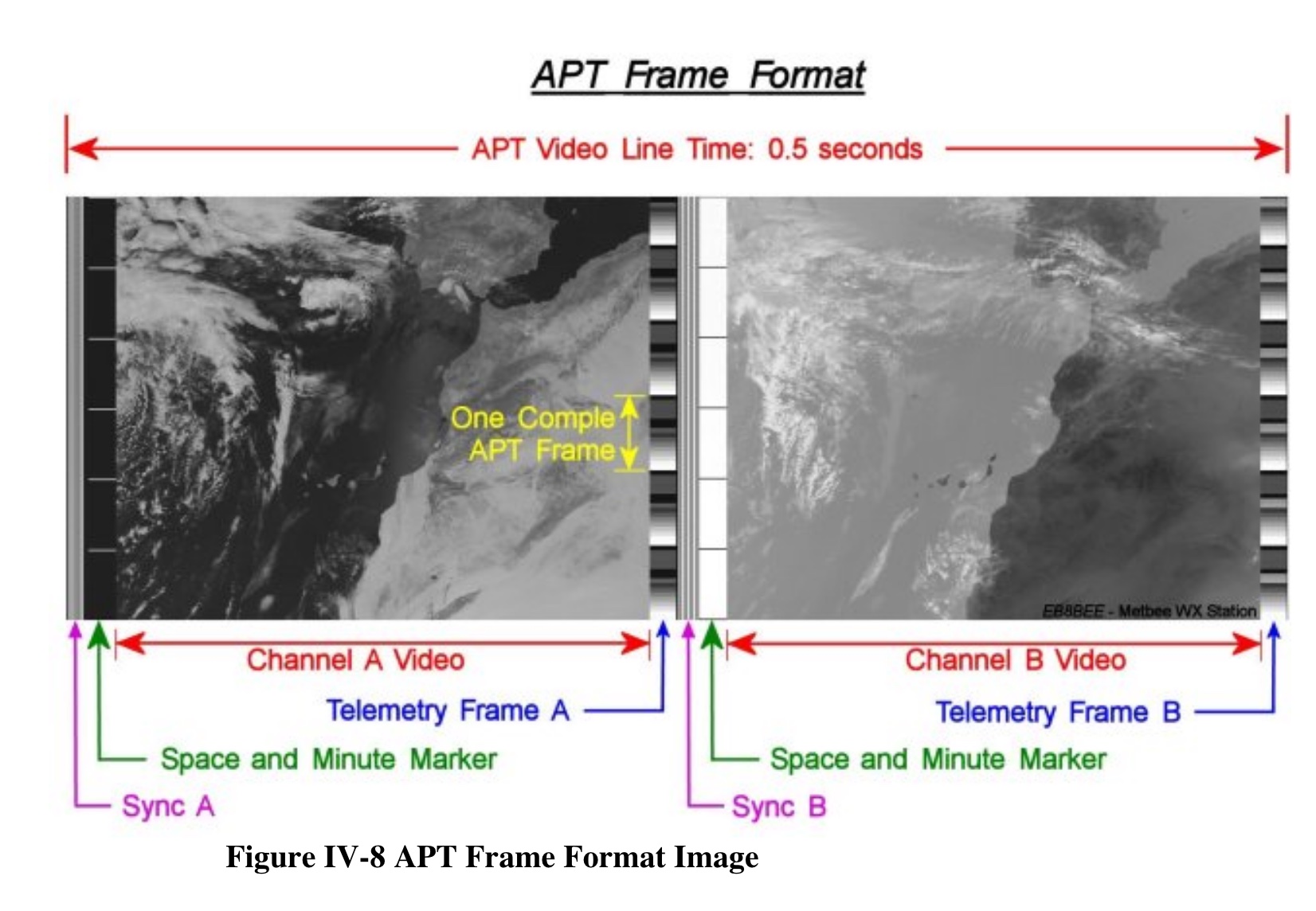

Before the first observing night I met with Doris, who explained the fundamental elements of the project to me. A distinctive feature of this project is that it uses a single-detector stereo-SCIDAR technique rather than conventional stereo-SCIDAR. In standard SCIDAR, the recorded images of the two stars of the double overlap. Conventional stereo-SCIDAR systems were developed to separate these images by using two detectors in the telescope system, but they require expensive and complex optical equipment. In this project, a prism added to the telescope’s optical design refracts the beams from the double star so that their images land separately on a single detector in the measurement plane.

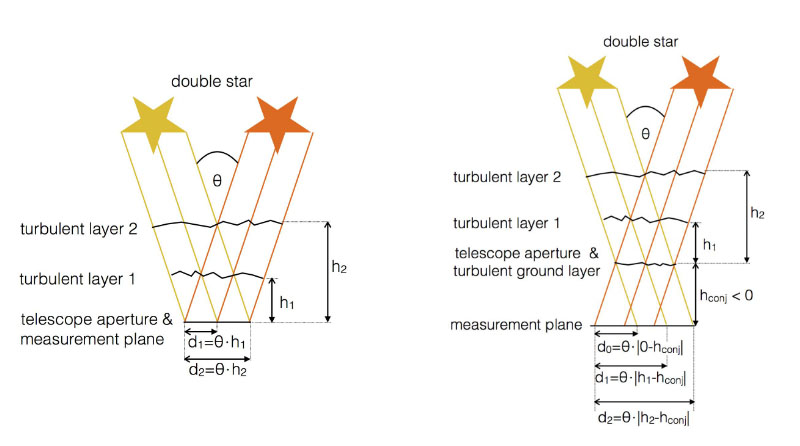

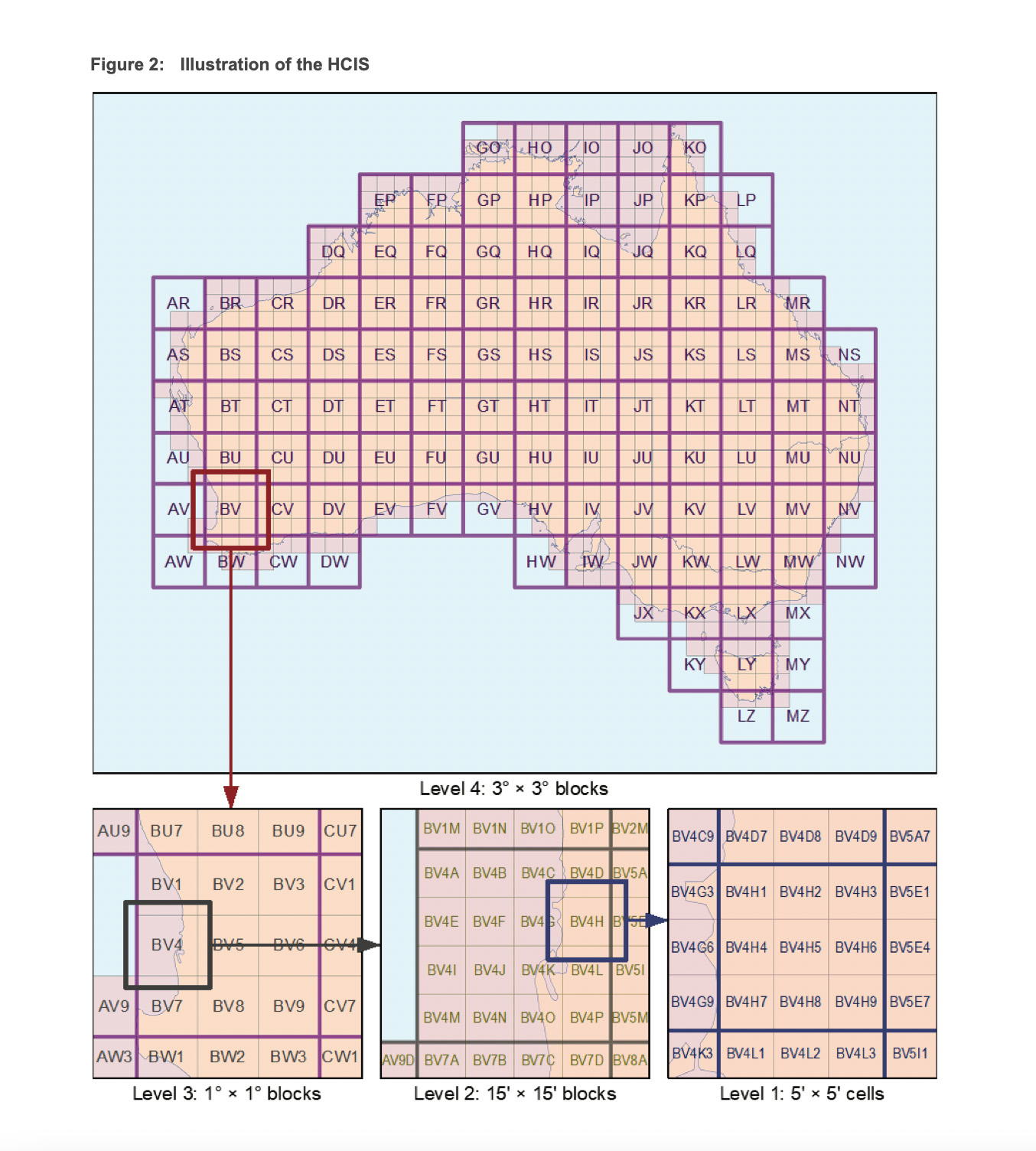

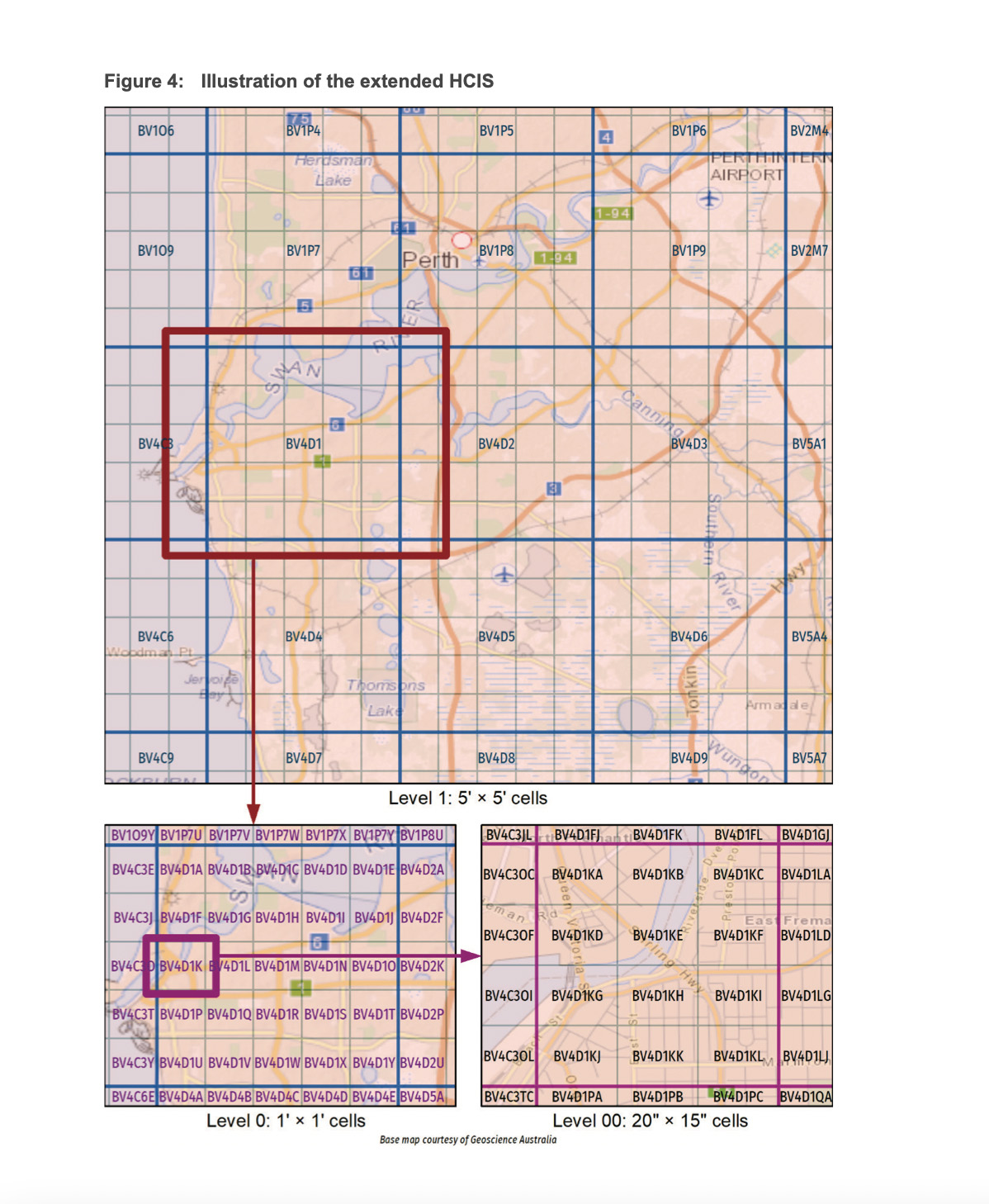

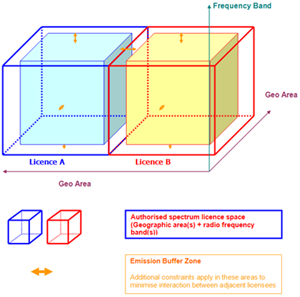

The figures below compare the two systems. Generalised SCIDAR extends the distance between the telescope aperture and the measurement plane, as though projecting the measurement plane to a negative altitude, and stereo-SCIDAR adds a roof prism to this design. The research led by Doris et al. at the Advanced Instrumentation and Technology Centre of the Research School of Astronomy and Astrophysics (RSAA) at Mt Stromlo is novel in that it captures high-resolution data at high altitude, measuring effectively up to 20 km into the atmosphere, using a single detector.

Above: Principle of conventional SCIDAR (left) – where the ground layer turbulence can’t be analysed, compared to generalised SCIDAR (right) – where the ground layer can be analysed due to the measurement plane at a negative altitude.

Schematic of the optical design of generalised SCIDAR (top) compared to stereo-SCIDAR with the addition of a roof prism (below).

Figures: Grosse, Doris, Francis Bennet, Visa Korkiakoski, Francois Rigaut, and Elliott Thorn. “Single Detector Stereo-SCIDAR for Mount Stromlo.” edited by Enrico Marchetti, Laird M. Close, and Jean-Pierre Véran, 99093D. Edinburgh, United Kingdom, 2016. https://doi.org/10.1117/12.2232149.
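The geometry in these figures can be summarised with a simplified form of the standard generalised-SCIDAR relations. This is a textbook approximation offered as a sketch, not the exact model used in this project. For a double star with angular separation $\theta$, turbulence at altitude $h$ casts two copies of its scintillation pattern onto the measurement plane, offset by

$$
d(h) = \theta\,(h - h_{\mathrm{gs}})
$$

where $h_{\mathrm{gs}}$ is the altitude the measurement plane is conjugated to. In conventional SCIDAR $h_{\mathrm{gs}} = 0$, so a ground layer ($h = 0$) gives $d = 0$ and has no propagation distance in which to produce scintillation. Placing the measurement plane at a negative altitude ($h_{\mathrm{gs}} < 0$) puts the ground layer $\lvert h_{\mathrm{gs}} \rvert$ above it:

$$
d(0) = \theta\,\lvert h_{\mathrm{gs}} \rvert > 0
$$

The highest measurable layer is roughly set by the two patterns still overlapping on a pupil of diameter $D$, i.e. $d < D$:

$$
h_{\max} \approx \frac{D}{\theta} + h_{\mathrm{gs}}
$$

As hypothetical arithmetic only, $D = 2.3\ \mathrm{m}$ and $\theta = 20'' \approx 9.70 \times 10^{-5}\ \mathrm{rad}$ give $D/\theta \approx 23.7\ \mathrm{km}$, which shows why double stars with separations of tens of arcseconds suit profiling up to around 20 km.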

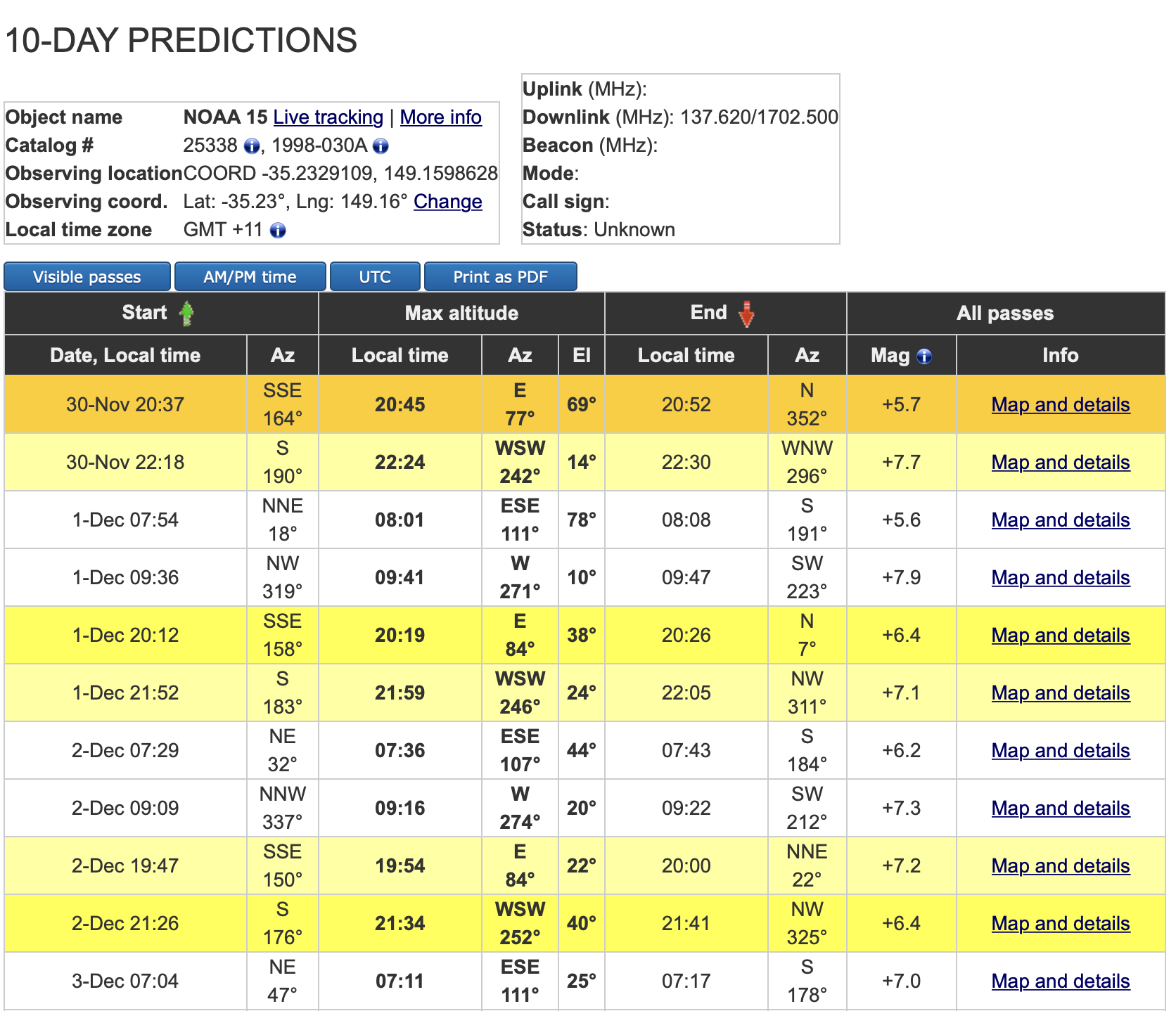

Night 1

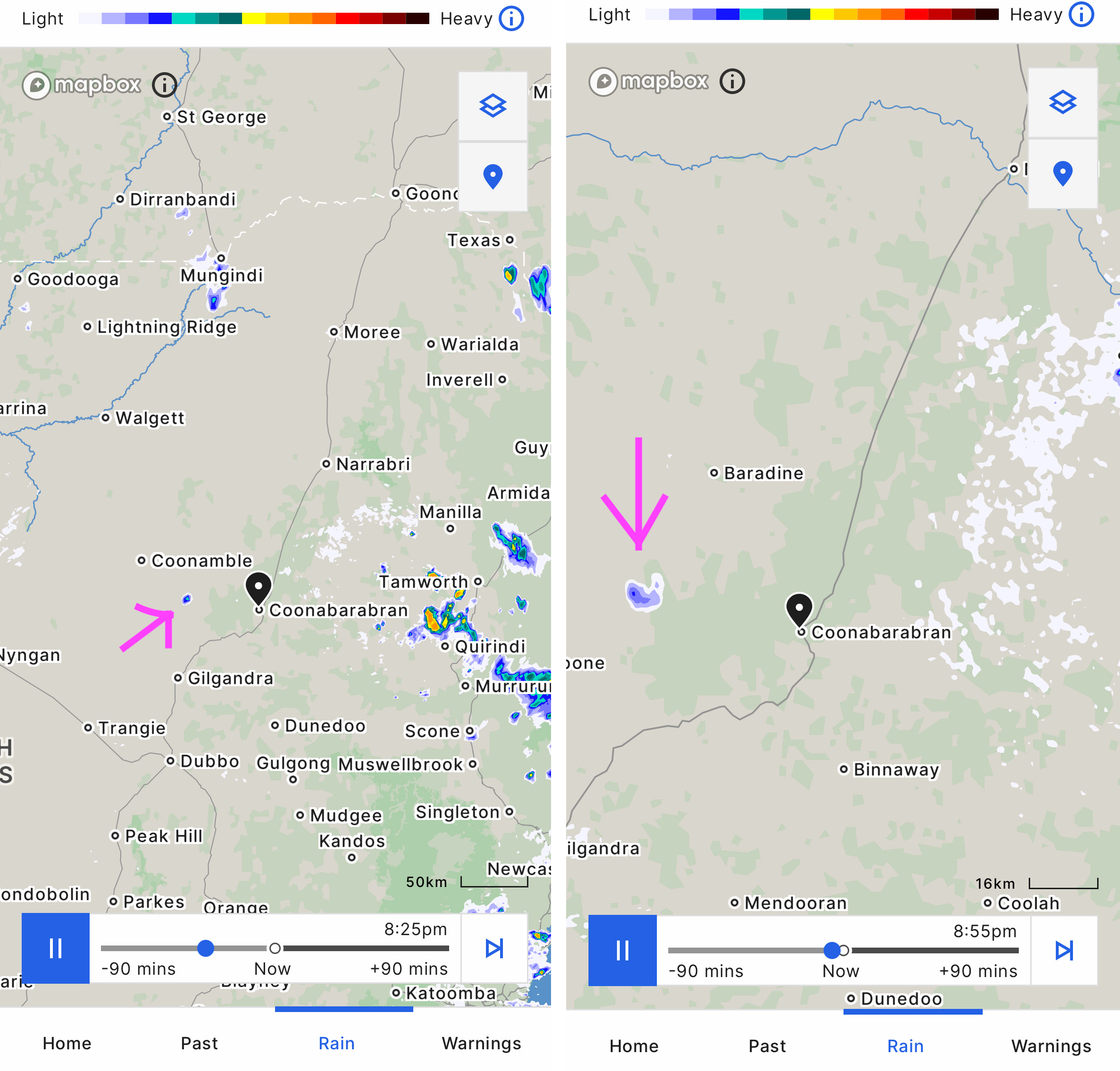

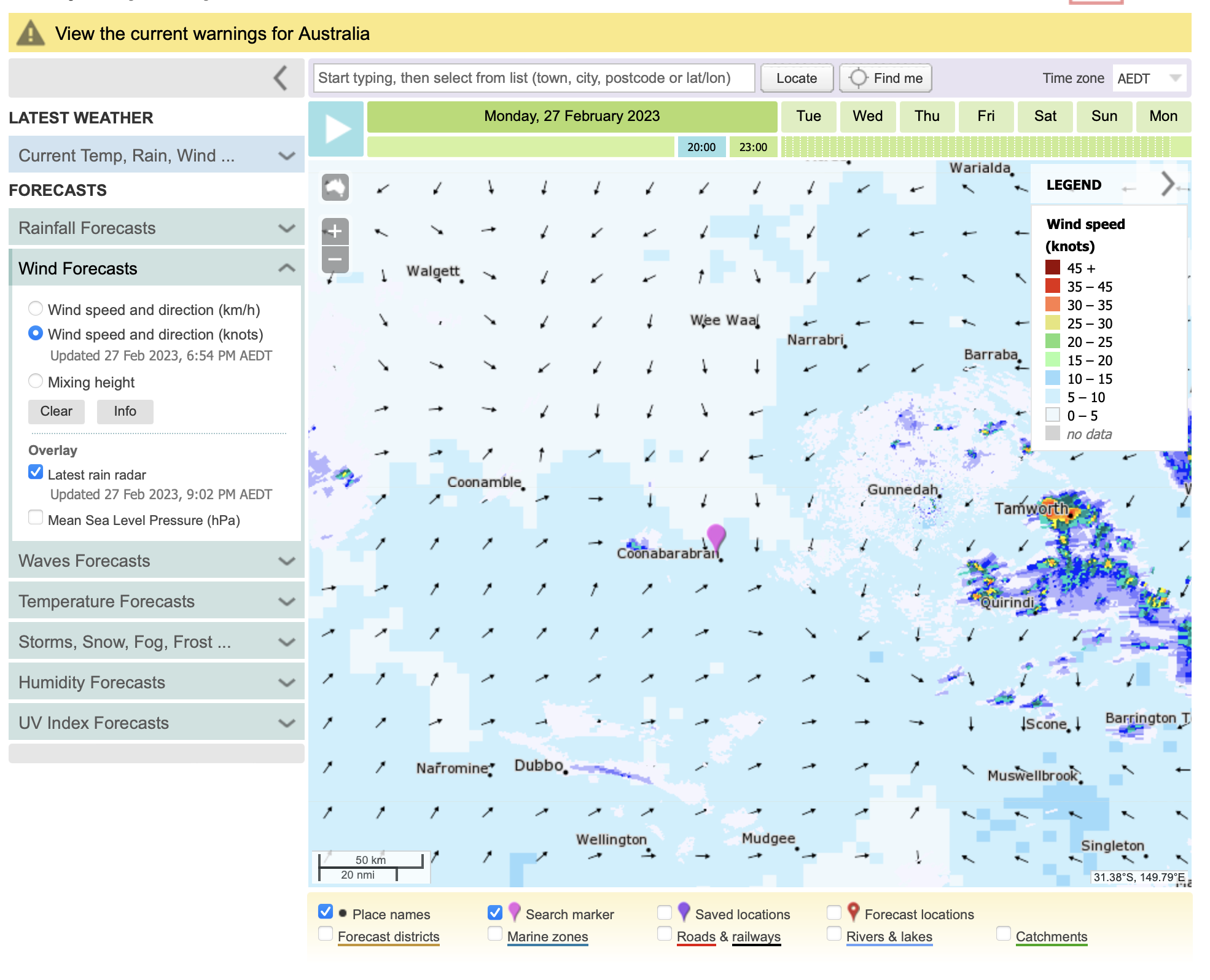

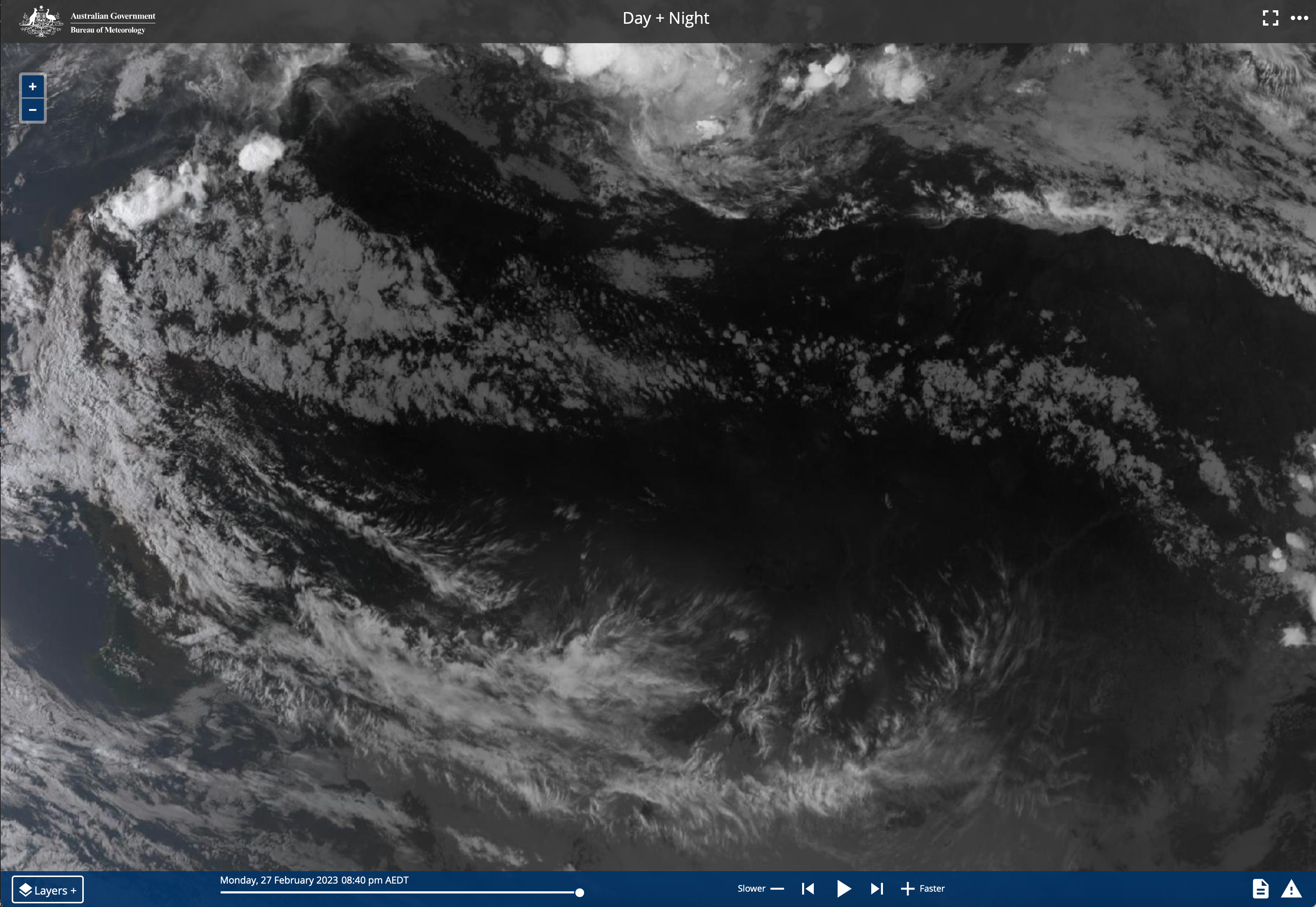

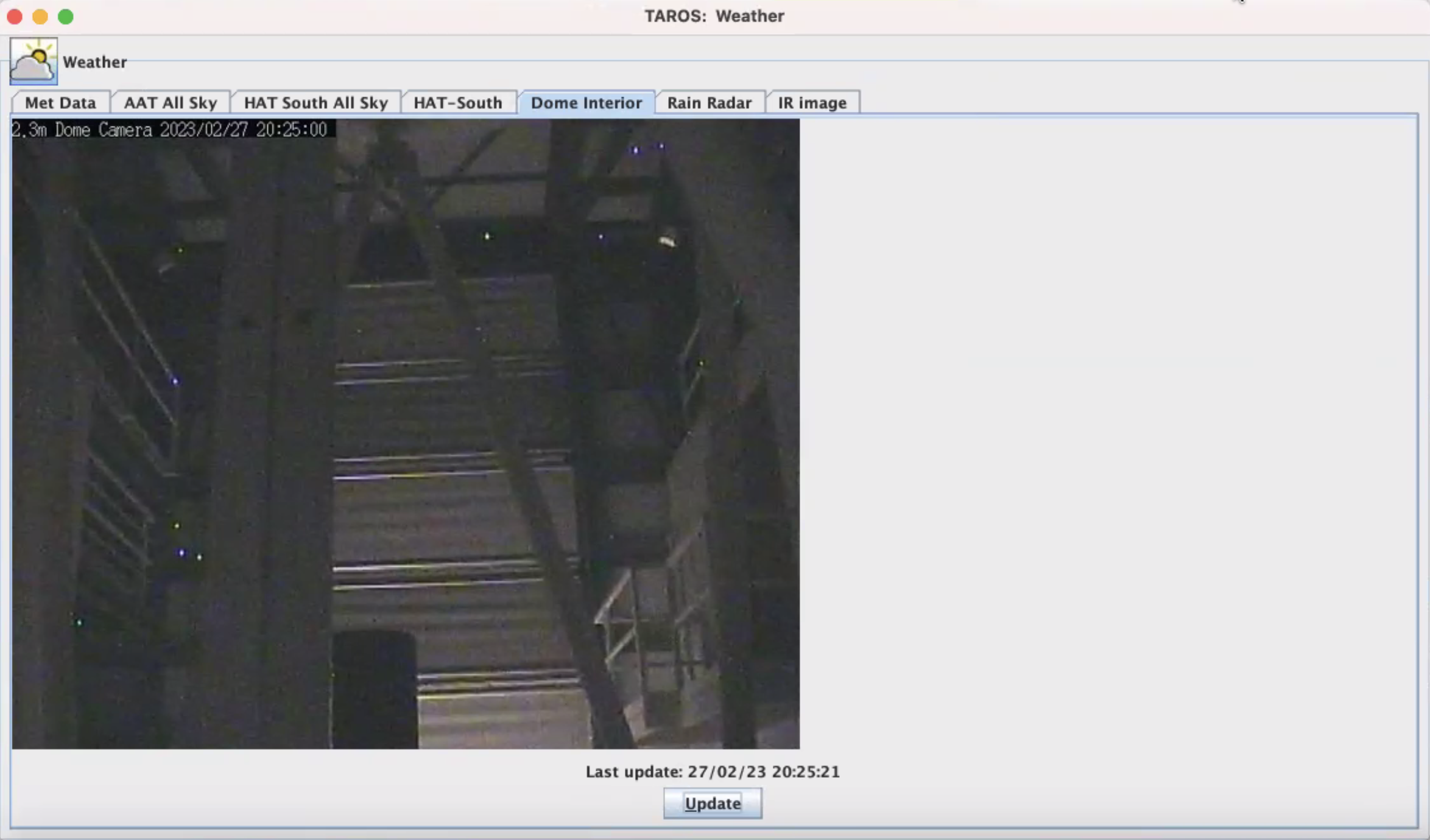

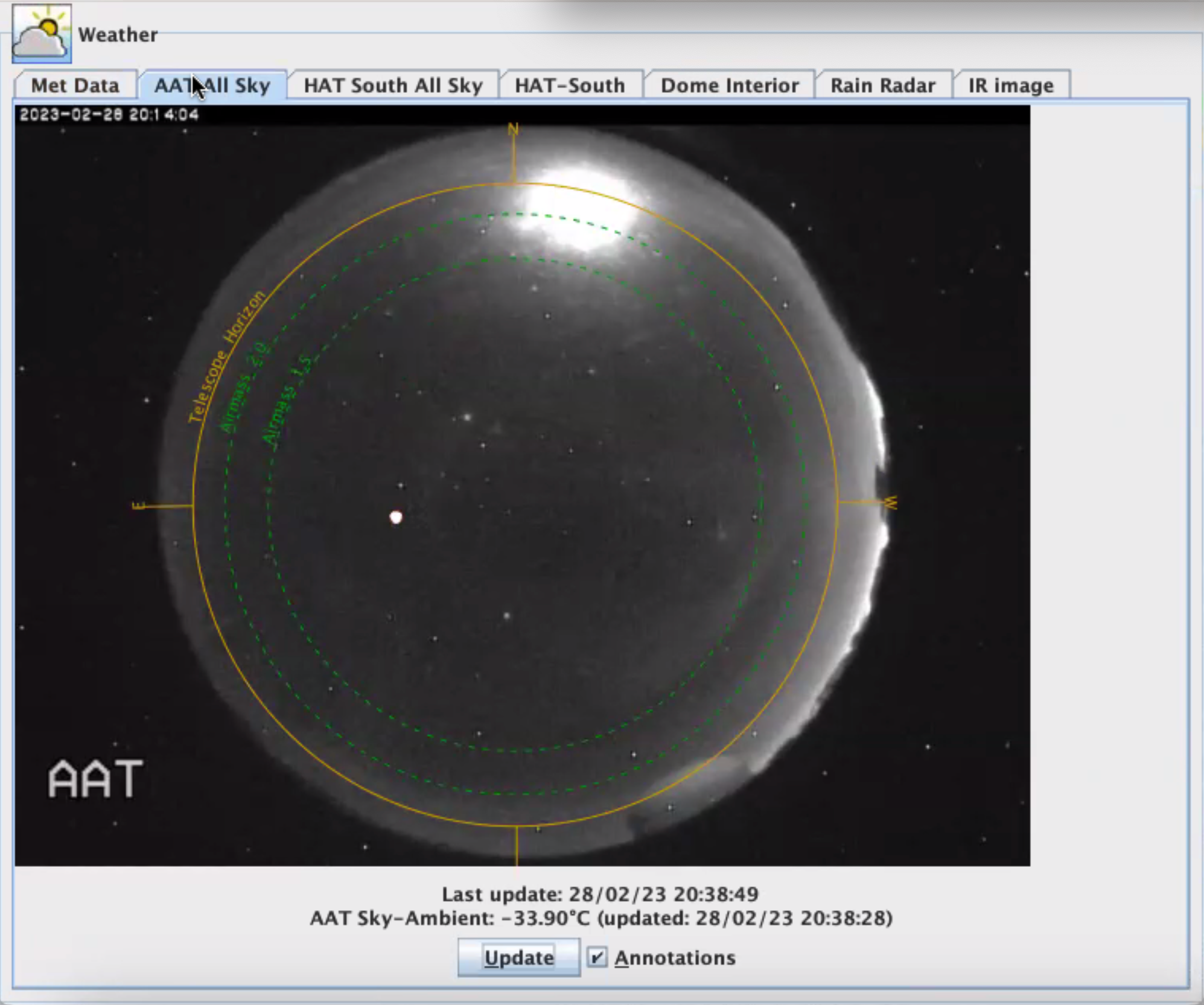

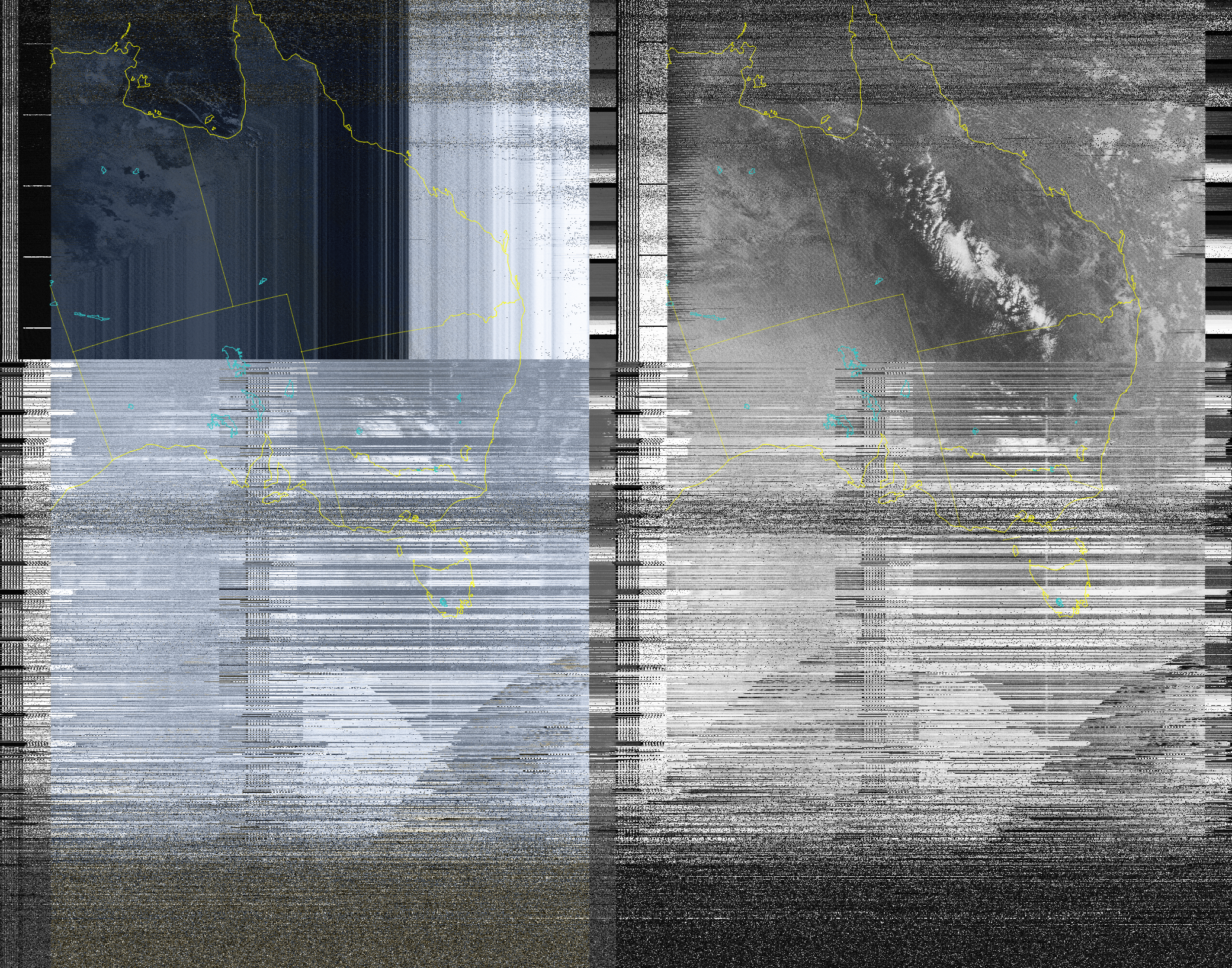

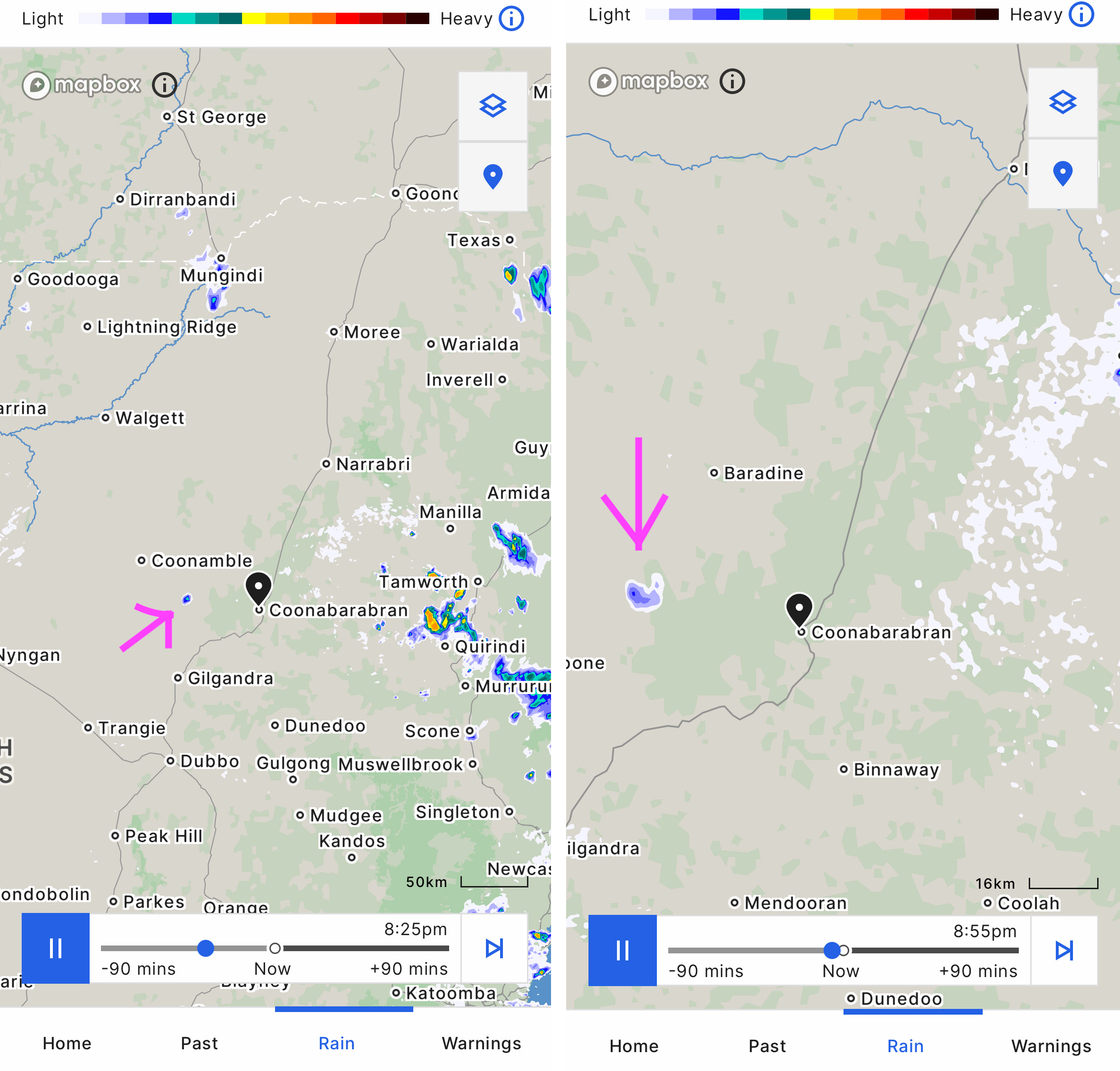

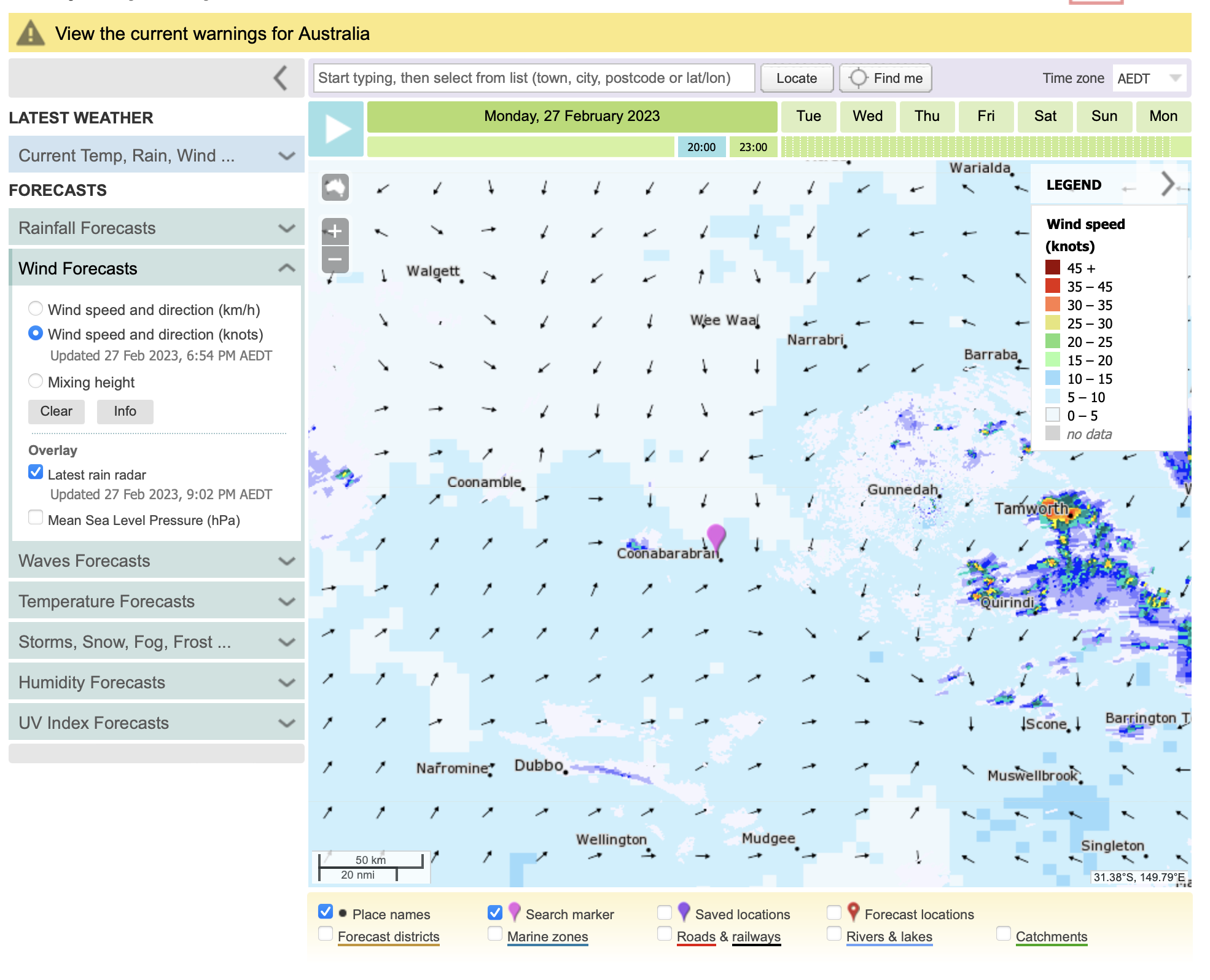

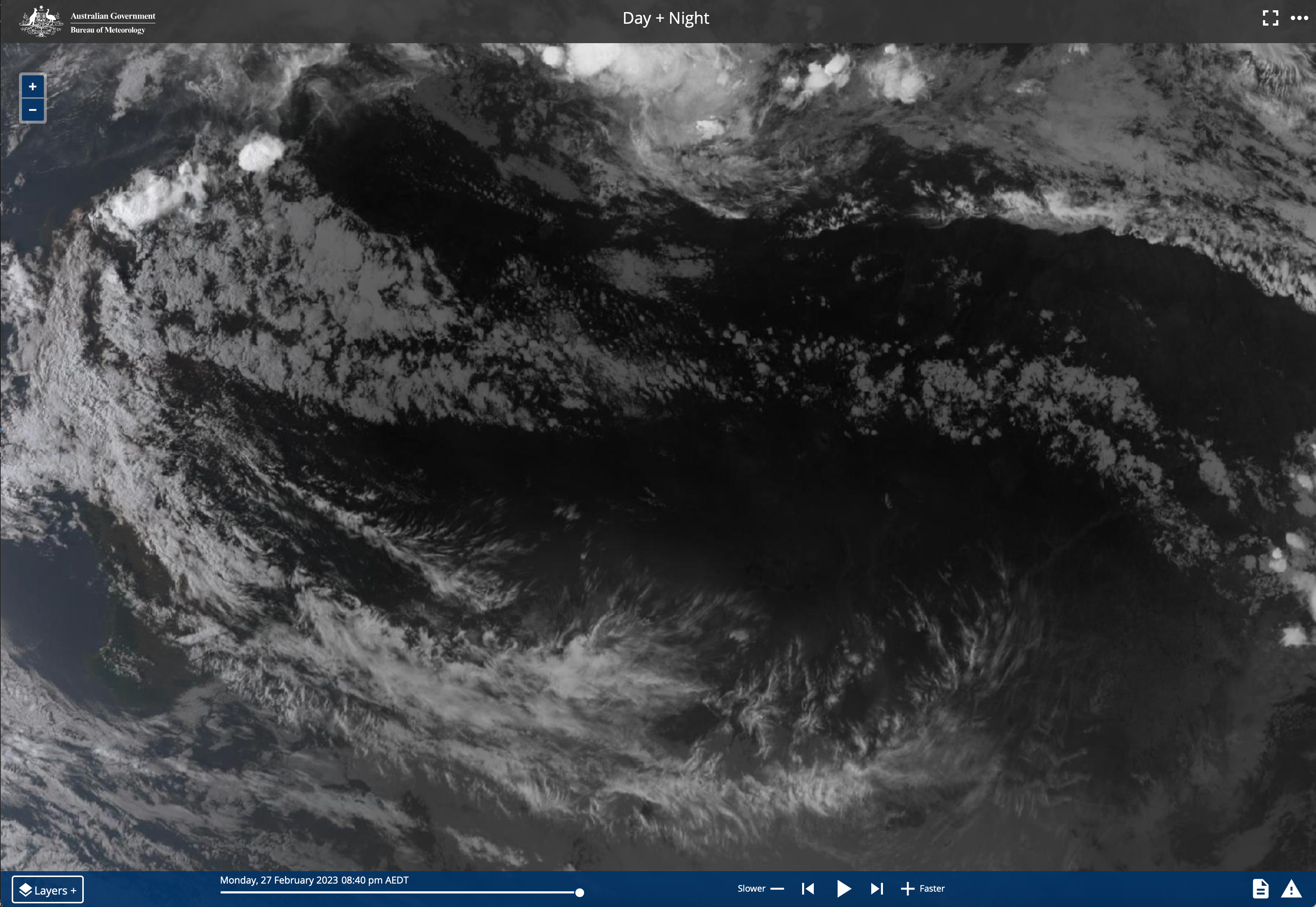

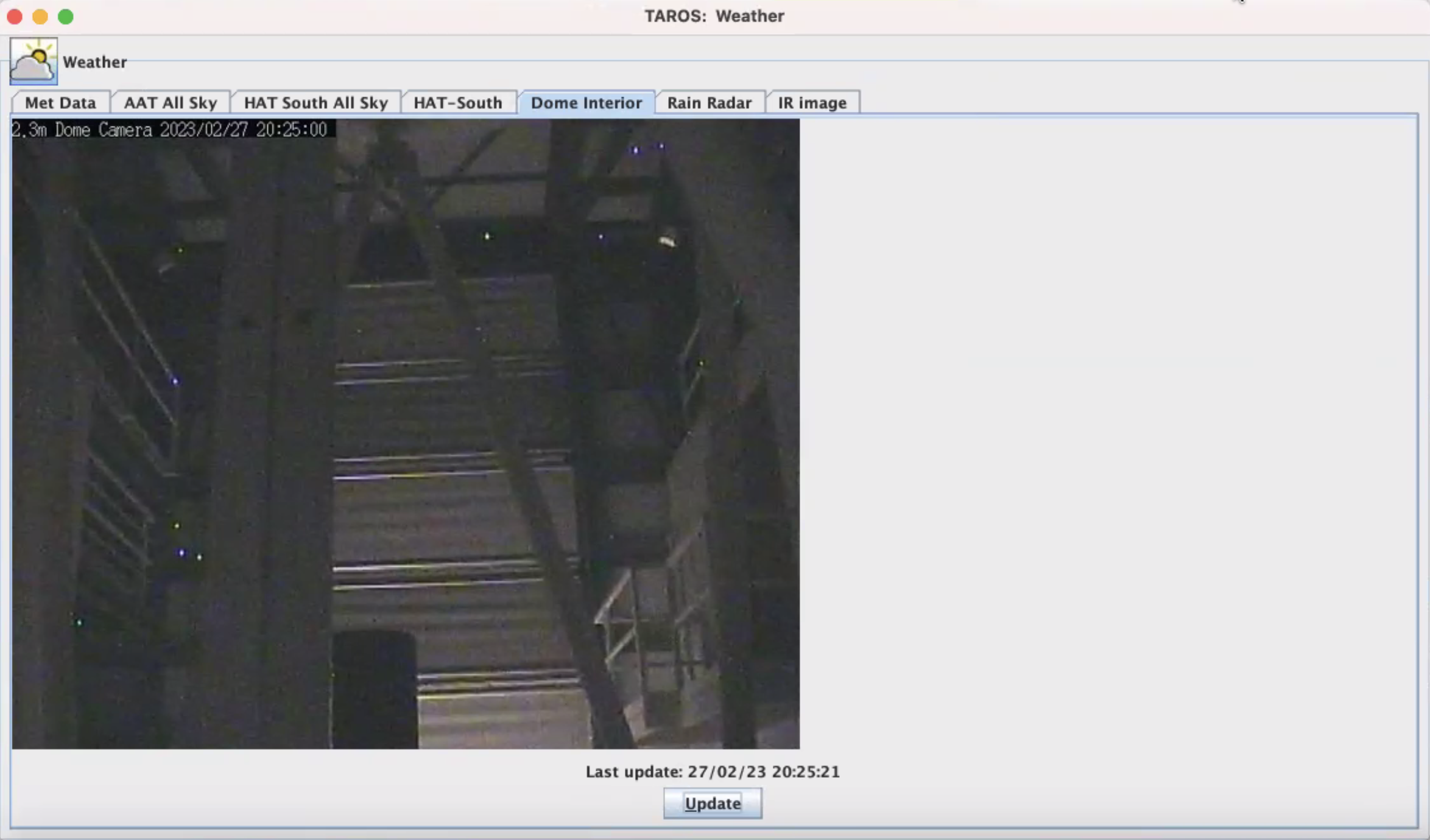

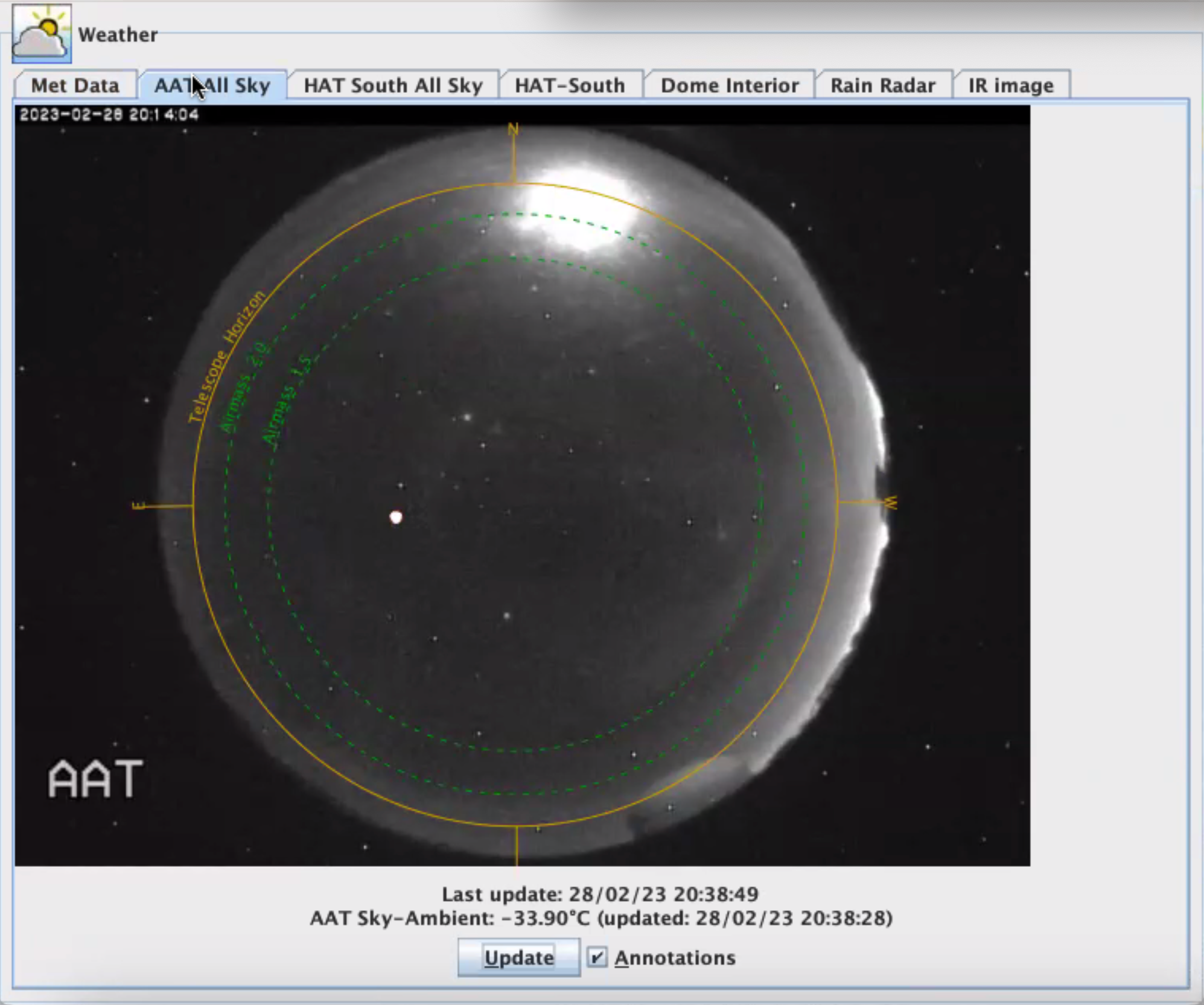

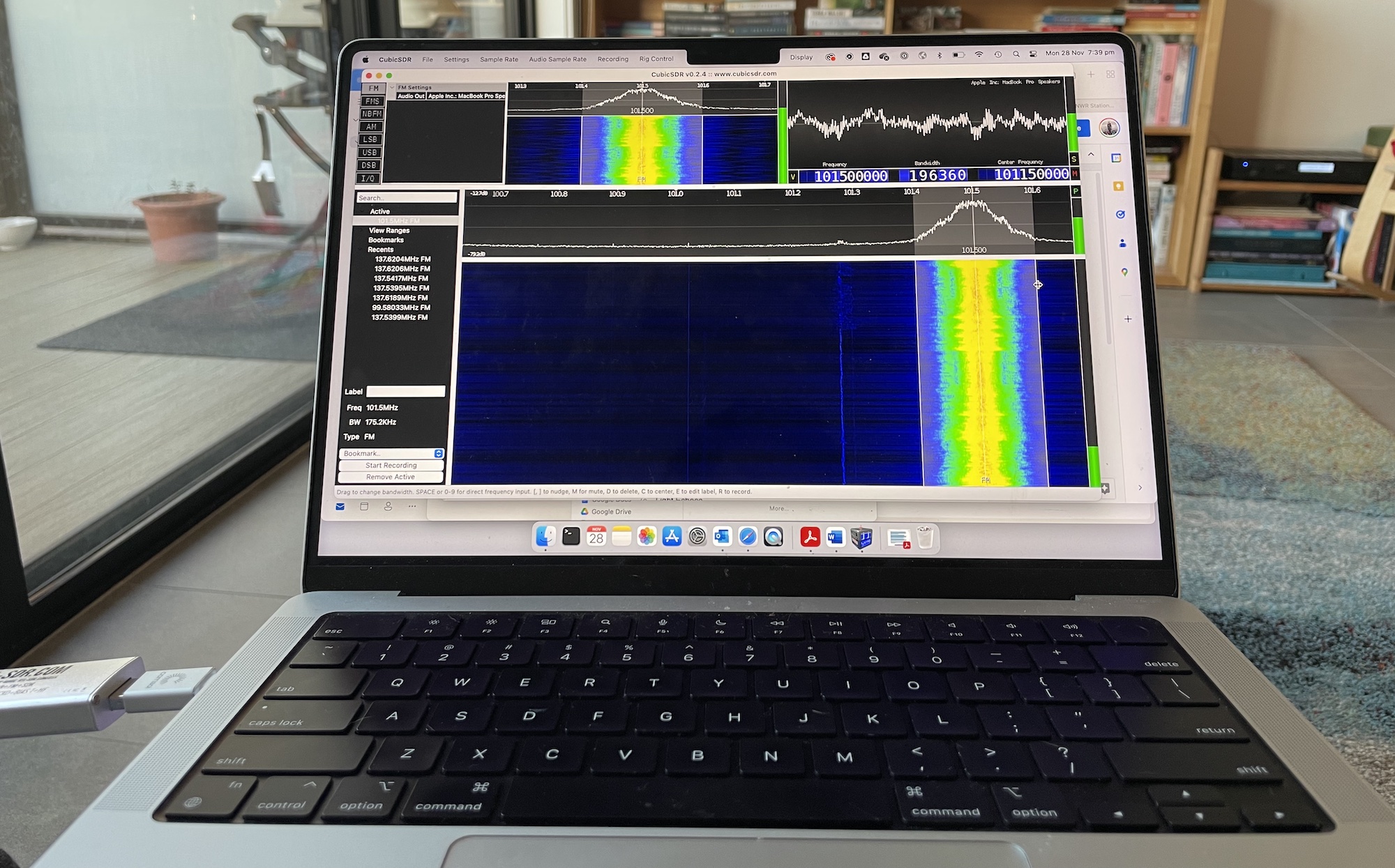

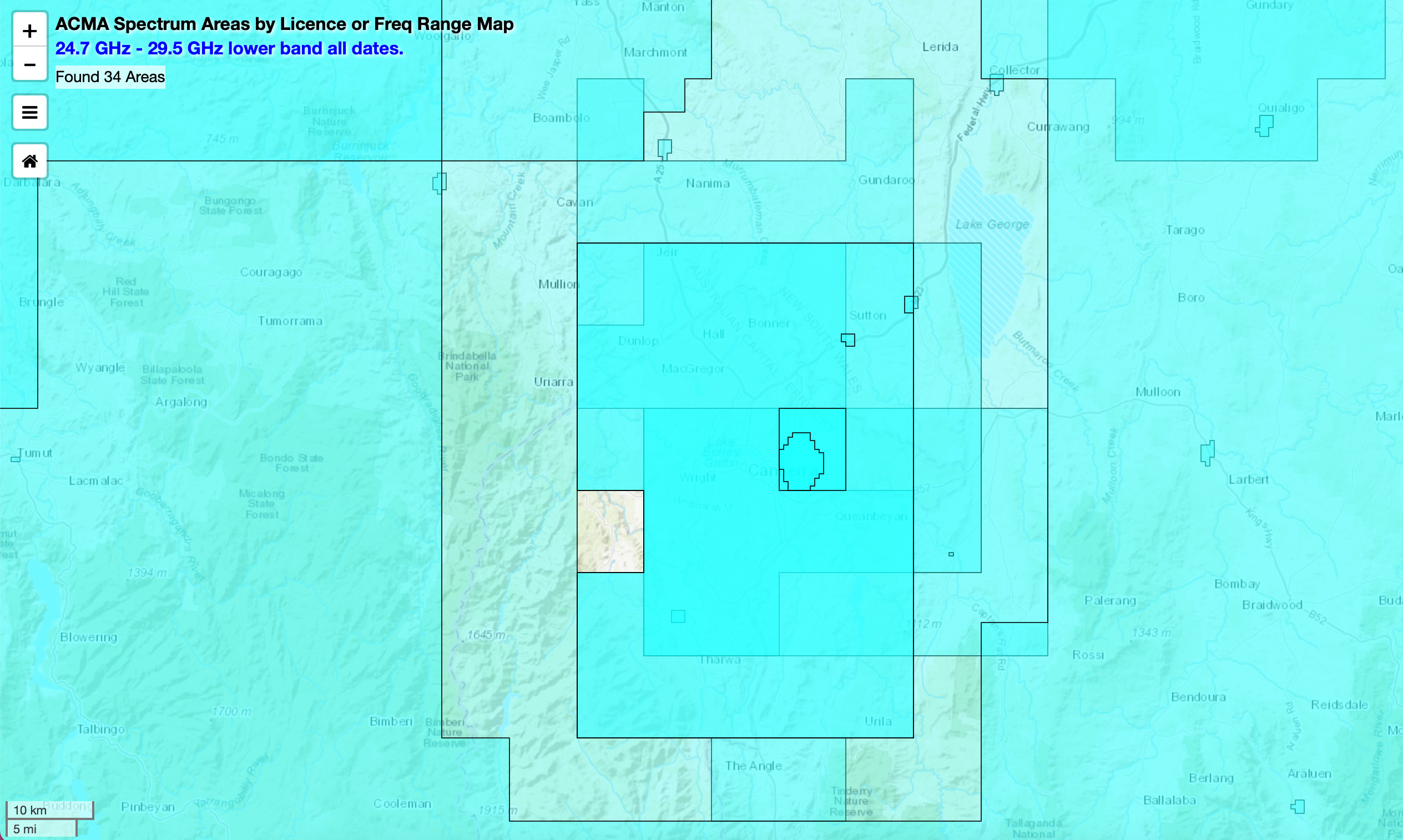

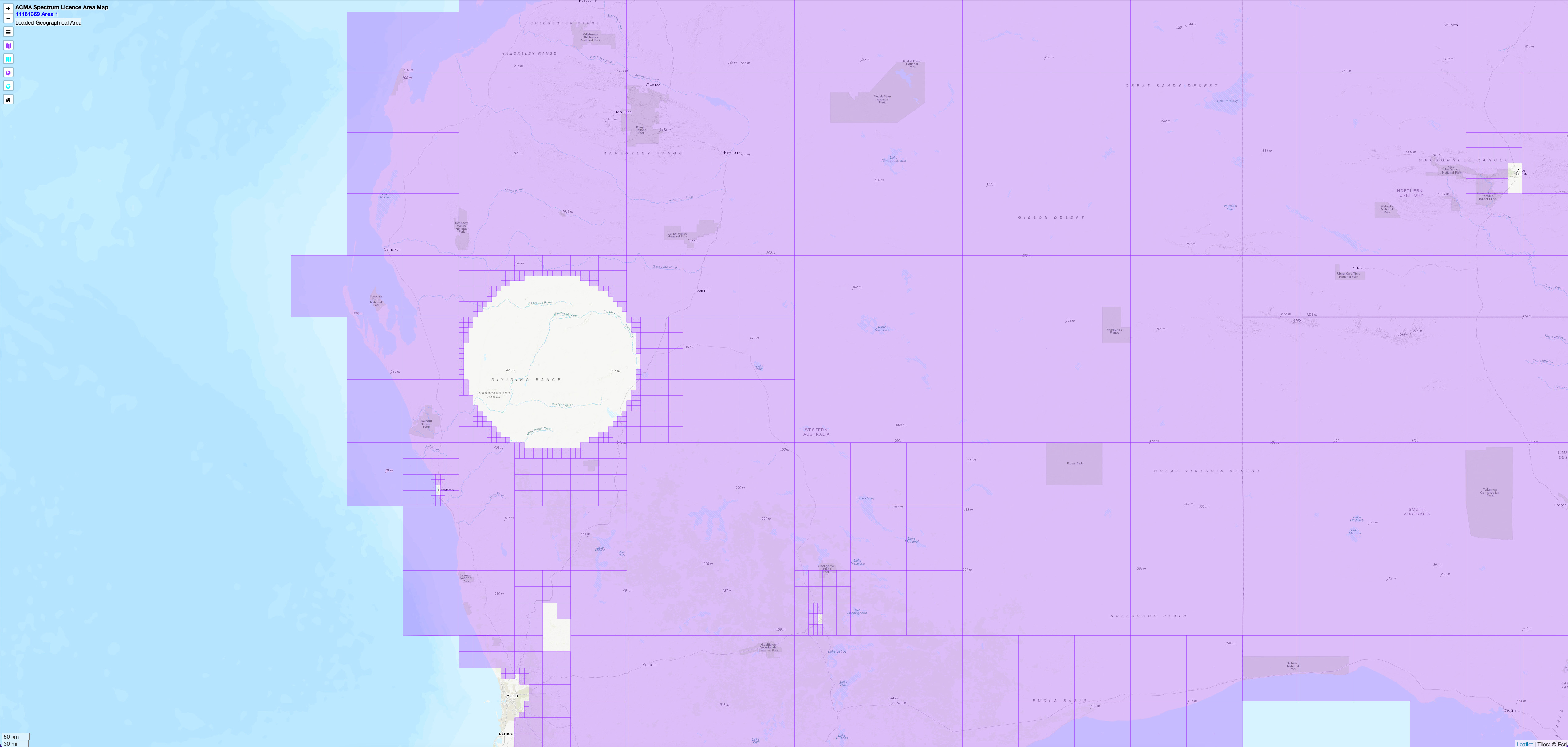

On the first night, we began by looking at the weather and rain radars for Siding Spring Observatory, Coonabarabran, in the Warrumbungles Dark Sky Park where the 2.3 metre telescope is located. This telescope is completely remotely operated (and about to become completely automated – not even operated by observers), and the entire building rotates as the telescope moves (!!).

Images: https://rsaa.anu.edu.au/observatories/telescopes/anu-23m-telescope

The radar showed a few rain cells around the area, one of them heading towards the telescope. The telescope must never get rained on, so observing is a no-go if there is any chance of rain within 10 km.
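The 10 km rule is simple enough to write down as code. The Python sketch below checks whether any reported rain cell lies within 10 km of the telescope, using the haversine great-circle distance. The telescope coordinates are approximate and the rain-cell positions are hypothetical; in practice the decision also weighs where cells are heading, as it did on this night, which this sketch ignores.

```python
import math

EARTH_RADIUS_KM = 6371.0
# Approximate (lat, lon) of the 2.3 m telescope at Siding Spring; illustrative only.
TELESCOPE = (-31.27, 149.07)
NO_GO_RADIUS_KM = 10.0

def haversine_km(p, q):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, p)
    lat2, lon2 = map(math.radians, q)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    a = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def safe_to_open(rain_cells, site=TELESCOPE, radius_km=NO_GO_RADIUS_KM):
    """True only if every rain cell is further than radius_km from the site."""
    return all(haversine_km(site, cell) > radius_km for cell in rain_cells)

# Hypothetical rain-cell positions taken from a radar image.
print(safe_to_open([(-31.57, 149.07)]))  # a cell roughly 33 km to the south
print(safe_to_open([(-31.27, 149.12)]))  # a cell roughly 5 km to the east
```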

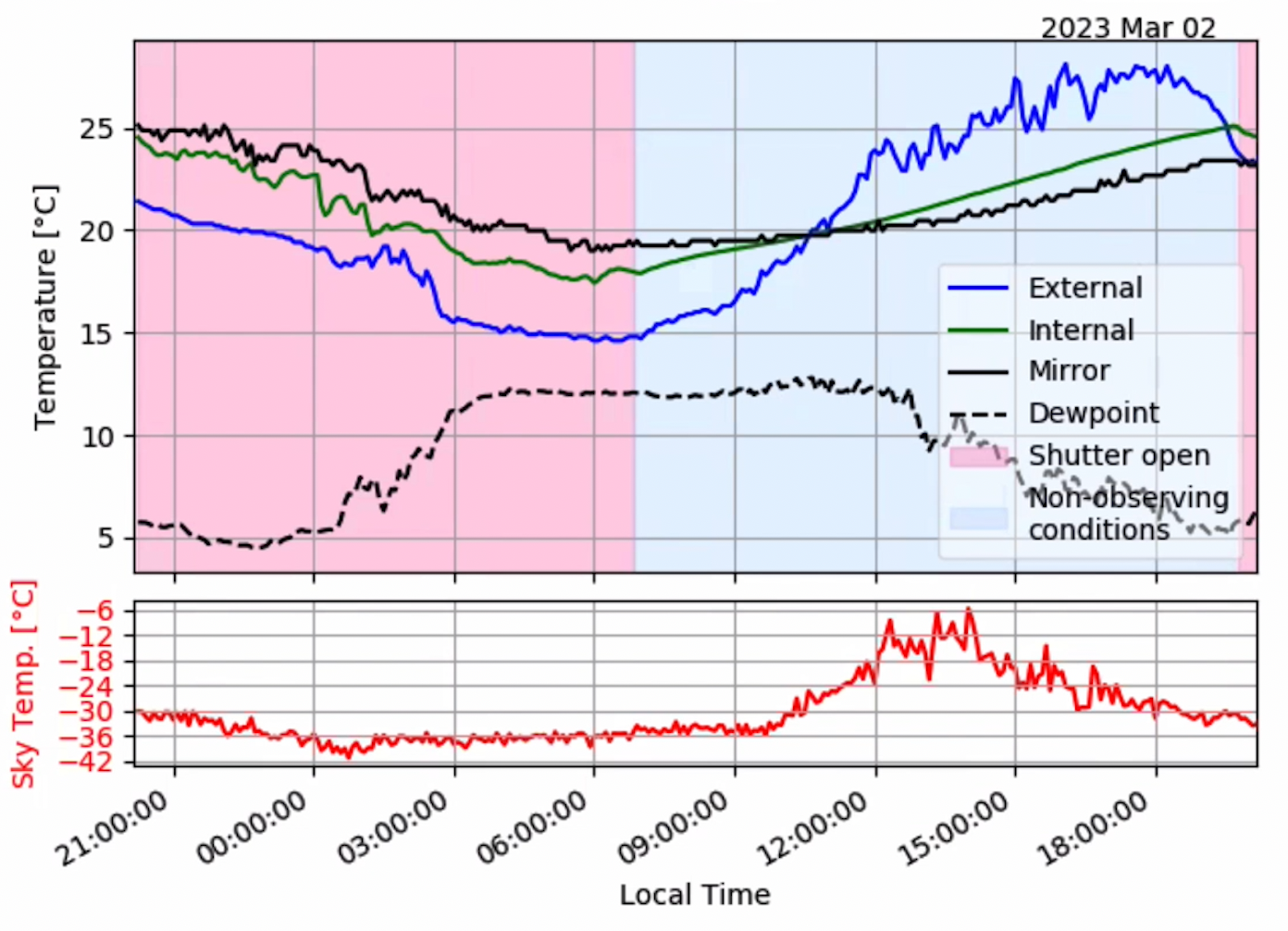

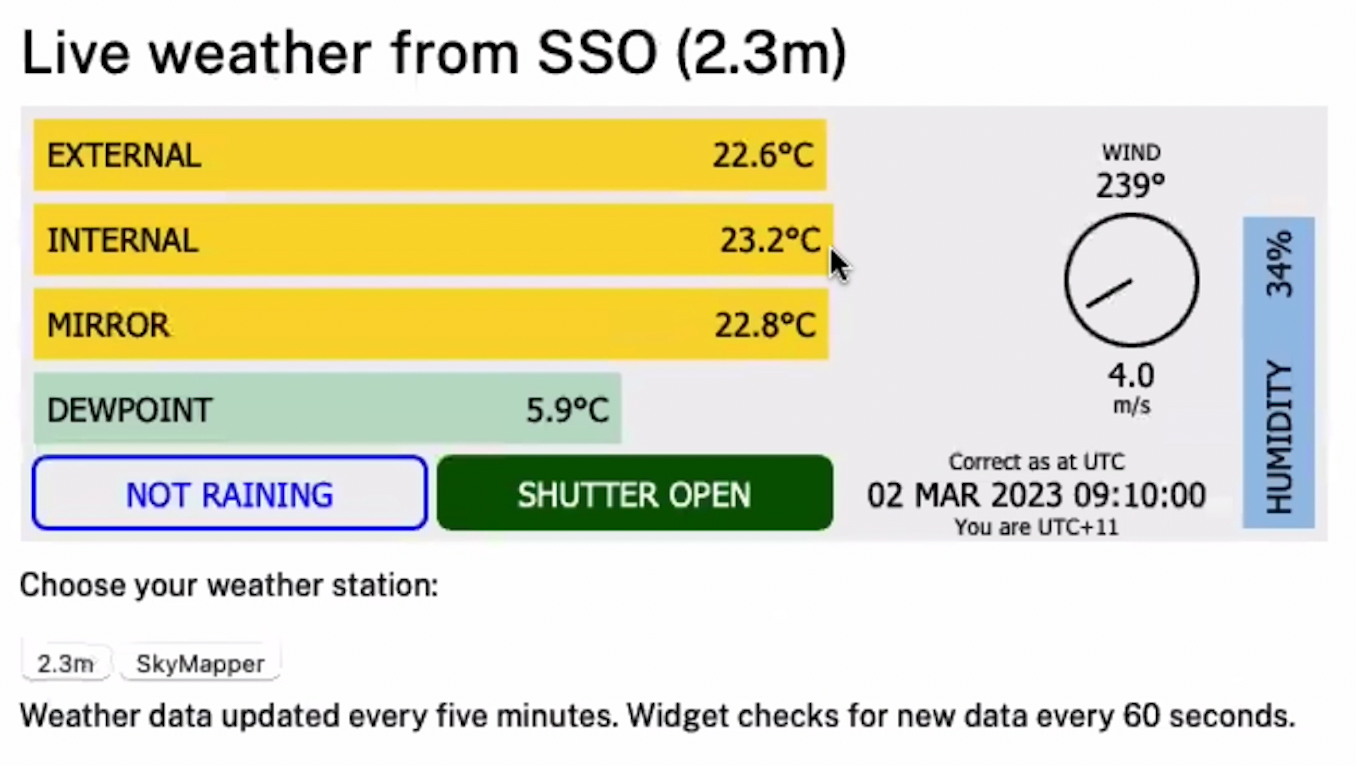

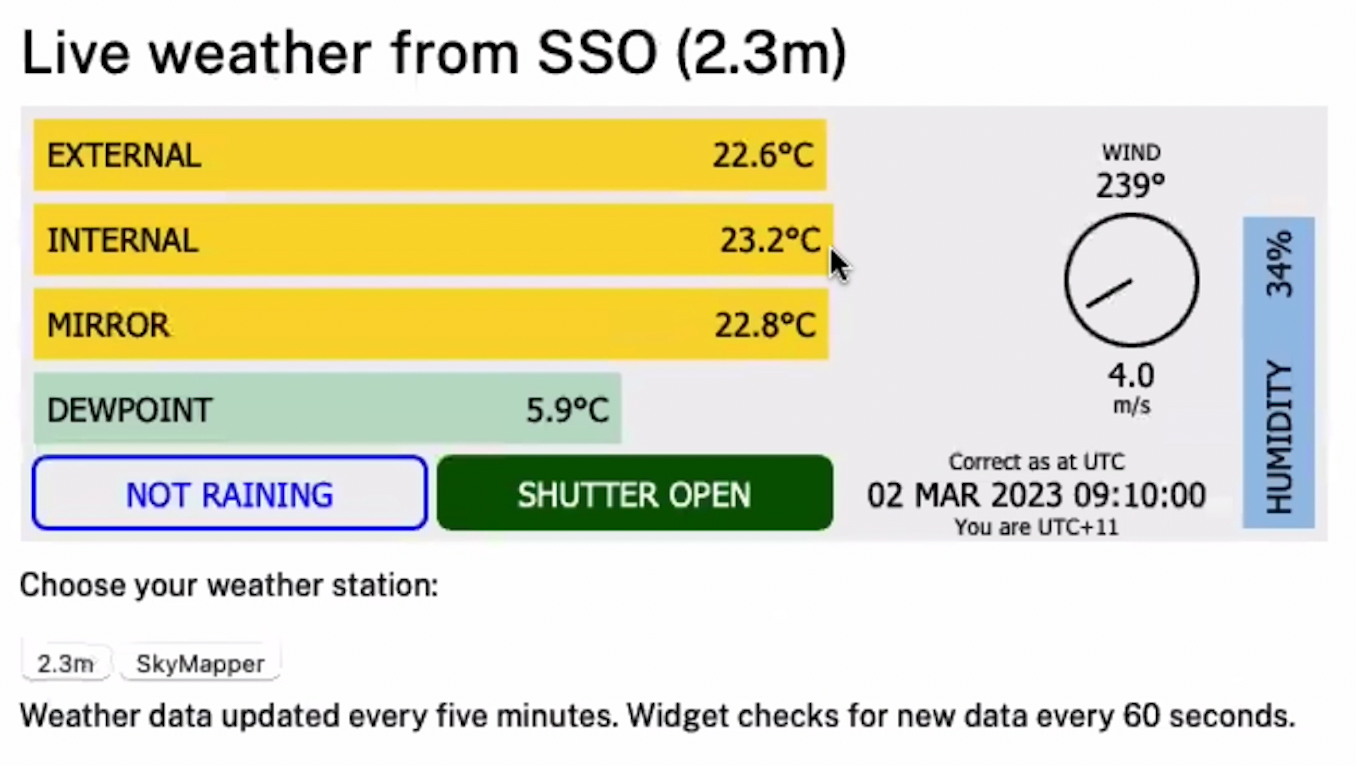

There are several sources of live meteorological data from SSO, and it is also possible to check whether the other telescopes on site are open and in use. After cross-checking this with the Bureau of Meteorology (BOM) rain radar, satellite imagery and wind sensors, Doris and Michael decided to hold off opening the telescope until later in the night.

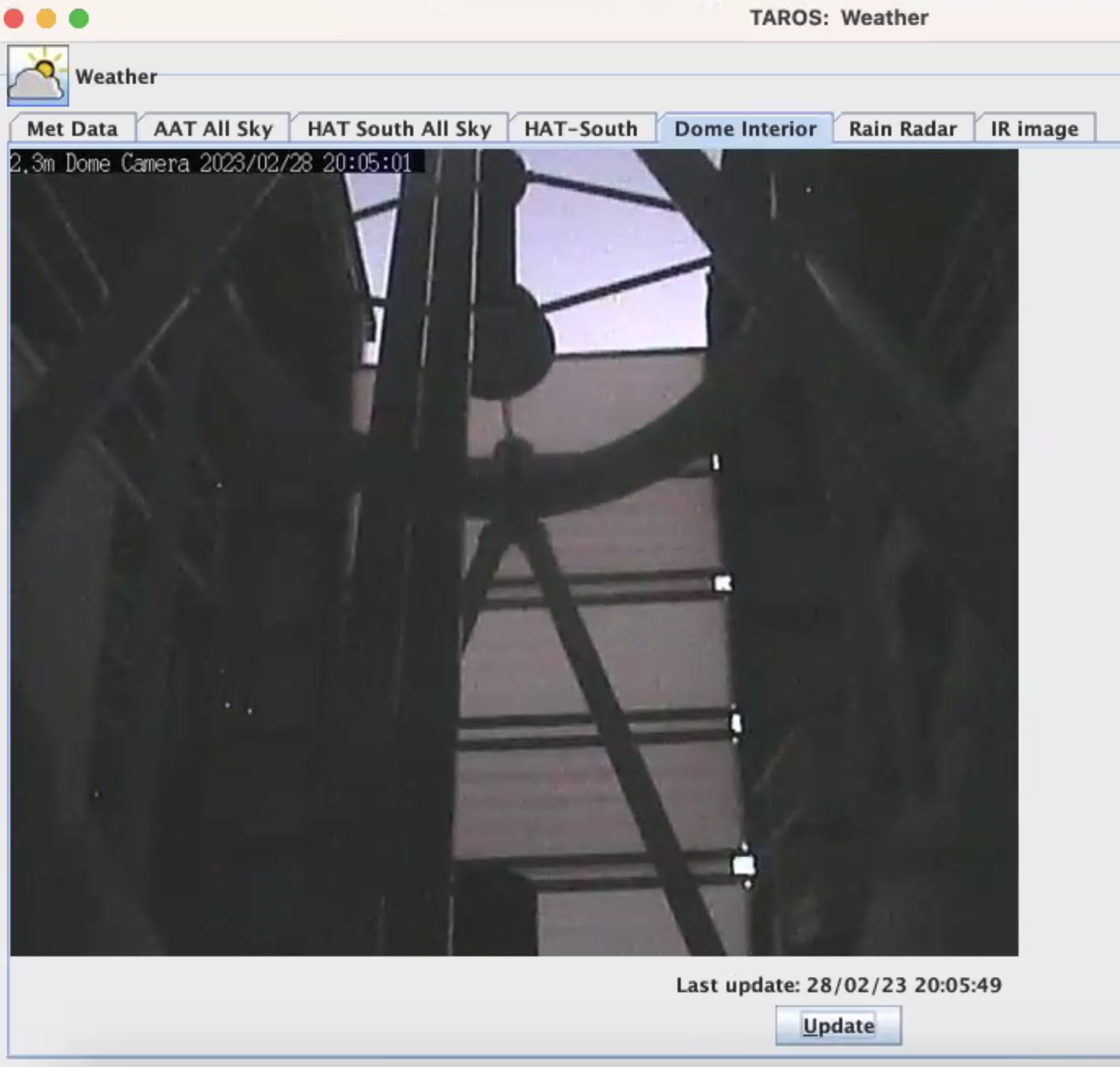

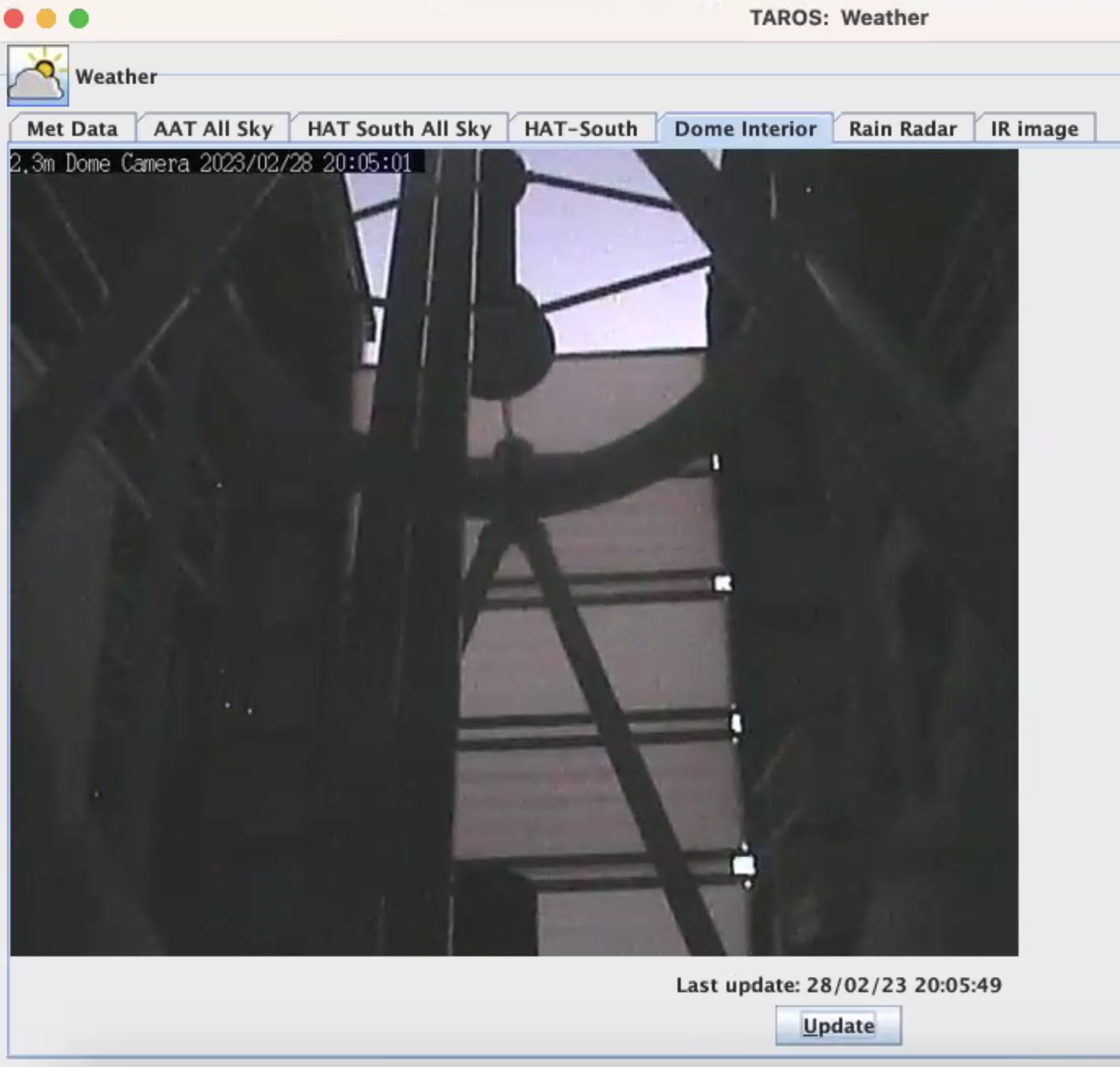

So the only view we got that night was of the inside of the telescope, but it was a useful introduction to the graphical user interfaces and a start to understanding the system, not just technically but also the human and environmental factors that need to align to make this research happen.

Night 2

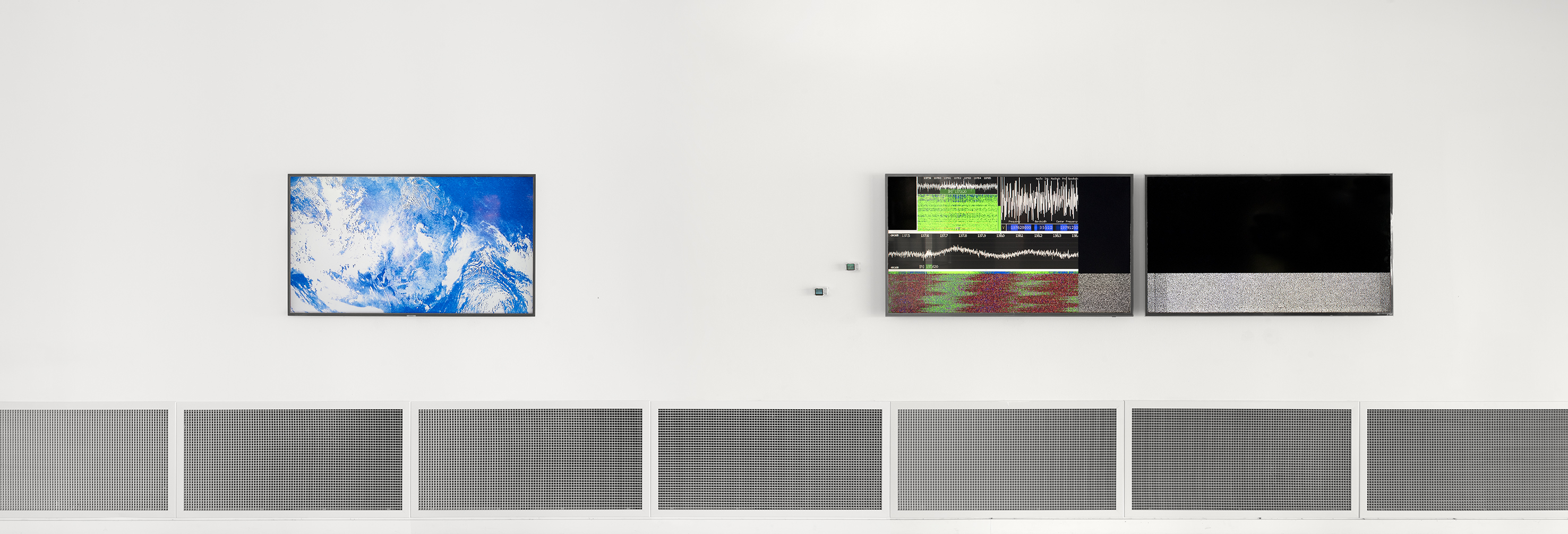

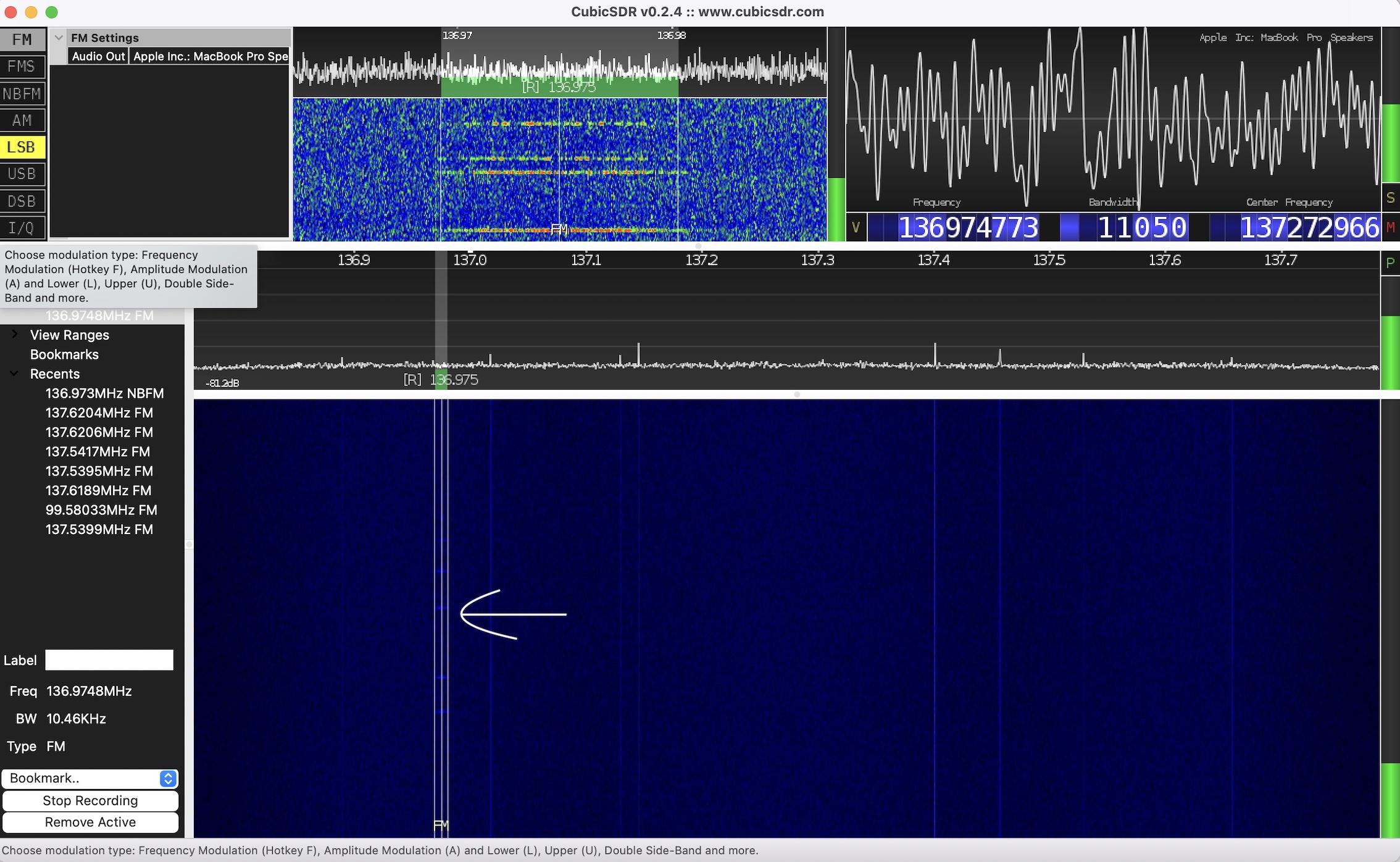

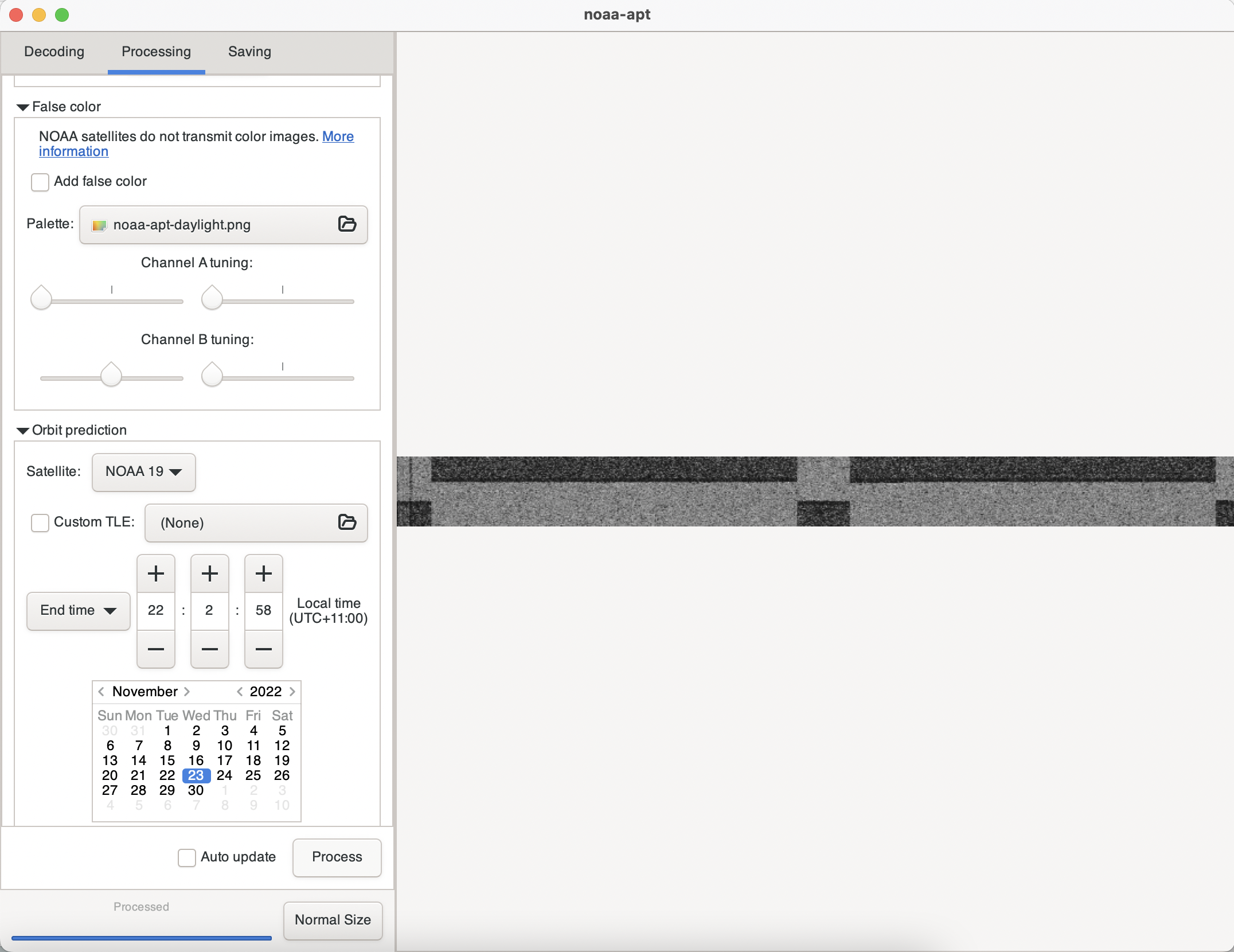

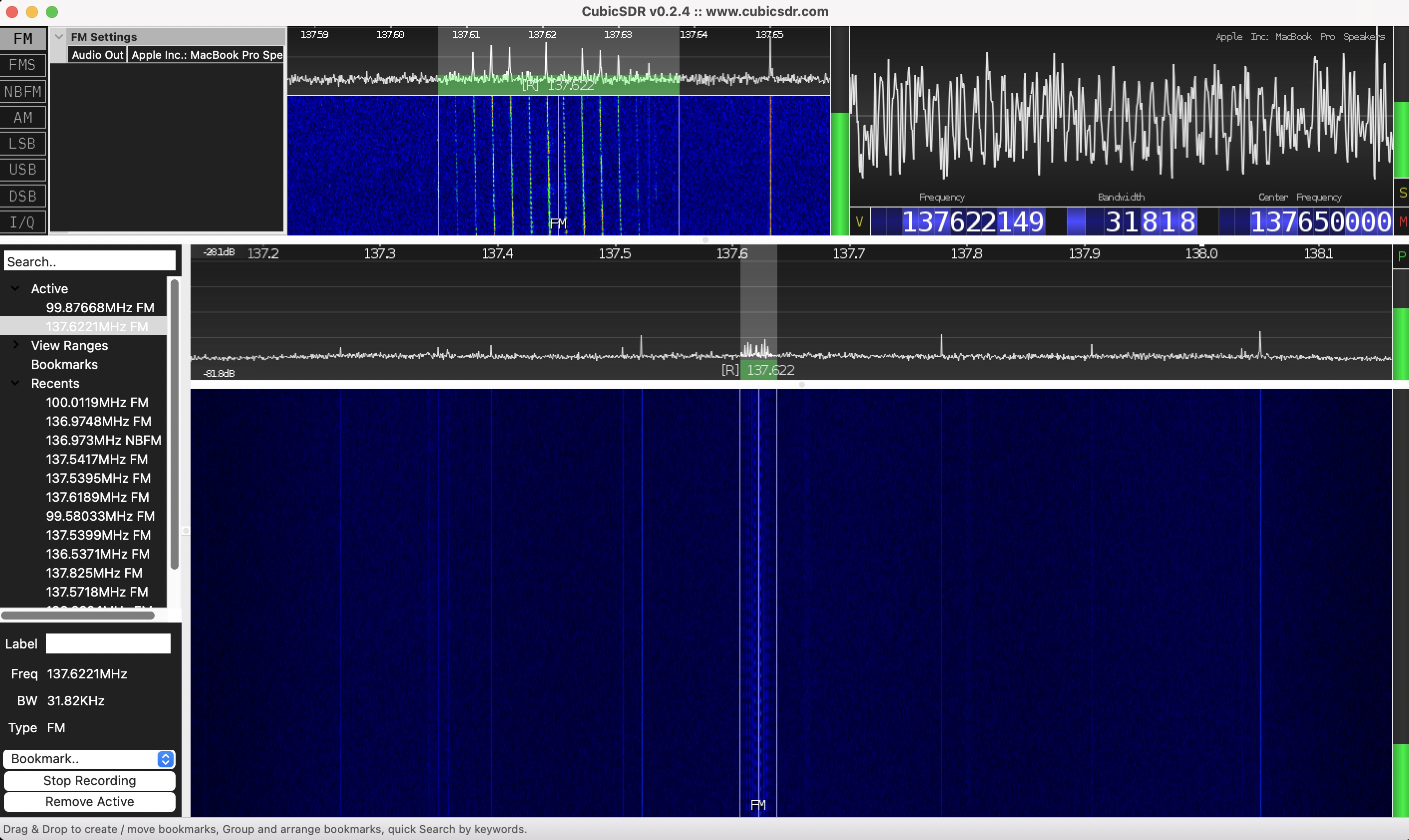

The second night was go! This was a clear night with no hesitation about the weather. After the software system is set up, the dome shutter is opened first.

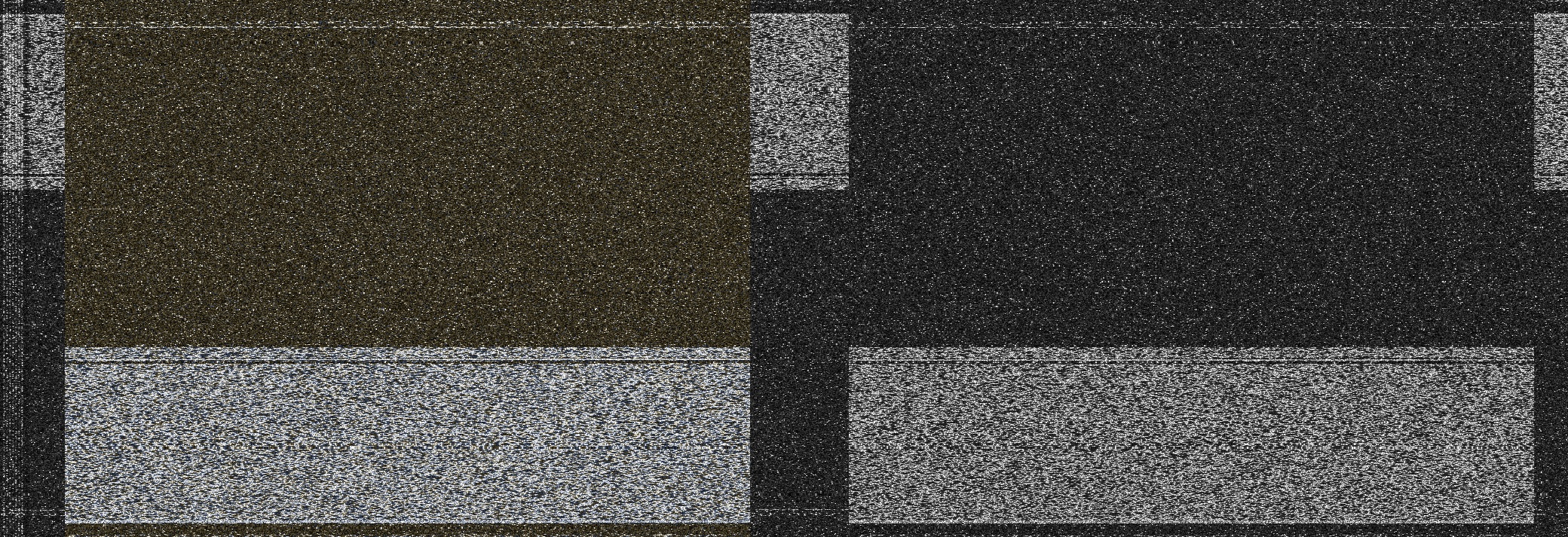

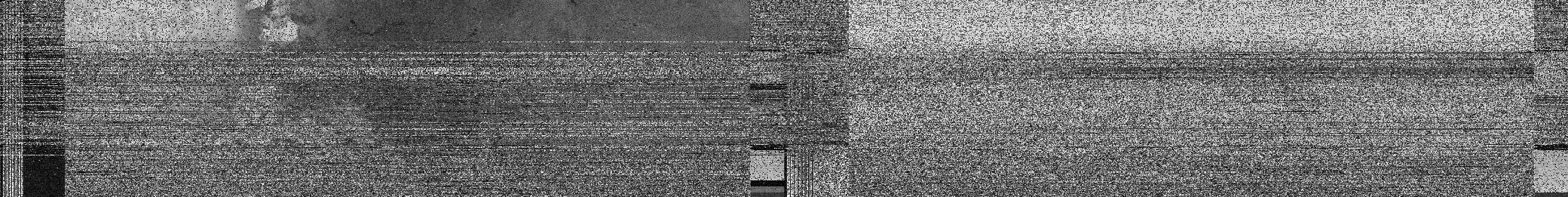

After the dome shutter, the mirror covers can be opened, and this process can be seen on screen as the sky and pupil images emerge.

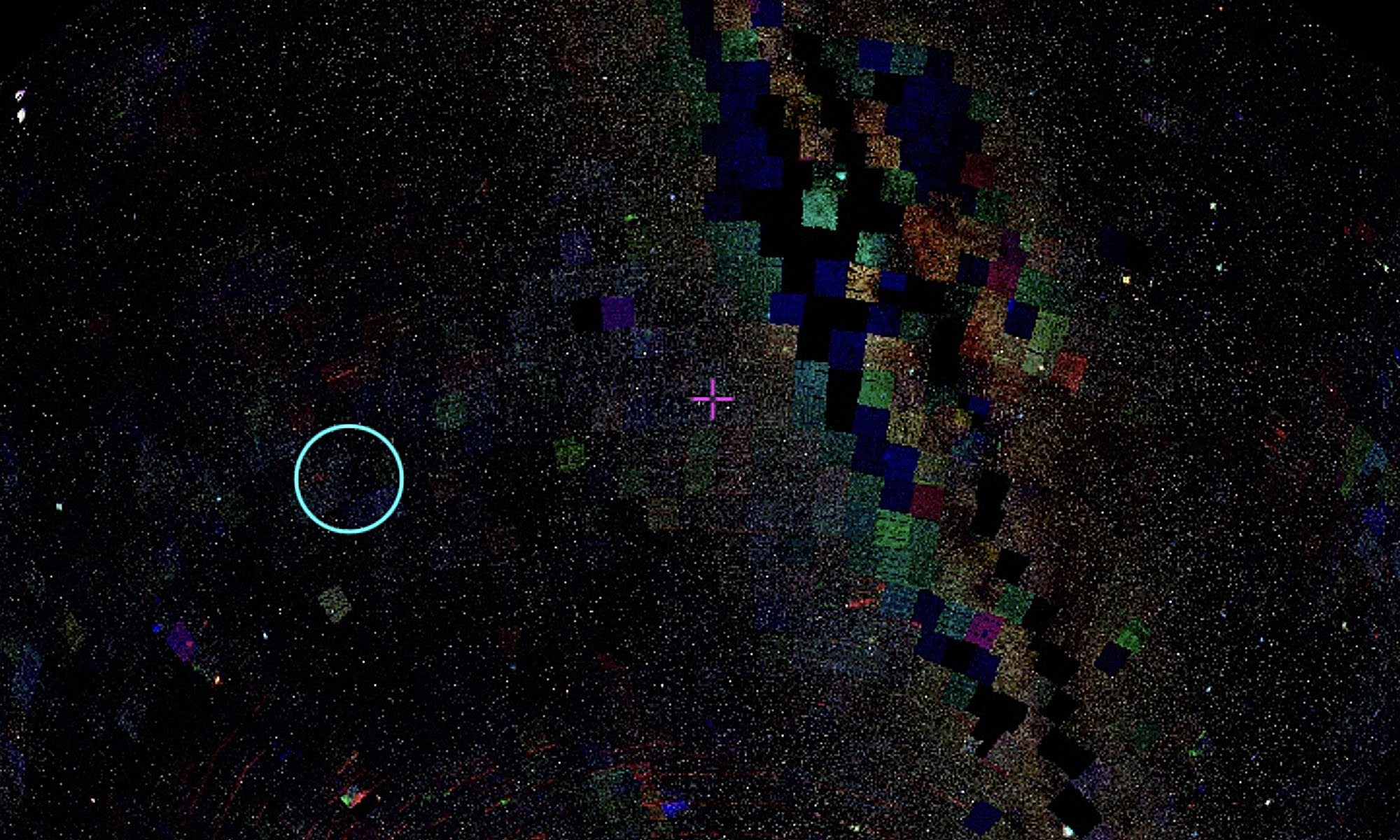

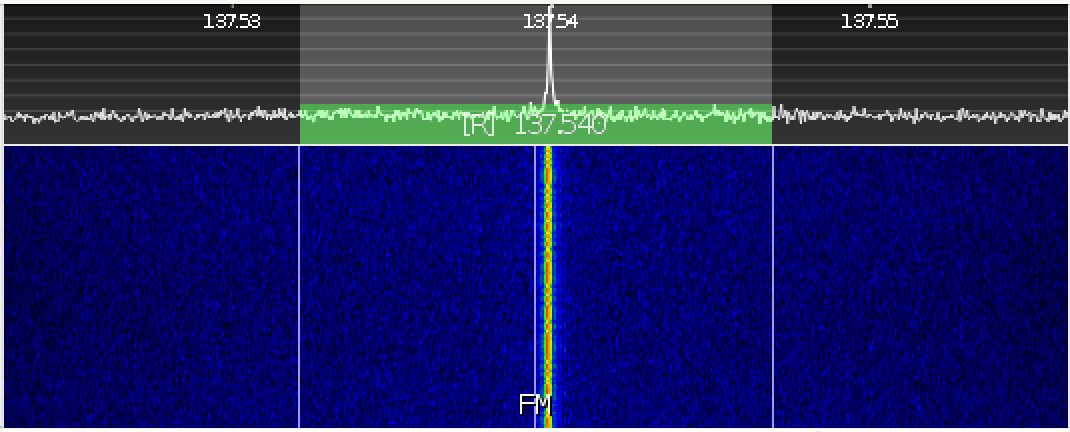

The pupil image is an image of the telescope’s aperture. It is partly obstructed by the telescope’s secondary mirror, which is held in place by ‘spider vanes’; in clear images these appear as cross hairs around the small circle in the centre.
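To see why the pupil image looks the way it does, here is a small NumPy sketch that draws an idealised pupil: a circular aperture with a central obstruction from the secondary mirror and a cross of spider vanes. The obstruction ratio (0.3 of the aperture diameter) and vane width are hypothetical values, not measurements of the 2.3 metre telescope.

```python
import numpy as np

def pupil_mask(n=256, obstruction_ratio=0.3, vane_width_px=3.0):
    """Idealised pupil: True where light passes through the aperture.

    obstruction_ratio: secondary-mirror diameter as a fraction of the primary
    (hypothetical). The vanes are modelled as a simple cross through the centre.
    """
    y, x = np.mgrid[:n, :n]
    c = (n - 1) / 2
    r = np.hypot(x - c, y - c) / (n / 2)  # radius normalised to the aperture edge
    aperture = (r <= 1.0) & (r >= obstruction_ratio)
    half = vane_width_px / 2
    vanes = (np.abs(x - c) <= half) | (np.abs(y - c) <= half)
    return aperture & ~vanes

mask = pupil_mask()
print(f"{mask.mean():.2f} of the square frame is open")
```

Displaying `mask` as an image (for example with matplotlib’s `imshow`) gives the bright ring crossed by dark cross hairs seen on screen.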

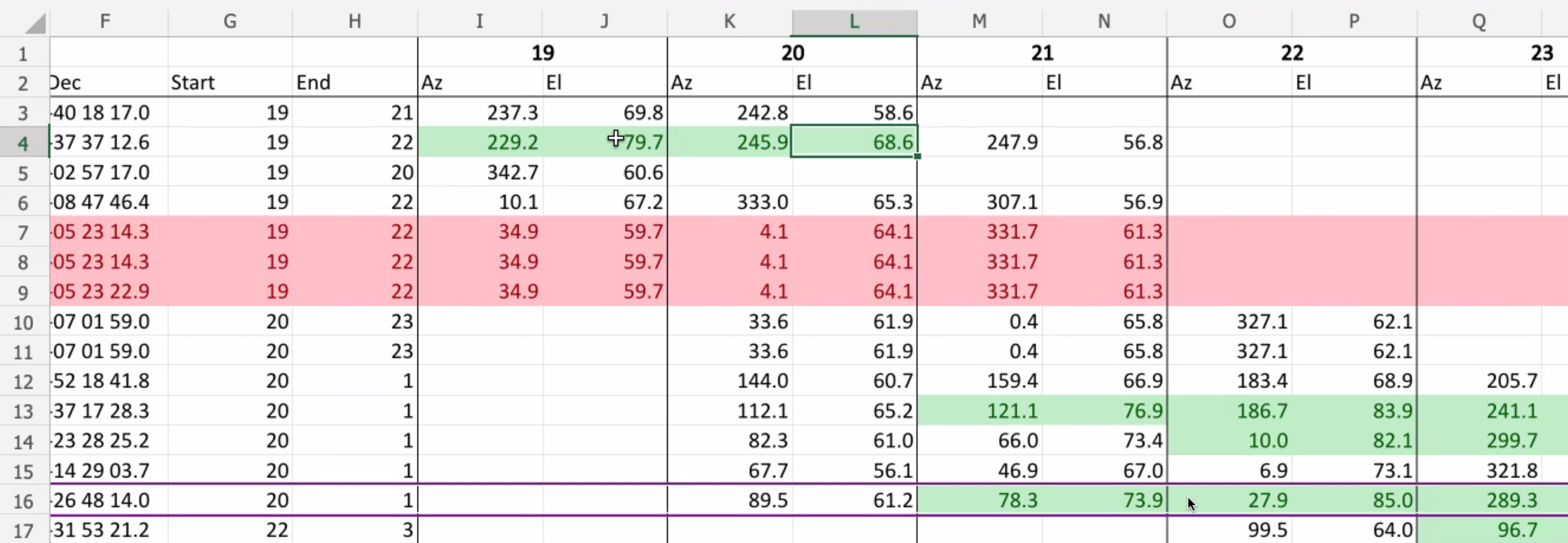

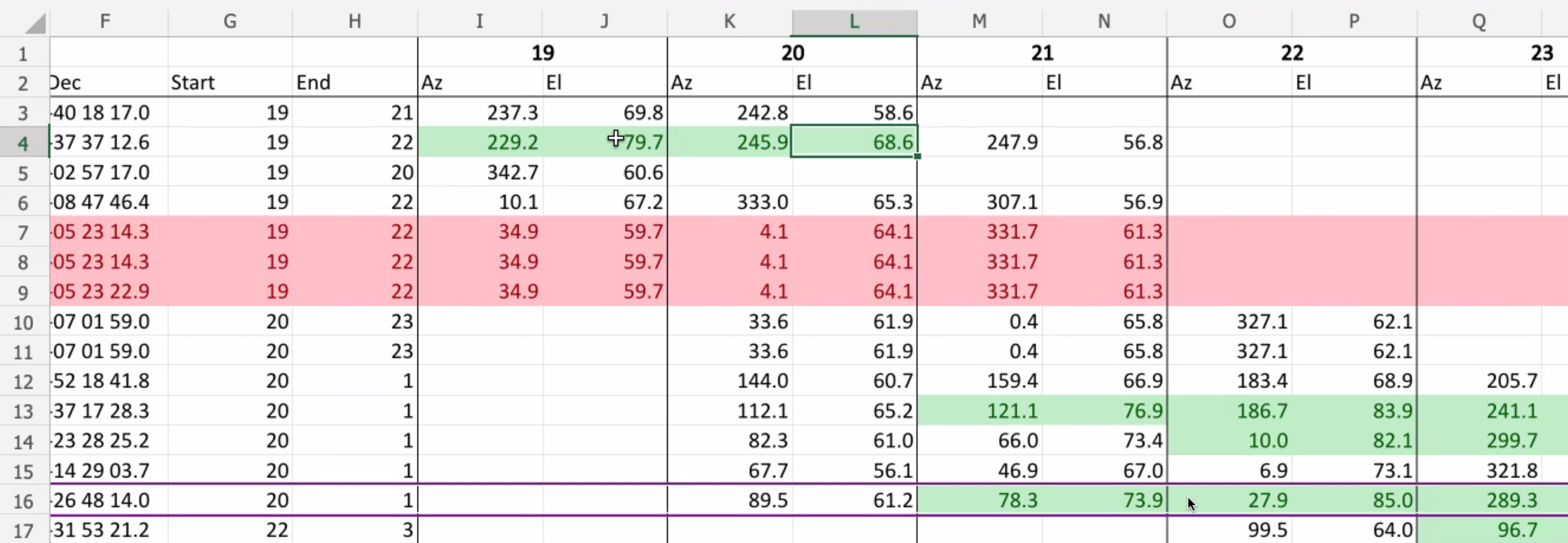

Michael and Doris had a list of double star coordinates to track. After tracking to the first double star, a different viewing window shows the acquisition image, which looks more like a normal view of stars. At first the stars were at an angle, so a position offset function was run to align them vertically.
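The tilt that the position offset corrected can be quantified from the acquisition image. The Python sketch below, using hypothetical pixel coordinates for the two star centroids, measures the angle between the line joining them and the image’s vertical axis. How the telescope software actually applies the correction isn’t described here, so this only illustrates the measurement, not the offset function itself.

```python
import math

def tilt_from_vertical(star1, star2):
    """Angle in degrees between the line through two star centroids and the
    image's vertical (y) axis. 0 means the pair is already vertical; the sign
    shows which way it leans. Coordinates are (x, y) in pixels."""
    dx = star2[0] - star1[0]
    dy = star2[1] - star1[1]
    angle = math.degrees(math.atan2(dx, dy))
    # A pair has no preferred "top" star, so fold the angle into (-90, 90].
    if angle > 90:
        angle -= 180
    elif angle <= -90:
        angle += 180
    return angle

# Hypothetical centroids read off an acquisition image.
print(round(tilt_from_vertical((120.0, 80.0), (150.0, 132.0)), 1))
```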

Positioning offset (sped up)

The stars are recorded in 10-minute intervals to collect data that captures changes throughout the night. Over time, this data will allow turbulence to be compared across the year in response to seasonal change, as well as across El Niño and La Niña cycles.

After recording the first double star for 10 minutes, the telescope was set to move to the next set of coordinates. This takes up to a couple of minutes, and after that time we noticed it had stopped moving and had encountered an error.
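As a back-of-the-envelope sketch of how these 10-minute recordings fill a night, the Python below cycles through a target list, assuming each slew takes the full two minutes and that the telescope is already on the first target at 7pm. The date and target names are hypothetical, and a real schedule would also depend on when each double star is high enough in the sky.

```python
from datetime import datetime, timedelta

RECORD = timedelta(minutes=10)  # recording length per double star (from the text)
SLEW = timedelta(minutes=2)     # assumed worst-case slew ("up to a couple of minutes")

def plan_night(start, end, targets, record=RECORD, slew=SLEW):
    """Return (target, record_start, record_end) tuples, cycling through targets
    until no further full recording fits before `end`."""
    blocks = []
    t = start
    i = 0
    while t + record <= end:
        blocks.append((targets[i % len(targets)], t, t + record))
        t += record + slew  # record, then slew to the next target
        i += 1
    return blocks

# Hypothetical night and target names, for illustration only.
night = plan_night(datetime(2024, 1, 1, 19, 0), datetime(2024, 1, 2, 6, 0),
                   ["double_star_A", "double_star_B", "double_star_C"])
print(len(night), night[-1][1].strftime("%H:%M"))
```

With a 7pm to 6am window this gives 55 recording blocks; in practice setup time, weather holds and errors like the one on this night reduce that number.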

As with any technological system, there was a series of steps to try in a specific order, as well as the good old ‘turn it off and on again’ trick. We talked about other problems that have occurred, and about the technical staff who can be called to drive up to the telescope and shut the system down manually. Luckily it didn’t come to that this time!

Night 3

The third night was clearer again, with the system set up and running quickly and smoothly.

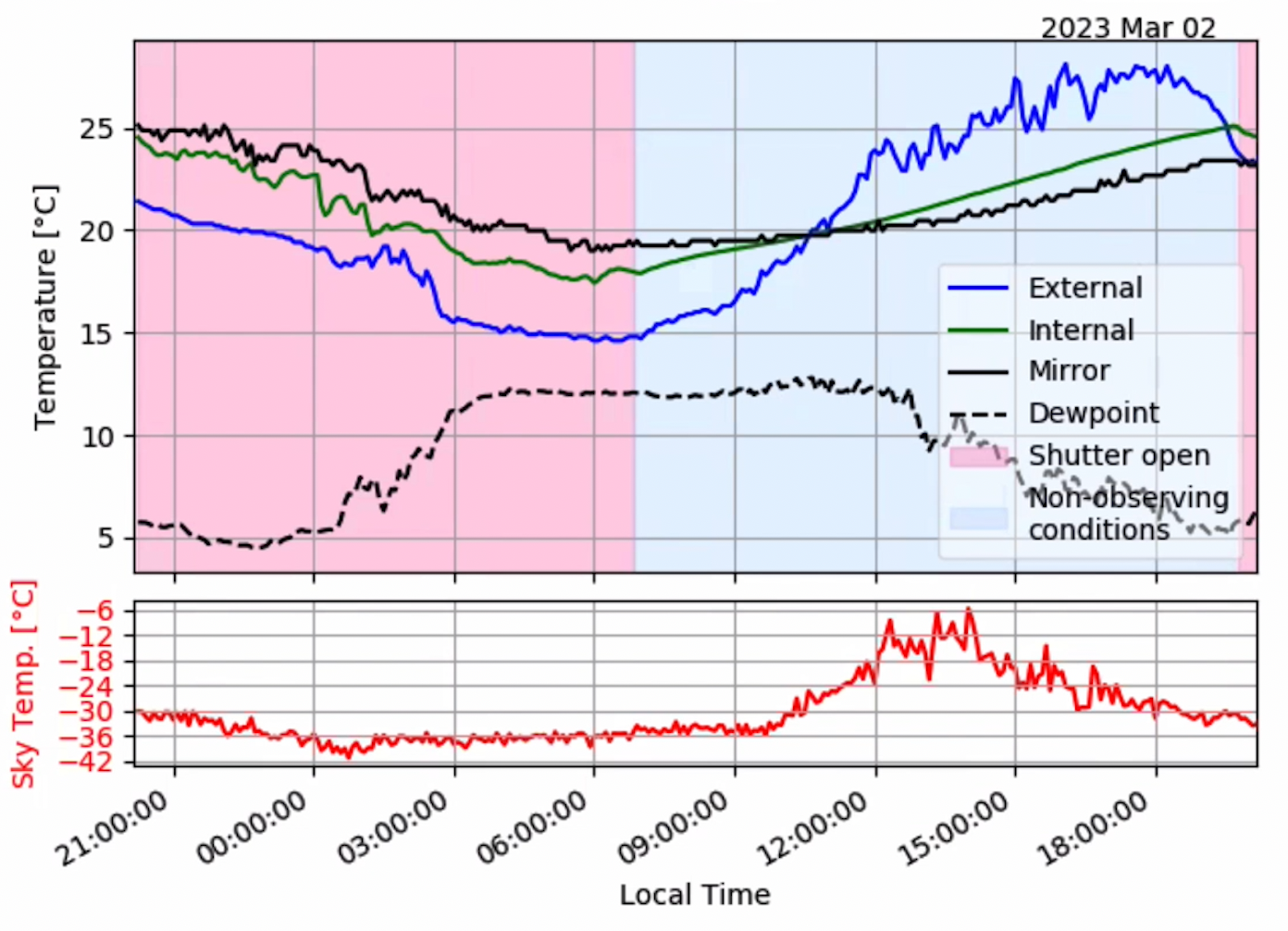

We went through the same process as the previous evening, and could see from the data the temperature changes and telescope use over the previous 24 hours. The pink section shows when the telescope shutter was open and in use, marking the previous night’s work. We could also see that the temperature differences between outside and inside the telescope, and at the mirror surface, were relatively low, which indicates good conditions.
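The reasoning behind reading the temperature plot can be sketched in Python: if the air inside the telescope or the mirror is much warmer or cooler than the outside air, the telescope generates its own local turbulence, so small temperature differences while the shutter is open suggest good conditions. The data structure and the 1 °C threshold are hypothetical, chosen only to illustrate the check.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Sample:
    shutter_open: bool
    t_outside: float  # °C, outside air
    t_dome: float     # °C, air inside the telescope enclosure
    t_mirror: float   # °C, mirror surface

def max_gaps_while_open(samples: List[Sample]) -> Optional[Tuple[float, float]]:
    """Largest |dome - outside| and |mirror - outside| while the shutter was open."""
    open_samples = [s for s in samples if s.shutter_open]
    if not open_samples:
        return None
    dome_gap = max(abs(s.t_dome - s.t_outside) for s in open_samples)
    mirror_gap = max(abs(s.t_mirror - s.t_outside) for s in open_samples)
    return dome_gap, mirror_gap

def conditions_look_good(samples: List[Sample], max_gap_c: float = 1.0) -> bool:
    """Hypothetical rule: good if both gaps stay within max_gap_c degrees."""
    gaps = max_gaps_while_open(samples)
    return gaps is not None and max(gaps) <= max_gap_c

log = [
    Sample(False, 14.0, 19.0, 18.0),  # shutter closed: ignored
    Sample(True, 8.0, 8.5, 8.8),
    Sample(True, 7.5, 7.9, 8.2),
]
print(max_gaps_while_open(log), conditions_look_good(log))
```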

This gave a nice clear view of both the pupil and acquisition images.

Tracking to a double star:

It was incredibly valuable to learn about this intricate and specialised research and to experience the process of remote observing. It has given me a new appreciation of the depth of knowledge involved at every step, and an insight into the characteristics and aesthetics of the network of humans and machines through which this practice operates.

A huge thank you to Doris and Michael for sharing their time, process and knowledge.

The research described in this blog post is supported by the Commonwealth of Australia as represented by the Defence Science and Technology Group of the Department of Defence.

Broken Hill, NSW.