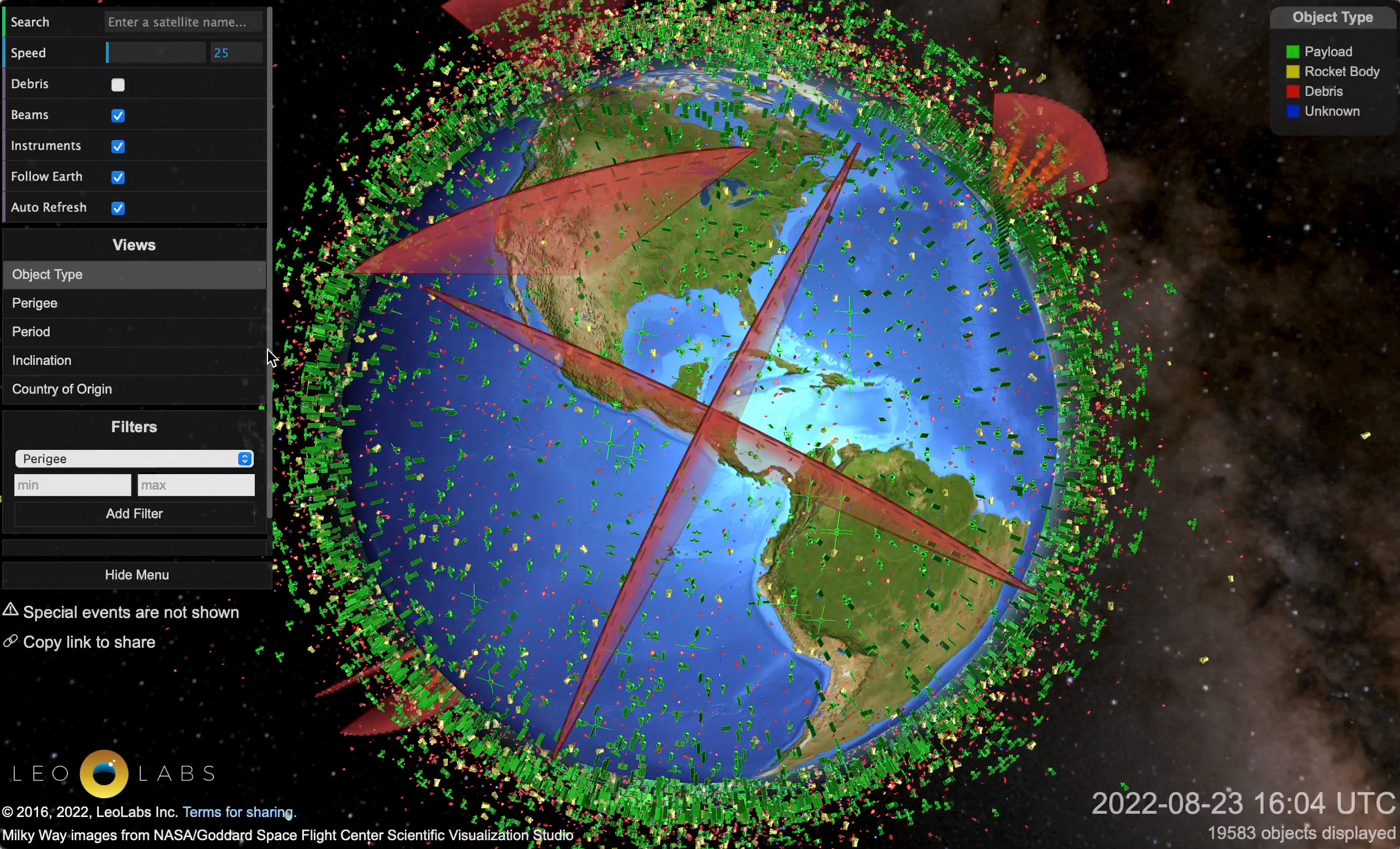

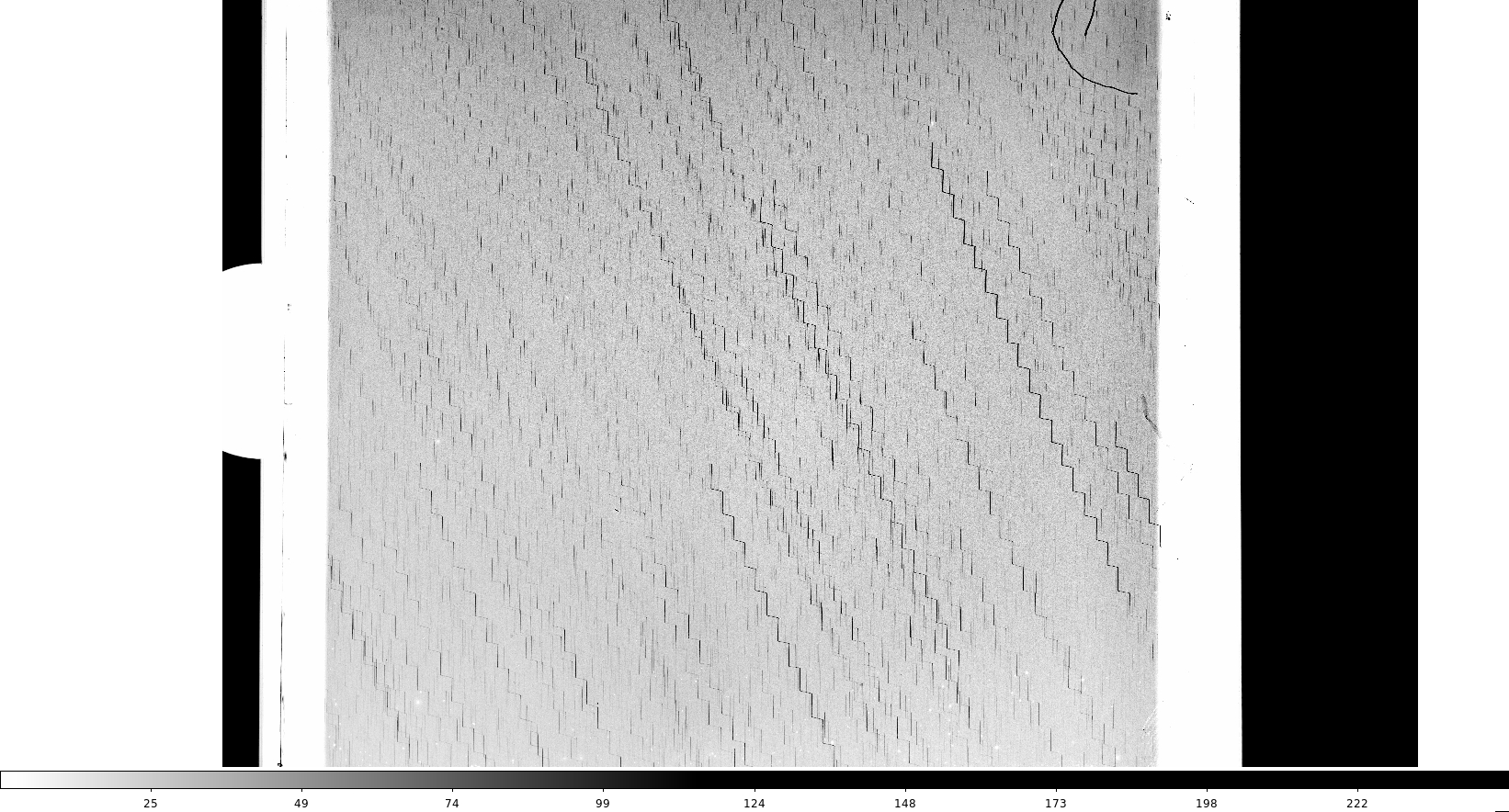

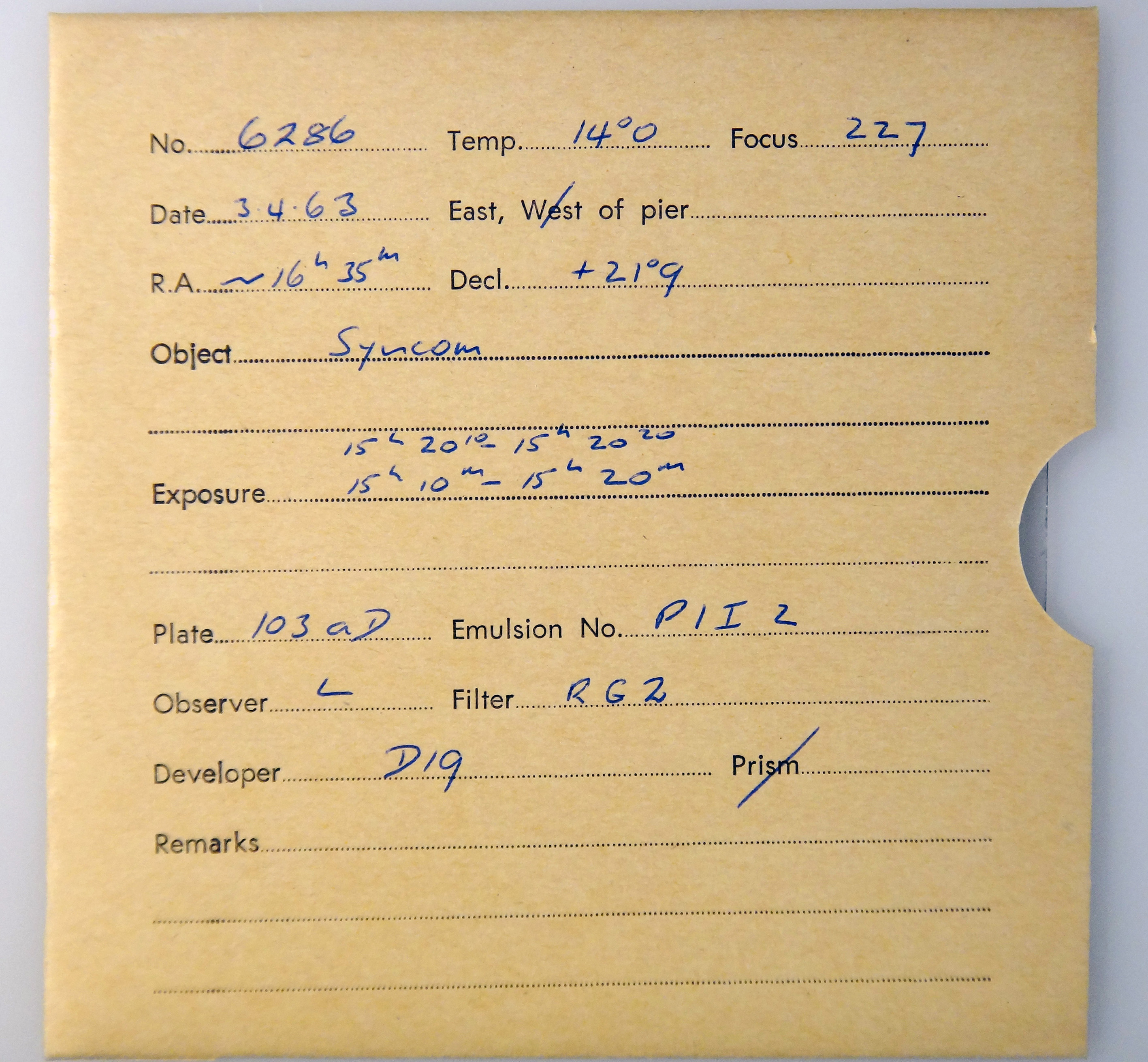

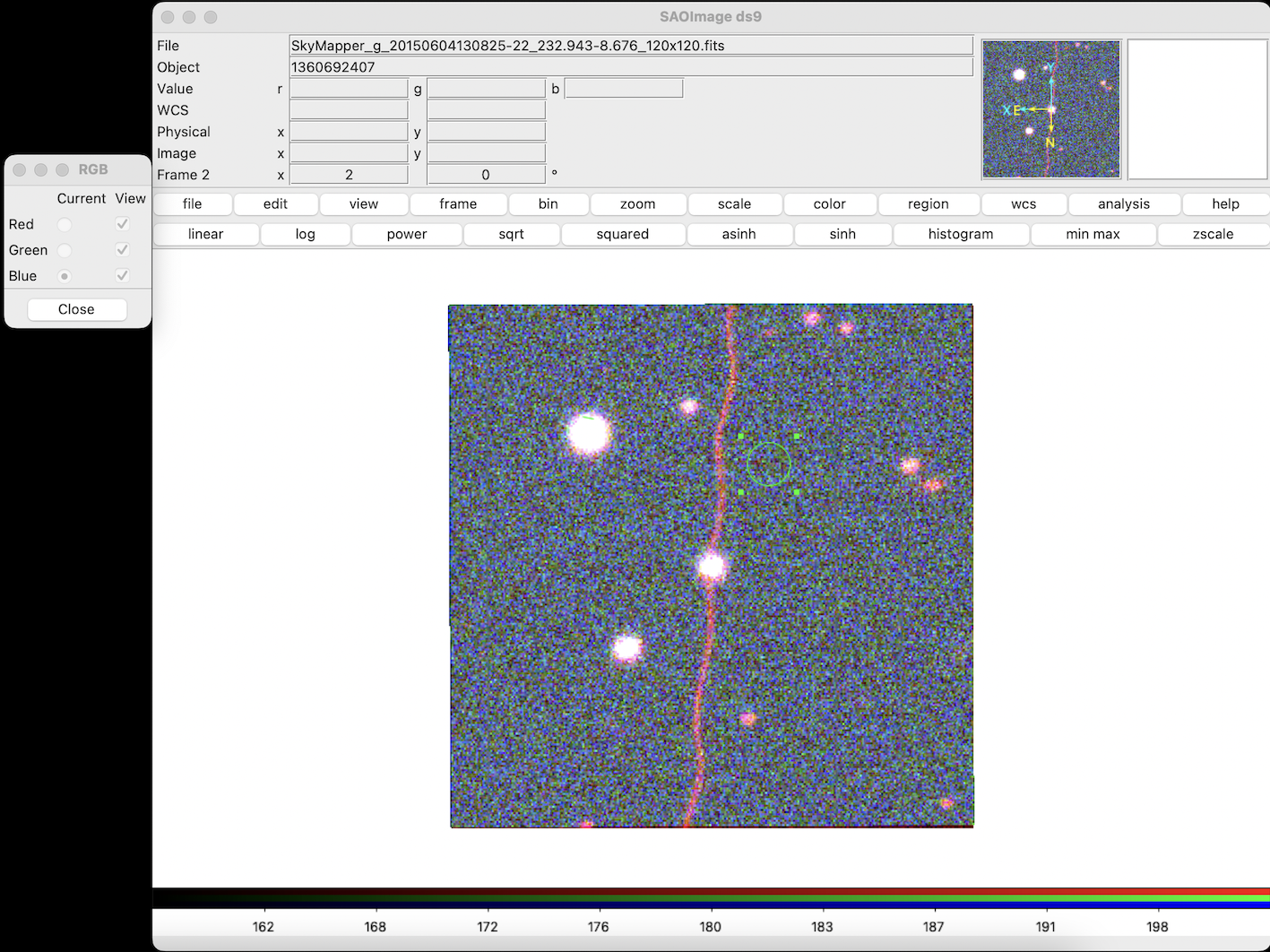

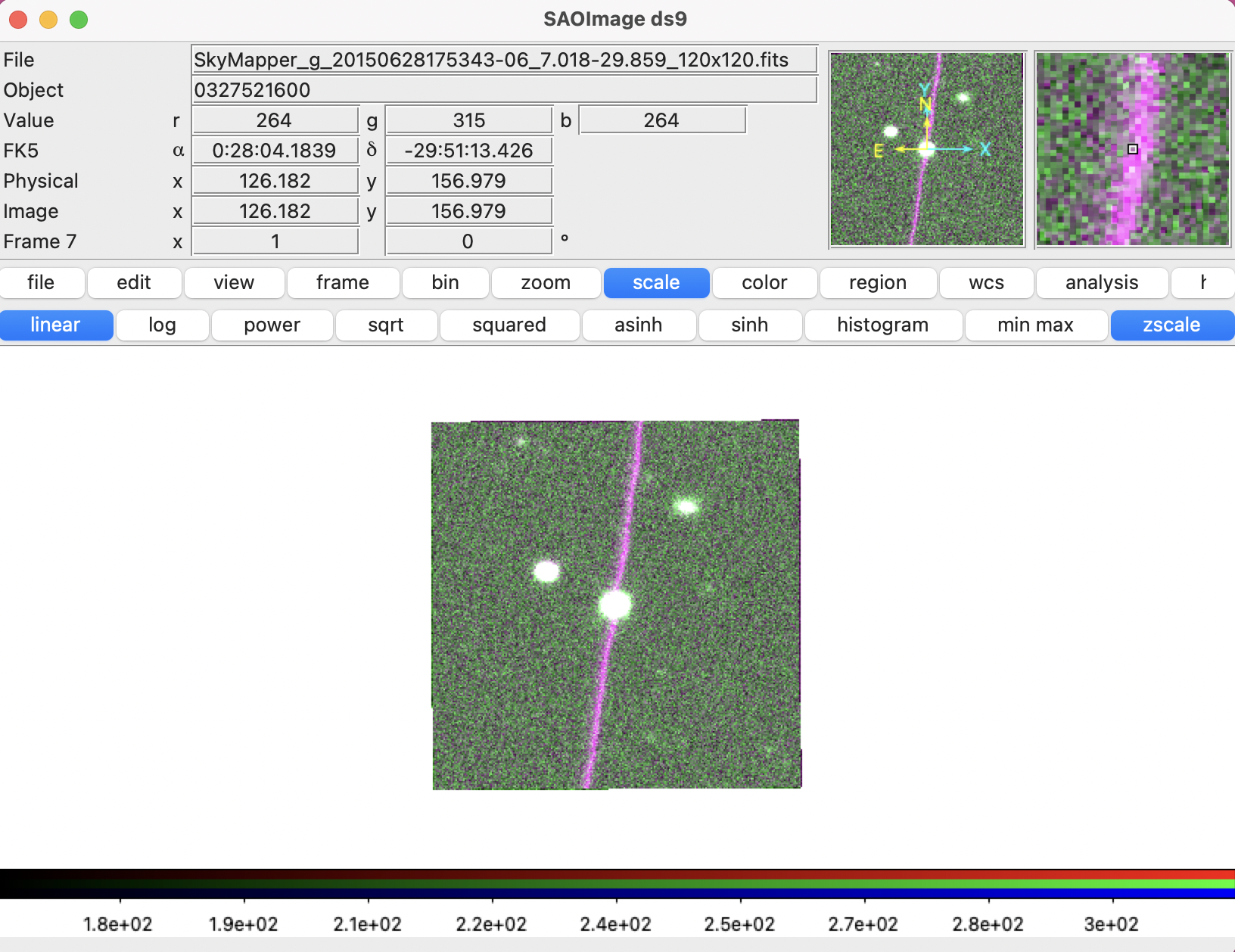

This past week I began using the astronomy software SAOImage DS9 (ds9) to look at satellite-contaminated images from SkyMapper, in FITS format.

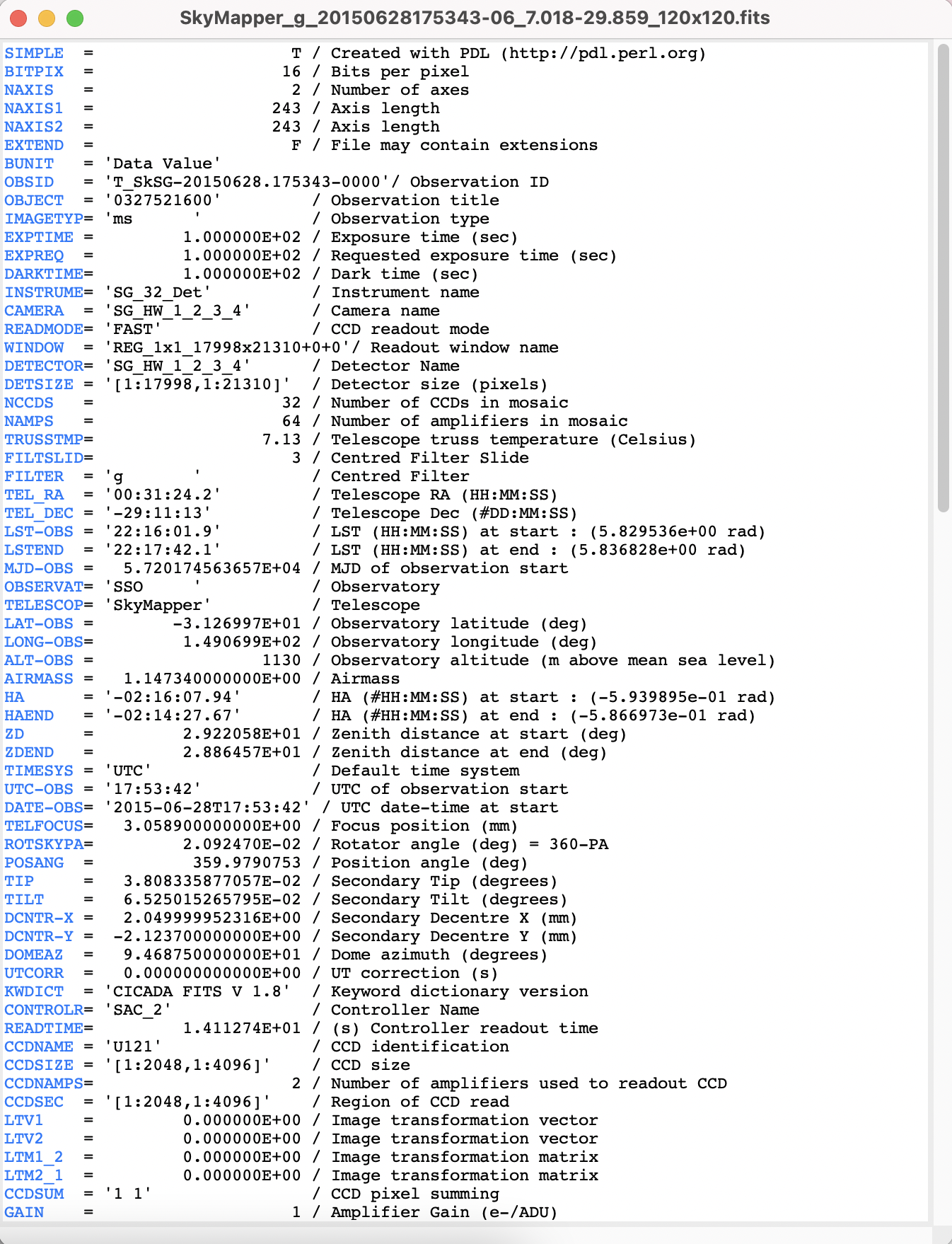

Unlike typical image files, FITS (Flexible Image Transport System) is an archival data format that can store spectra, lists, tables, or arrays instead of, or as well as, ‘images’. SAOImage DS9 is an application designed for working with image data in this format. A FITS file comes with a ‘Header’ that contains stacks of metadata; here’s an excerpt:
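Separately from that excerpt, and purely as an illustration of how a header is laid out: a FITS header is a run of fixed-width, 80-character "cards", each holding a keyword, an optional value, and an optional comment, finishing with an `END` card. The sketch below builds a tiny made-up header and parses it in plain Python. The values and the `FILTER` keyword are hypothetical, not taken from a real SkyMapper file, and the parser skips edge cases such as escaped quotes inside strings. In practice a library such as astropy would do this for you.

```python
def make_card(keyword, value, comment=""):
    """Build one 80-character header card (illustrative helper, not a full FITS writer)."""
    # Strings are left-aligned from column 11, other values right-aligned in a 20-character field.
    field = value.ljust(20) if value.startswith("'") else value.rjust(20)
    text = f"{keyword:<8}= {field}"
    if comment:
        text += f" / {comment}"
    return text.ljust(80)[:80]


def parse_cards(header_text):
    """Split a header string into {keyword: value} using the 80-character card layout."""
    header = {}
    for start in range(0, len(header_text), 80):
        card = header_text[start:start + 80]
        keyword = card[:8].strip()
        if keyword == "END":
            break  # the END card closes the header
        if card[8:10] != "= ":
            continue  # COMMENT, HISTORY, or blank cards carry no value
        rest = card[10:].lstrip()
        if rest.startswith("'"):
            # String value: keep the text between the quotes, dropping padding
            value = rest[1:].split("'", 1)[0].rstrip()
        else:
            # Anything else: keep what comes before the comment separator
            value = rest.split("/", 1)[0].strip()
        header[keyword] = value
    return header


# Made-up example values, for illustration only
sample_header = "".join([
    make_card("SIMPLE", "T", "conforms to FITS standard"),
    make_card("BITPIX", "-32", "bits per pixel"),
    make_card("NAXIS", "2", "number of image axes"),
    make_card("FILTER", "'r       '", "hypothetical band keyword"),
    "END".ljust(80),
])

header = parse_cards(sample_header)
print(header)
```

Every value comes back as a string here; converting `BITPIX` to an integer, for example, is left out to keep the sketch short.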

Since astronomical images measure the intensity of light rather than a defined colour, they are grayscale and appear differently depending on the filter band used (and the frequency captured). Ds9 can open these files and combine them into frames that correlate and/or separate this information.

I have been trying a very basic version of this: combining FITS files of the same sky object, captured in different bands, into a colour image with RGB channels. This involves layering the red, green, and blue channels together. Sounds simple enough… (twist! It’s not)
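To make the layering idea concrete, here is a minimal sketch in plain Python. It assumes each band is already loaded as a small 2D list of intensities with the same shape, rescales each one linearly to 0..255, and zips them into (R, G, B) pixels. The function names are made up for illustration, and this is not how ds9 works internally: ds9 has its own scaling options, and real images would normally be handled with NumPy arrays.

```python
def scale_to_8bit(band):
    """Linearly rescale a 2D list of intensities to integers in 0..255."""
    flat = [value for row in band for value in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # a completely flat image would otherwise divide by zero
    return [[round(255 * (value - lo) / span) for value in row] for row in band]


def combine_rgb(red_band, green_band, blue_band):
    """Layer three same-shaped grayscale images into one grid of (R, G, B) tuples."""
    r, g, b = (scale_to_8bit(band) for band in (red_band, green_band, blue_band))
    height, width = len(r), len(r[0])
    return [[(r[y][x], g[y][x], b[y][x]) for x in range(width)] for y in range(height)]


# Tiny made-up 1x2 "images", one per channel
red = [[0.0, 10.0]]
green = [[4.0, 2.0]]
blue = [[1.0, 1.0]]

image = combine_rgb(red, green, blue)
print(image)
```

Each channel is scaled independently here, which is one reason the same three files can produce very different-looking colour images depending on the scaling chosen.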

I originally charged ahead using the ‘r’ band for red and the ‘g’ band for green. When I asked Brad where the ‘blue’ channel was, he explained (patiently reminding me) that it’s the spectrum we’re working with, not colour.

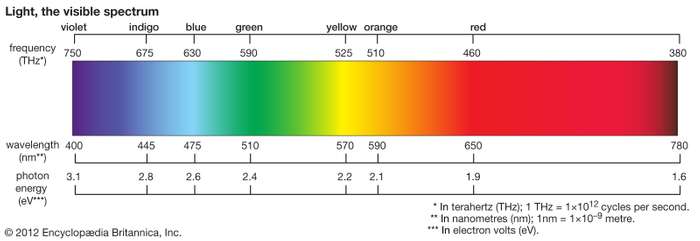

In the light spectrum, what we see as blue has wavelengths between about 450 and 495 nanometers, green from about 495 to 570, and red from about 620 to 750.

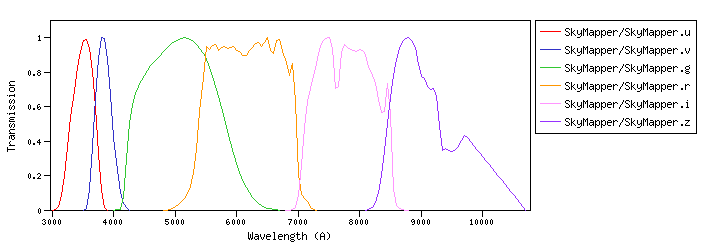

Different telescopes have different filter profiles according to what they are trying to measure. SkyMapper filters are plotted on this graph:

Comparing these graphs was useful for me in thinking through how the different bands are translated to RGB channels due to their wavelengths on the light spectrum.

So when making an RGB image from SkyMapper FITS files in ds9, I used the i band for the red channel, the r band for the green channel, and the g band for the blue channel.
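The reasoning behind that mapping can be sketched as "sort the bands by wavelength, then give the longest to red and the shortest to blue". The snippet below does exactly that. The central wavelengths are approximate values for the SkyMapper filters that I am assuming here rather than reading off the graph in this post, and the function name is made up; only the ordering matters for the result.

```python
# Approximate central wavelengths in nanometers (assumed values, for ordering only)
APPROX_CENTRAL_WAVELENGTH_NM = {
    "u": 349,
    "v": 384,
    "g": 510,
    "r": 617,
    "i": 779,
    "z": 916,
}


def assign_channels(bands):
    """Map three filter bands to RGB channels by wavelength: longest to red, shortest to blue."""
    if len(bands) != 3:
        raise ValueError("exactly three bands are needed for an RGB image")
    shortest, middle, longest = sorted(bands, key=APPROX_CENTRAL_WAVELENGTH_NM.__getitem__)
    return {"red": longest, "green": middle, "blue": shortest}


channels = assign_channels(["g", "r", "i"])
print(channels)  # {'red': 'i', 'green': 'r', 'blue': 'g'}
```

The same function would also accept other triples, such as `["v", "g", "r"]`, which gives a different but equally valid false-colour mapping.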

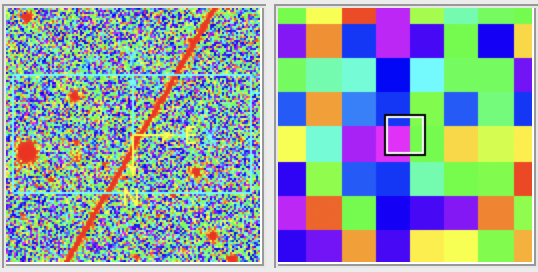

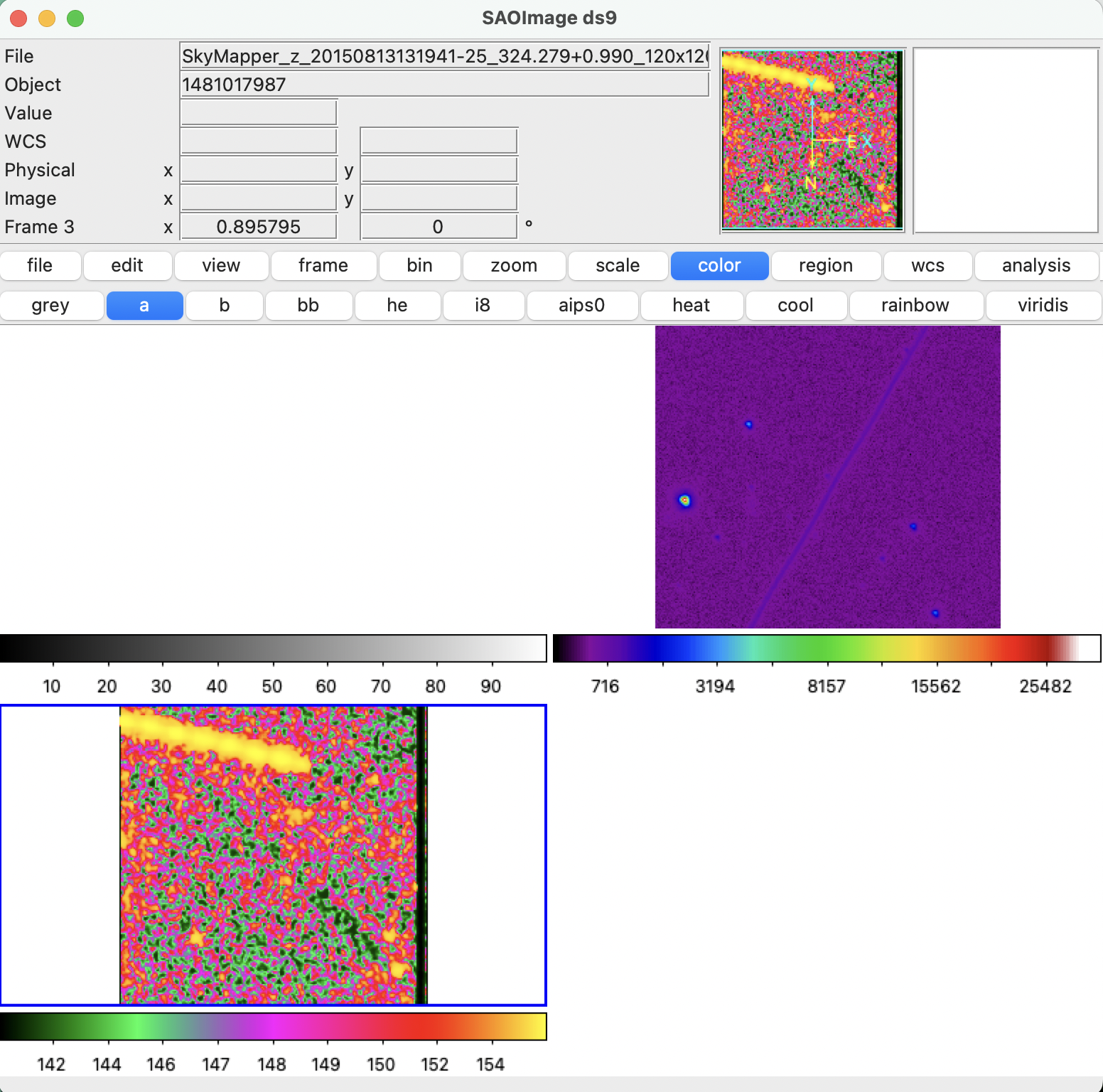

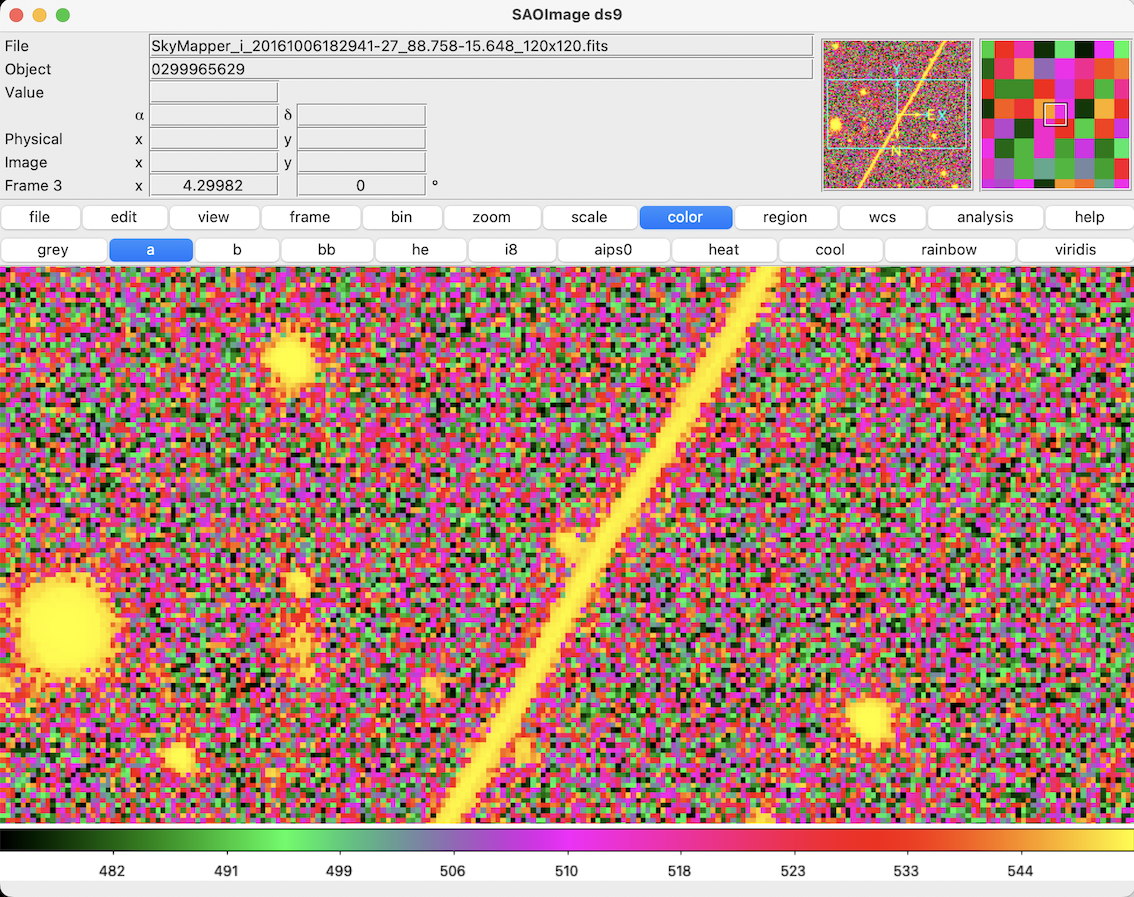

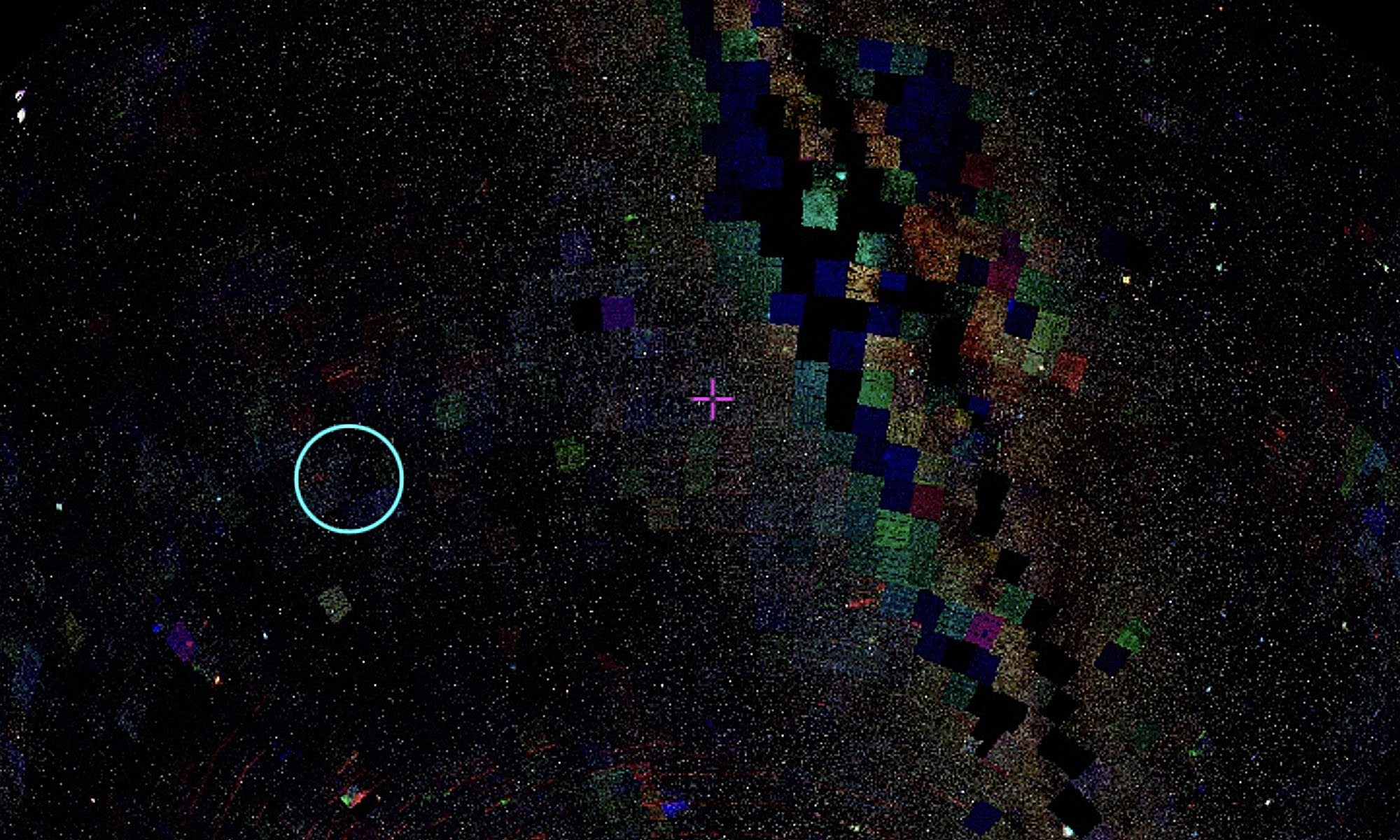

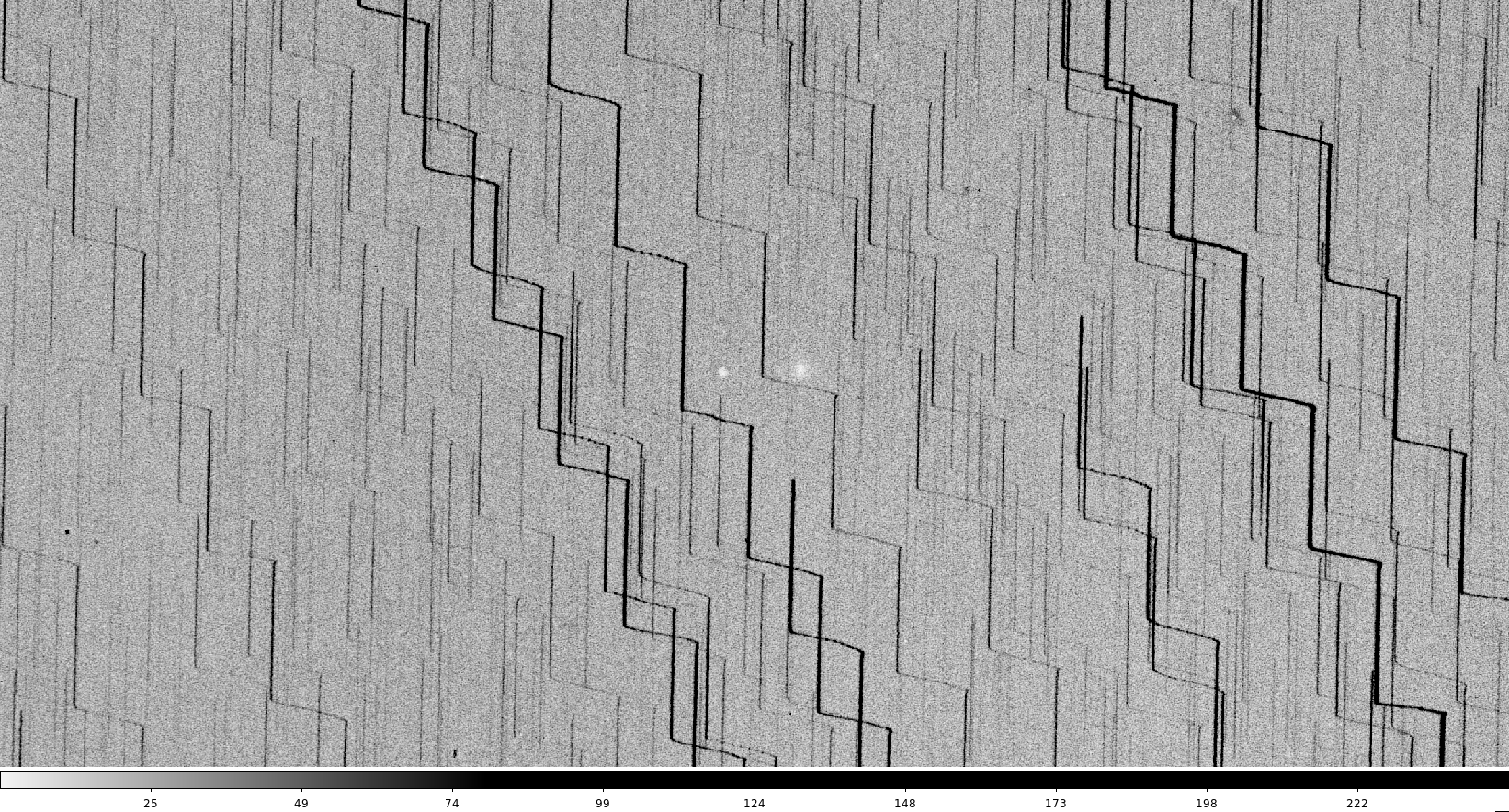

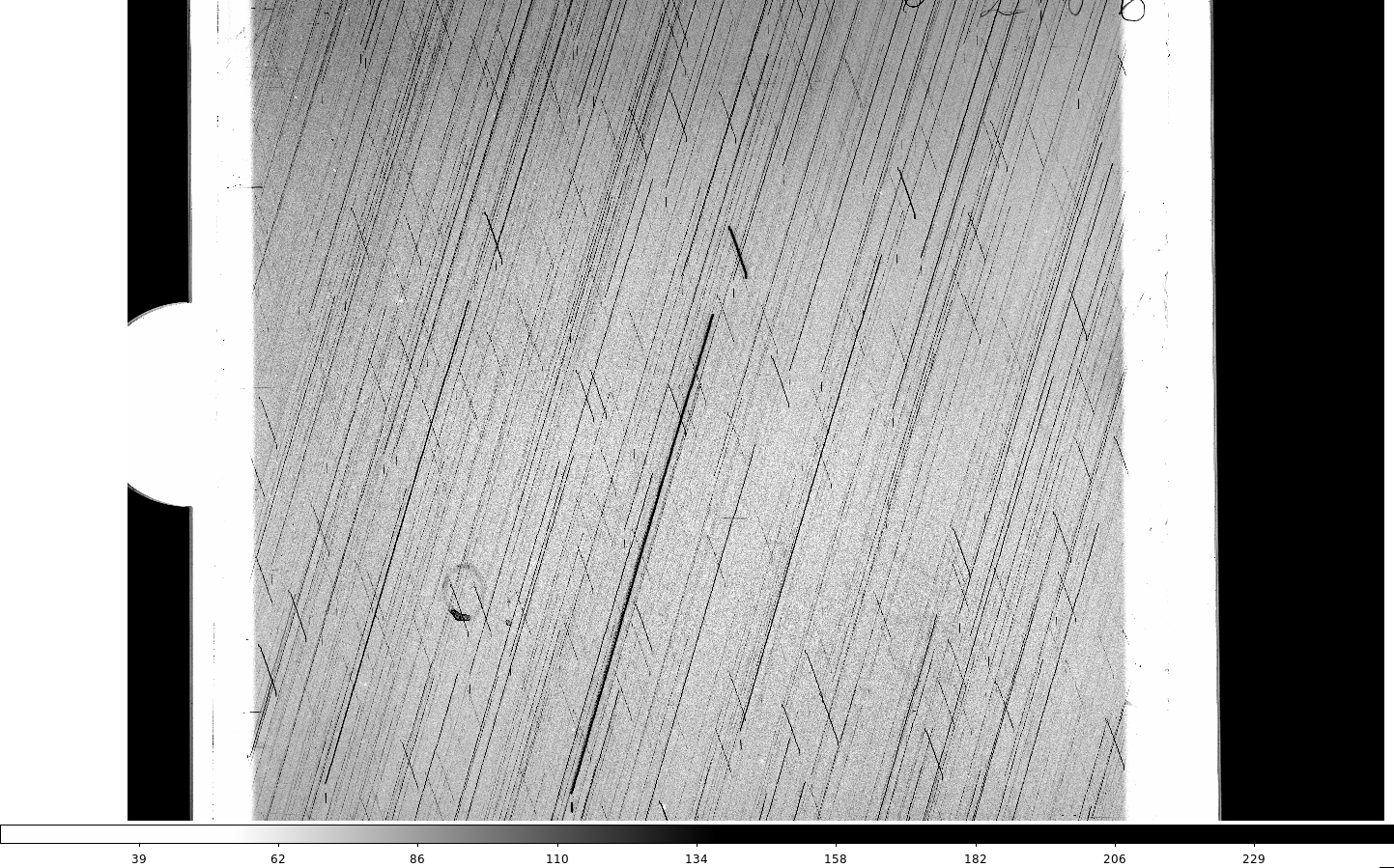

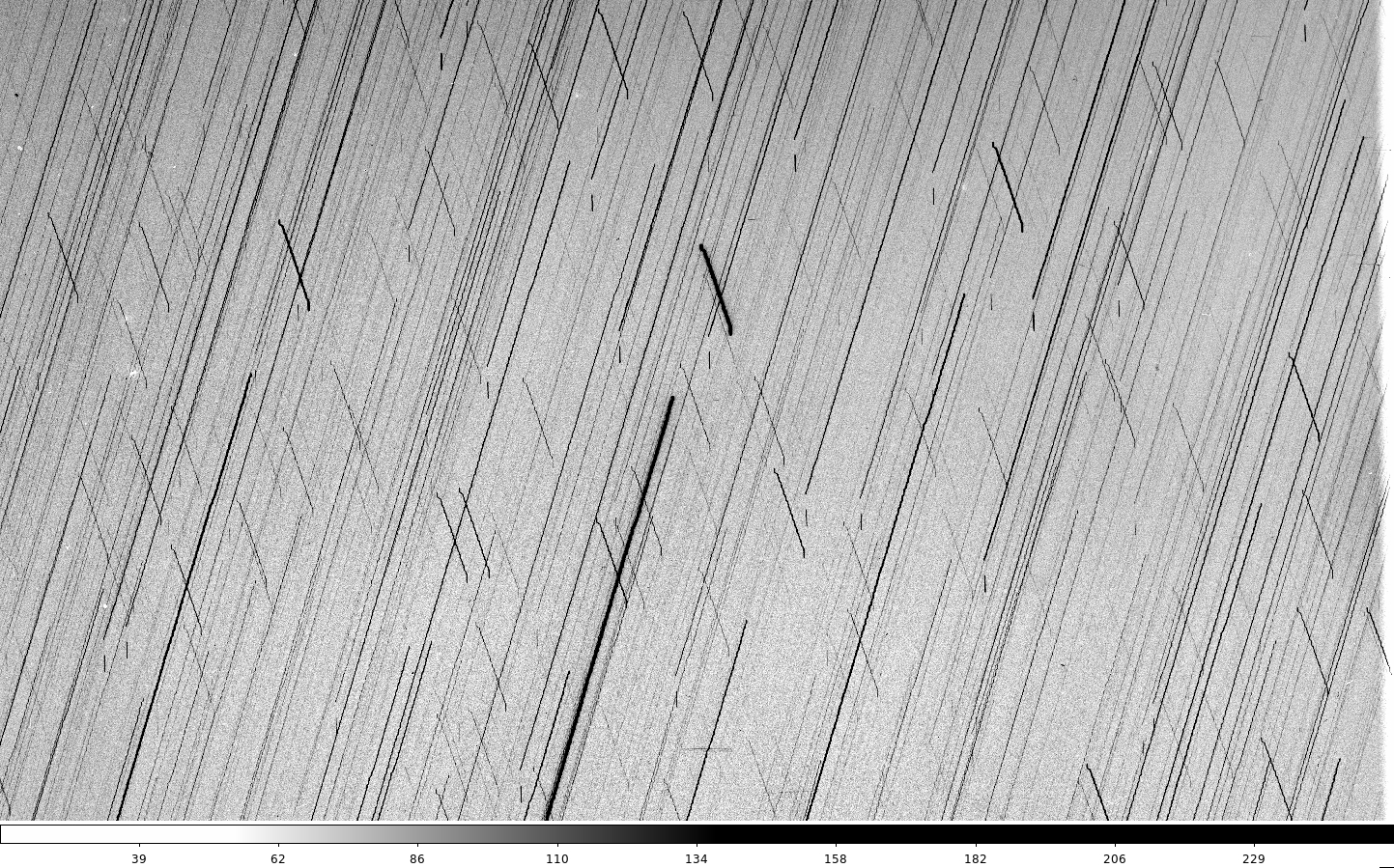

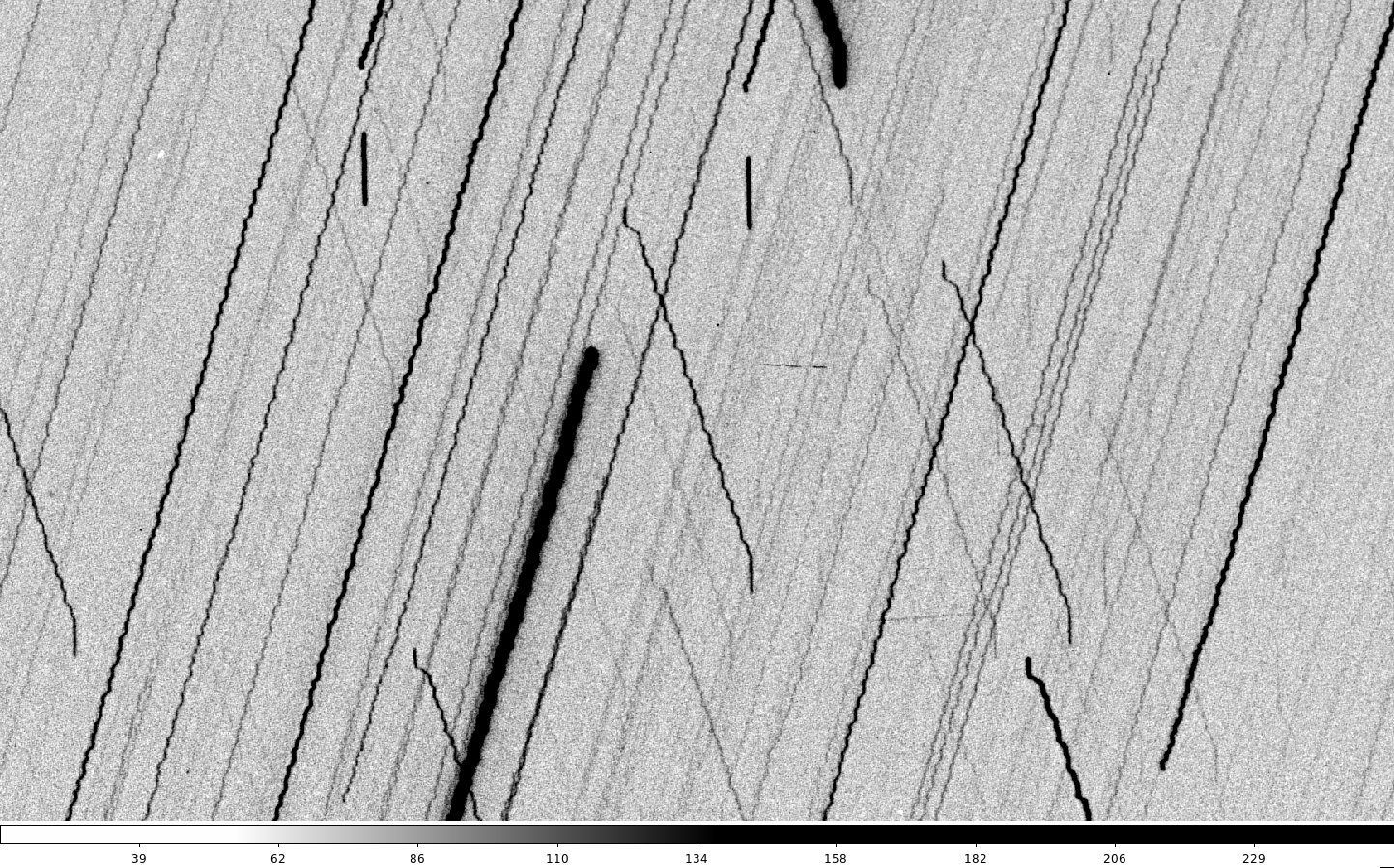

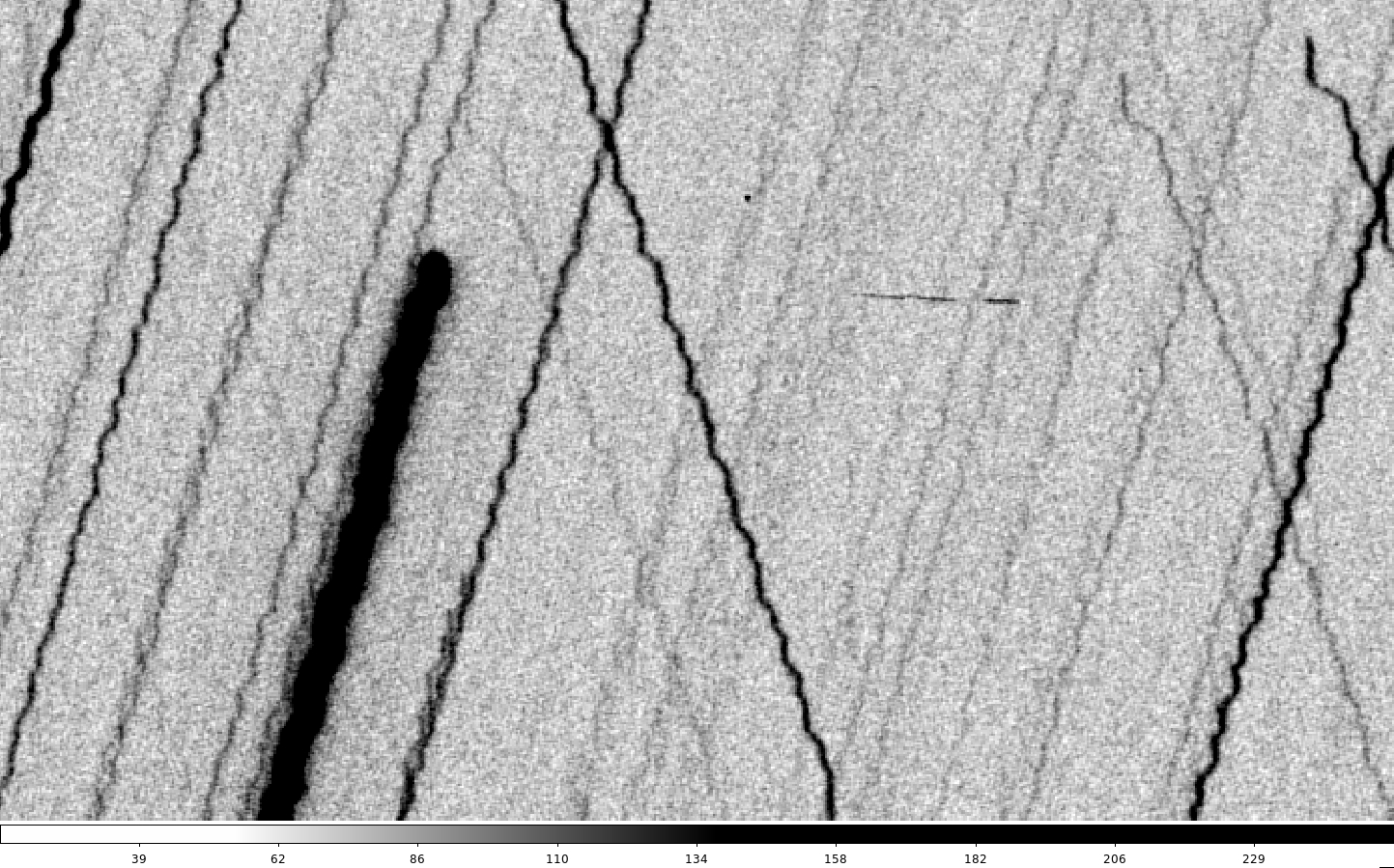

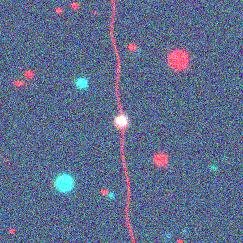

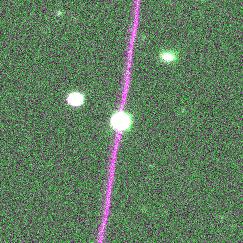

These are two contaminated SkyMapper cutouts converted in this way:

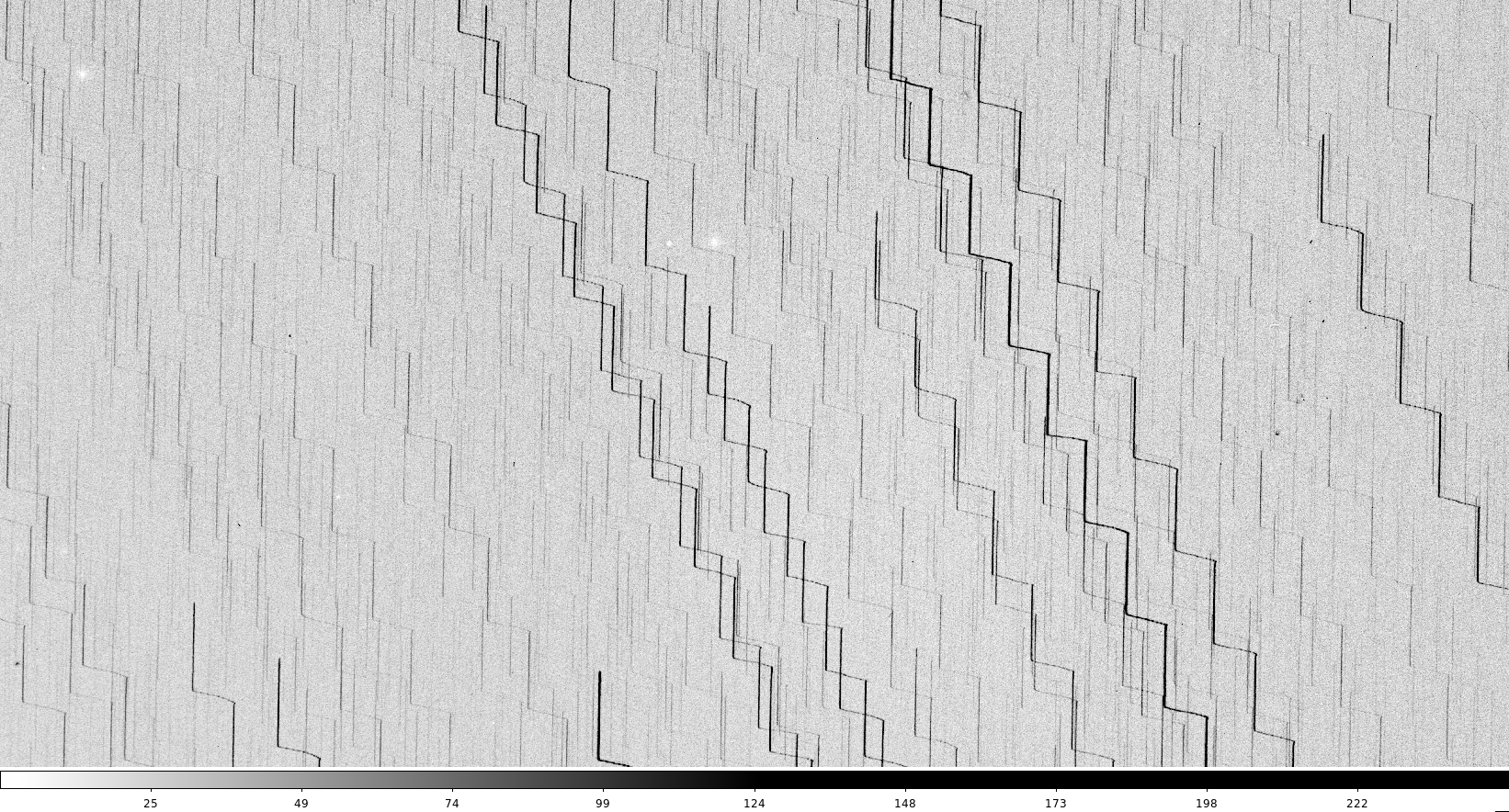

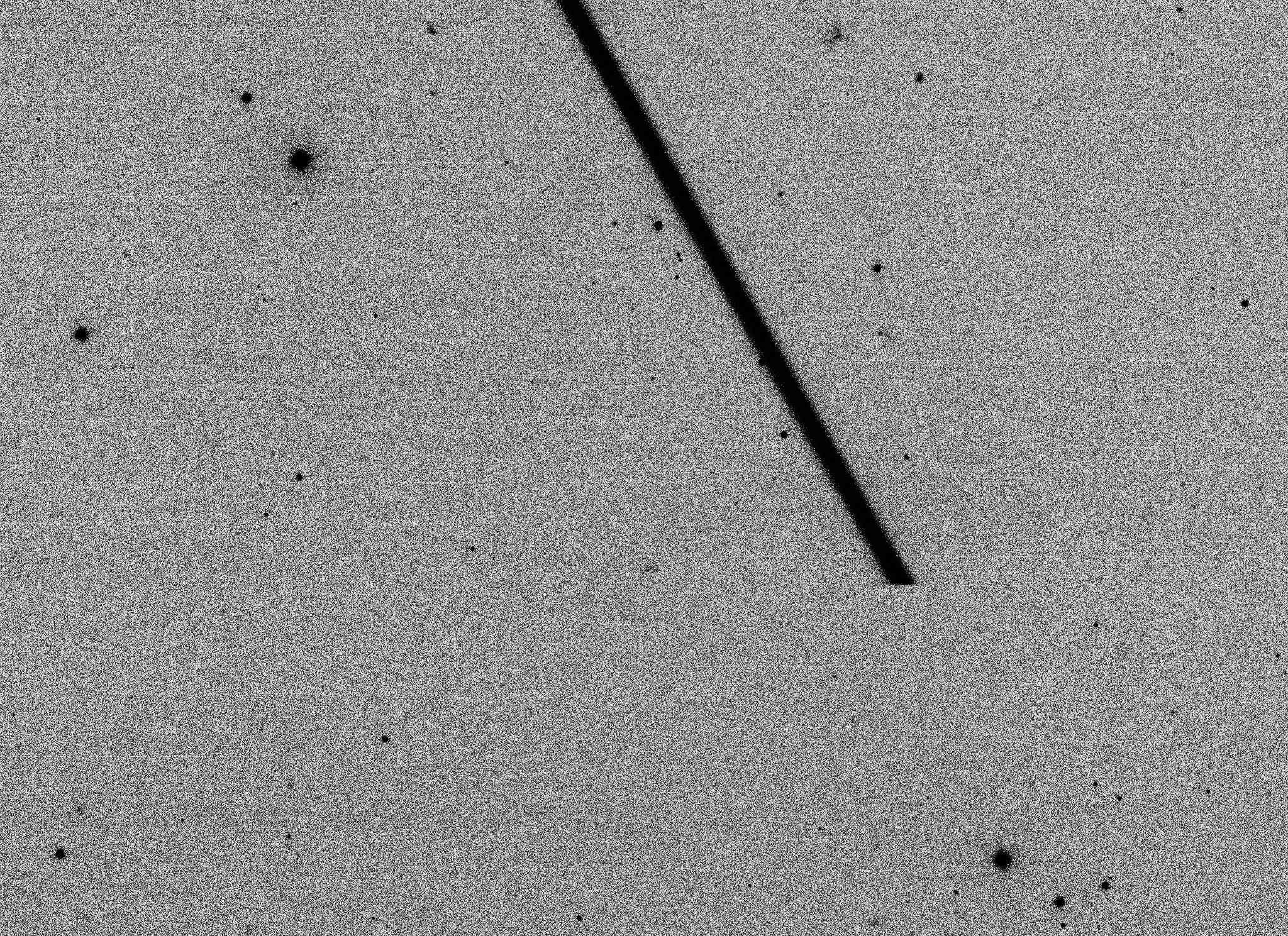

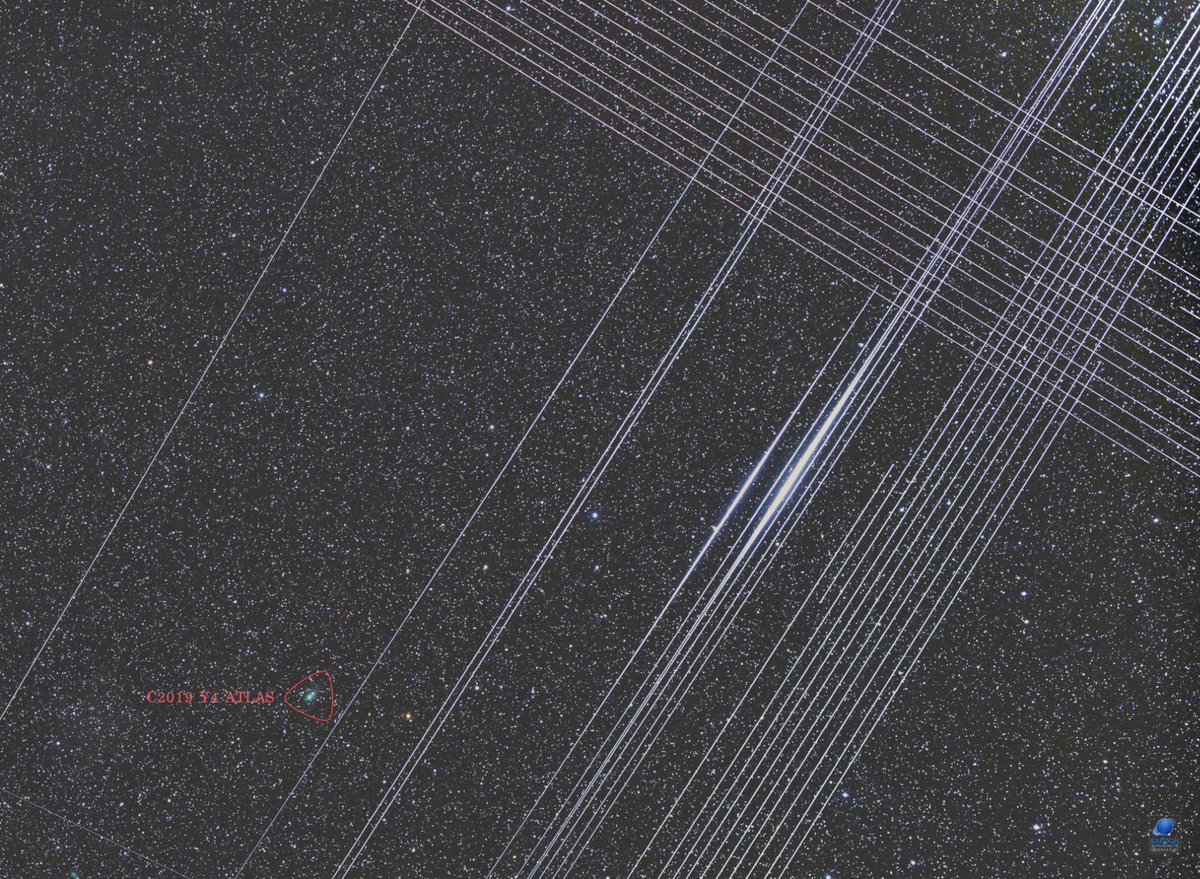

And here are some more made from simply messing around with a new imaging format with no rhyme or reason: